This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, peer-reviewed publication, trusted source, proofread.

AI-generated exam answers go undetected in real-world blind test

Experienced exam markers may struggle to spot answers generated by artificial intelligence (AI), researchers have found.

The study was conducted at the University of Reading, UK, where university leaders are working to identify potential risks and opportunities of AI for research, teaching, learning, and assessment, with updated advice already issued to staff and students as a result of their findings.

The researchers are calling on the global education sector to follow the example of Reading and other institutions that are forming new policies and guidance, and to do more to address this emerging issue.

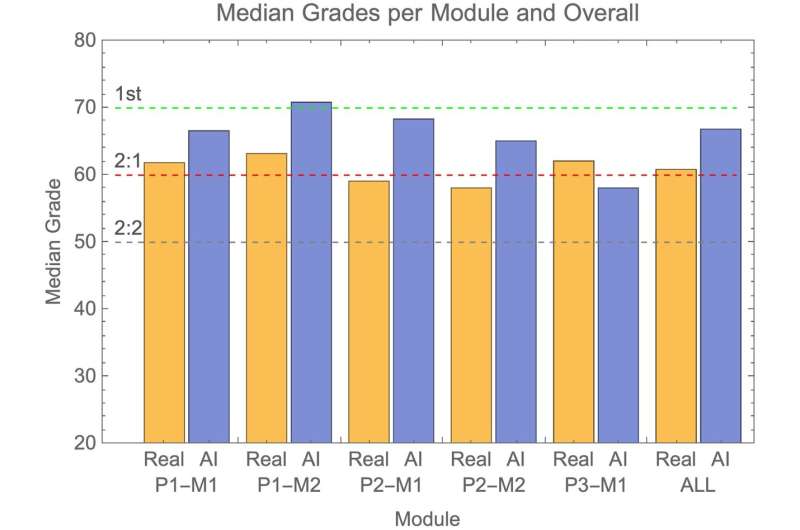

In a rigorous blind test of a real-life university examinations system, published in PLOS ONE, ChatGPT-generated exam answers submitted for several undergraduate psychology modules went undetected in 94% of cases and, on average, received higher grades than real student submissions.

To date, this is the largest and most robust blind study of its kind to challenge human educators to detect AI-generated content.

Associate Professor Peter Scarfe and Professor Etienne Roesch, who led the study at Reading's School of Psychology and Clinical Language Sciences, said their findings should provide a "wake-up call" for educators across the world. A recent UNESCO survey of 450 schools and universities found that fewer than 10% had policies or guidance on the use of generative AI.

Dr. Scarfe said, "Many institutions have moved away from traditional exams to make assessment more inclusive. Our research shows it is of international importance to understand how AI will affect the integrity of educational assessments.

"We won't necessarily go back fully to hand-written exams, but the global education sector will need to evolve in the face of AI.

"It is a testament to the candid academic rigor and commitment to research integrity at Reading that we have turned the microscope on ourselves to lead in this."

Professor Roesch said, "As a sector, we need to agree how we expect students to use and acknowledge the role of AI in their work. The same is true of the wider use of AI in other areas of life to prevent a crisis of trust across society.

"Our study highlights the responsibility we have as producers and consumers of information. We need to double down on our commitment to academic and research integrity."

Professor Elizabeth McCrum, Pro-Vice-Chancellor for Education and Student Experience at the University of Reading, said, "It is clear that AI will have a transformative effect in many aspects of our lives, including how we teach students and assess their learning.

"At Reading, we have undertaken a huge program of work to consider all aspects of our teaching, including making greater use of technology to enhance student experience and boost graduate employability skills.

"Solutions include moving away from outmoded ideas of assessment and towards those that are more aligned with the skills that students will need in the workplace, including making use of AI. Sharing with colleagues across disciplines alternative approaches that enable students to demonstrate their knowledge and skills is vitally important.

"I am confident that through Reading's already established detailed review of all our courses, we are in a strong position to help our current and future students to learn about, and benefit from, the rapid developments in AI."

More information: A real-world test of artificial intelligence infiltration of a university examinations system: A "Turing Test" case study, PLOS ONE (2024). DOI: 10.1371/journal.pone.0305354