July 24, 2024 feature

New learning-based method trains robots to reliably pick up and place objects

Most robotic systems developed to date can either tackle a specific task with high precision or complete a range of simpler tasks with low precision. For instance, some industrial robots can complete specific manufacturing tasks very well but cannot easily adapt to new tasks. On the other hand, flexible robots designed to handle a variety of objects often lack the accuracy necessary to be deployed in practical settings.

This trade-off between precision and generalization has so far hindered the wide-scale deployment of general-purpose robots, that is, robots that can assist human users well across many different tasks. One capability required for many real-world problems is "precise pick and place," which involves locating objects, picking them up, and placing them precisely in specific locations.

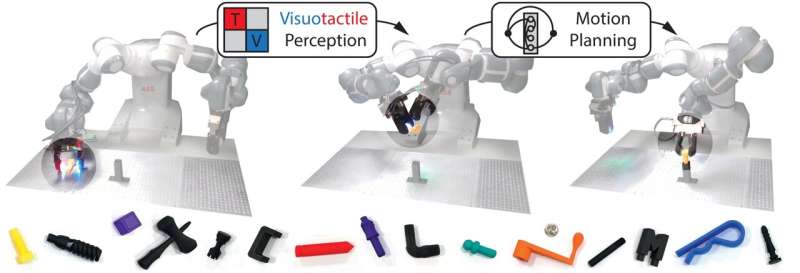

Researchers at the Massachusetts Institute of Technology (MIT) recently introduced SimPLE (Simulation to Pick Localize and placE), a new learning-based, visuo-tactile method that could allow robotic systems to pick up and place a variety of objects. This method, introduced in Science Robotics, uses simulation to learn how to pick up, re-grasp, and place different objects, requiring only computer-aided design (CAD) models of these objects.

"Over the course of several years working in robotic manipulation, we have closely interacted with industry partners," Maria Bauza and Antonia Bronars, first authors of the paper, told Tech Xplore. "It turns out that one of the existing challenges in automation is precise pick and place of objects. This problem is challenging as it requires a robot to transform an unstructured arrangement of objects into an organized arrangement, which can facilitate further manipulation."

Various industrial robots are already capable of picking up, grasping, and putting down different objects. Yet most existing approaches generalize only across a small set of widely used objects, such as boxes, cups, or bowls, and do not emphasize precision.

Bauza, Bronars and their colleagues set out to develop a new method that could allow robots to precisely pick up and place any object, relying only on simulated data. This is in contrast with many previous approaches, which learn via real-world robot interactions with different objects.

"SimPLE relies on three main components, which are developed in simulation," Bauza and Bronars said. "First, a task-aware grasping module selects an object that is stable, observable, and favorable to placing. Then, a visuo-tactile perception module fuses vision and touch to localize the object with high precision. Finally, a planning module computes the best path to the goal position, which can include handing the object off to the other arm, if necessary."

The three modules underlying the SimPLE approach ultimately allow the robotic systems to compute robust and efficient plans for manipulating varying objects with high precision. Its most notable advantage is that the robots will not need to have previously interacted with objects in the real world, which greatly speeds up their learning process.
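To make the idea of fusing vision and touch more concrete, here is a minimal sketch in Python. It is not the researchers' perception model, which is learned from simulated depth and tactile images. It only assumes, for illustration, that the object's pose is one of a few discrete candidates, that vision supplies a probability for each candidate, and that touch supplies a likelihood for each candidate. The function name `fuse_pose_estimates` and all numbers are hypothetical.

```python
def fuse_pose_estimates(vision_probs, tactile_likelihoods):
    """Combine two per-pose scores into one normalized distribution.

    Assumption (not from the paper): vision and touch are treated as
    independent evidence, so their scores are multiplied and renormalized.
    """
    if len(vision_probs) != len(tactile_likelihoods):
        raise ValueError("both inputs must score the same pose candidates")
    unnormalized = [v * t for v, t in zip(vision_probs, tactile_likelihoods)]
    total = sum(unnormalized)
    if total == 0:
        raise ValueError("vision and touch agree on no candidate pose")
    return [u / total for u in unnormalized]


# Hypothetical example with three candidate poses.
vision_probs = [0.4, 0.4, 0.2]         # vision cannot tell poses 0 and 1 apart
tactile_likelihoods = [0.9, 0.1, 0.5]  # touch strongly favors pose 0
fused = fuse_pose_estimates(vision_probs, tactile_likelihoods)
best_pose = max(range(len(fused)), key=fused.__getitem__)
```

In this toy case, vision alone leaves two poses equally likely, and the tactile evidence resolves the ambiguity in favor of the first candidate.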

"Our work proposes an approach to precise pick-and-place that achieves generality without requiring expensive real robot experience," Bauza and Bronars said. "It does so by utilizing simulation and known object shapes."

The researchers tested their proposed method in a series of experiments. They found that it allowed a robotic system to successfully pick and place 15 types of objects with a variety of shapes and sizes, while also outperforming baseline techniques for enabling object manipulation in robots.

Notably, this work is among the first to combine both visual and tactile information to train robots on complex manipulation tasks. The team's promising results could soon encourage other researchers to develop similar approaches for learning in simulation.

"The practical implications of this work are quite broad," Bauza and Bronars said. "SimPLE could fit well in industries where automation is already standard, such as in the automotive industry, but could also enable automation in many semi-structured environments such as medium-size factories, hospitals, medical laboratories, etc., where automation is less commonplace."

Semi-structured environments are settings whose general layout or structure does not change drastically, but where the placement of objects and the tasks to be performed can vary over time. SimPLE could be well-suited for allowing robots to complete tasks in these environments, without requiring extensive real-world training.

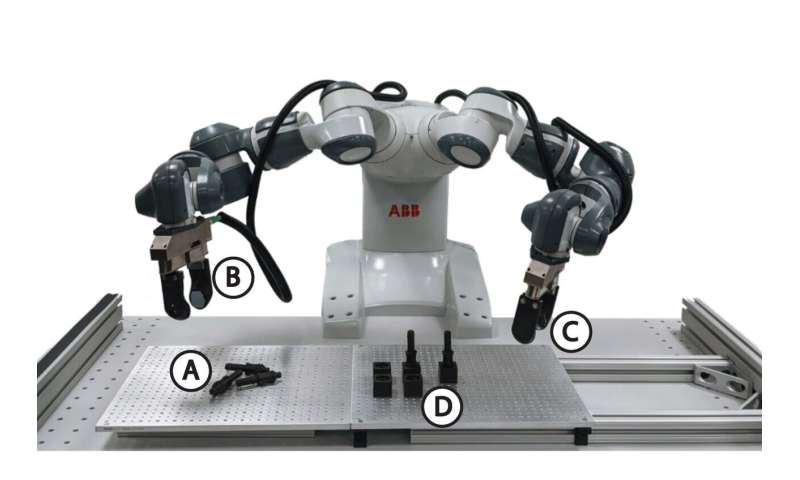

Deployment in the real world. Our approach first selects the best grasp from a set of samples on a depth image (A). The best grasp has the highest expected quality given the pose distribution estimate from vision and the precomputed grasp quality scores. Then, we executed the best grasp and updated the pose estimate, now including information from tactile in addition to the original depth image (B). Next, we took the best estimate from vision and tactile as the start pose and found a plan that leads to the goal pose using the regrasp graph if necessary (C). Last, we executed the plan (D). Credit: Maria Bauza
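The grasp selection step in panel (A) can be illustrated with a short Python sketch. It assumes, purely for illustration, that the pose estimate from vision is a probability over a few discrete candidate poses and that the precomputed quality scores form a table with one row per grasp and one column per pose. The function names and numbers are hypothetical and are not taken from the paper.

```python
def expected_quality(pose_probs, qualities):
    """Average a grasp's per-pose quality, weighted by the pose probabilities."""
    total = sum(pose_probs)  # normalize in case the inputs do not sum to 1
    return sum(p / total * q for p, q in zip(pose_probs, qualities))


def select_best_grasp(pose_probs, quality_table):
    """Return (index, score) of the grasp with the highest expected quality."""
    scores = [expected_quality(pose_probs, row) for row in quality_table]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Hypothetical inputs: three candidate poses, three sampled grasps.
pose_probs = [0.7, 0.2, 0.1]
quality_table = [
    [0.9, 0.1, 0.1],  # grasp 0: excellent if the likely pose is correct
    [0.6, 0.6, 0.6],  # grasp 1: decent regardless of pose
    [0.2, 0.9, 0.9],  # grasp 2: good only for the unlikely poses
]
best_grasp, best_score = select_best_grasp(pose_probs, quality_table)
```

With these numbers the first grasp wins, because its high quality under the most probable pose outweighs its poor quality under the less probable ones.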

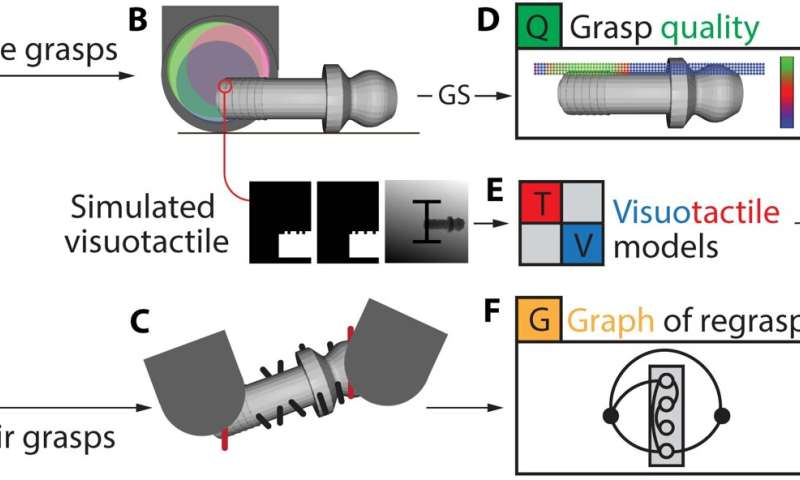

Generating models in simulation. Starting from the object’s CAD model (A), we sampled two types of grasps on the object. Table grasps (B) are accessible from the object’s resting pose on the table. For each table grasp, we simulated corresponding depth and tactile images and used these images to learn visuo-tactile perception models (E). In-air grasps (C) are accessible during regrasps. We connected in-air grasp samples that are kinematically feasible into a graph of regrasps (F). We used the visuo-tactile model and regrasp graph to compute the observability (Obs) and manipulability (Mani) of a grasp and combined these with grasp stability (GS) to evaluate the quality of each table grasp (D). Credit: Maria Bauza
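The regrasp graph in panel (F) lends itself to a simple search example. The Python sketch below assumes the graph is stored as a dictionary that maps each grasp to the grasps reachable from it by one feasible handoff, and it uses breadth-first search to find a plan with the fewest regrasps. The source does not say which search method or cost the researchers use, so breadth-first search is a stand-in here, and the grasp names are hypothetical.

```python
from collections import deque


def plan_regrasps(graph, start, goals):
    """Return the shortest grasp sequence from start to any goal grasp, or None."""
    goals = set(goals)
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node in goals:
            # Walk the parent links back to the start to rebuild the plan.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for neighbor in graph.get(node, ()):
            if neighbor not in parent:
                parent[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical graph: edges are kinematically feasible handoffs.
regrasp_graph = {
    "table_grasp": ["air_a", "air_b"],
    "air_a": ["air_c"],
    "air_b": ["place_grasp"],
    "air_c": ["place_grasp"],
}
plan = plan_regrasps(regrasp_graph, "table_grasp", ["place_grasp"])
```

Here the search returns the route through `air_b`, which needs one handoff fewer than the route through `air_a` and `air_c`. If the starting grasp already allows placing, the plan contains only that grasp and no regrasp is needed.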

"In these settings, being able to take an unstructured set of objects into a structured arrangement is an enabler for any downstream task," Bauza and Bronars explained. "For instance, an example of a pick-and-place task in a medical lab would be taking new testing tubes from a box and placing them precisely into a rack. After the tubes are arranged, they could then be placed in a machine designed to test its content or could serve other scientific purposes."

The promising method developed by this team of researchers could soon be trained on a wider range of simulated data and models of more objects, to further validate its performance and generalizability. Meanwhile, Bauza, Bronars and their colleagues are working to increase the dexterity and robustness of their proposed system.

"Two directions of future work include enhancing the dexterity of the robot to solve even more complex tasks, and providing a closed-loop solution that, instead of computing a plan, computes a policy to adapt its actions continuously based on the sensors' observations," Bauza and Bronars added.

"We made progress in the latter in TEXterity, which leverages continuous tactile information during task execution, and we plan to continue pushing dexterity and robustness for high-precision manipulation in our ongoing research."

More information: Maria Bauza et al, SimPLE, a visuotactile method learned in simulation to precisely pick, localize, regrasp, and place objects, Science Robotics (2024). DOI: 10.1126/scirobotics.adi8808

© 2024 Science X Network