This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, trusted source, proofread.

Reining in AI: What NZ can learn from EU regulation

Artificial intelligence now underpins many of our activities in the public and private spheres, including work, education, travel, and leisure. But its rapid development and increasing use have been driven primarily by commercial interests rather than by deliberative policy or legislative choices.

As a result, there is growing unease about its impact on individual and collective human rights, such as privacy and freedom of expression, as well as on intellectual property.

Recently, this concern has translated into a wave of global, regional, and domestic regulatory initiatives. The European Union's Artificial Intelligence Act, expected to enter into force in the next month, is groundbreaking in scope and scale.

The act goes beyond previous global and national initiatives (such as responsible AI charters and ethical AI statements) to establish a comprehensive regulatory framework, including an enforcement and penalties regime.

Some New Zealand-based companies may face specific compliance requirements (if their products are available on the EU market or affect people in the EU), but of wider importance will be the act's influence on global norms.

The EU is the world's largest single market and a global standard setter. Given the closer links being forged through the New Zealand-EU Free Trade Agreement, New Zealand should monitor these regulatory developments closely.

The EU AI Act is best thought of as product safety legislation, which aims to protect people from harm and promote trustworthy and safe use of AI.

Its core structure is a risk-based framework for AI, with tiered requirements based on the level of risk. High-risk AI systems (for example systems used in employment, education, and critical infrastructure) will be subject to a conformity assessment, where the provider must demonstrate compliance with requirements such as transparency and cybersecurity.

Most high-risk systems will need to be registered in a public EU database. Governance structures are being established at EU level and in individual member states to monitor compliance, set standards for implementation, and provide expert advice.

Of global interest are the decisions about the prohibition of some types of AI (through the act's "unacceptable risk" categorization). These decisions are likely to shape the evolving global conversation on whether some AI systems should be completely prohibited as being incompatible with fundamental rights and human dignity.

Systems the act will prohibit include social scoring, untargeted scraping of facial images (for example, to build facial recognition databases), and most types of emotion recognition, biometric categorization, and predictive policing applications.

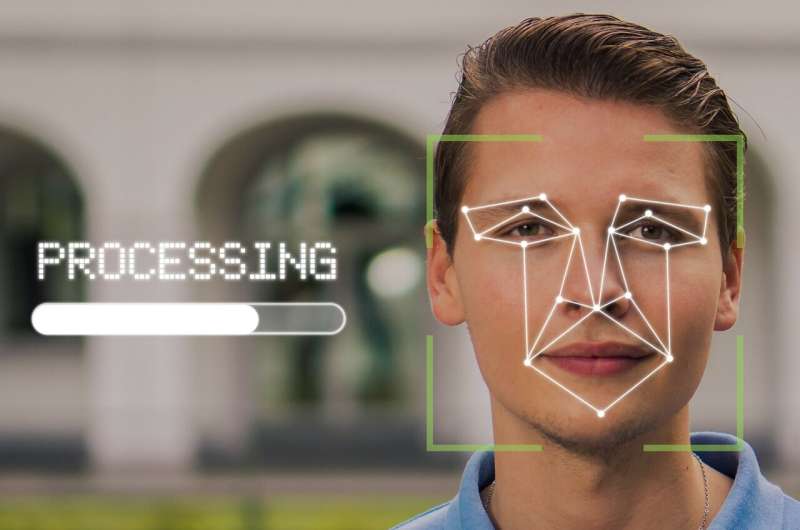

Yet despite concerted action from civil liberties groups, the regulation stops short of a complete prohibition on real-time remote biometric surveillance, of which live facial recognition technology is the most familiar example.

Although this type of surveillance falls within the "unacceptable risk" category, there are significant carve-outs for law enforcement purposes, as explored in a recent article in Laws. In addition, national security, defense, and military purposes are outside the remit of the act, so the technology will continue to be used.

There is also a significant risk that, by defining the instances in which real-time remote biometric surveillance is justified, the EU may be seen as endorsing the technology. This could lead to increased use in national jurisdictions without the necessary community endorsement or engagement with particularly affected groups.

In the global jostle to host and develop technology hubs, there is considerable debate as to what regulatory settings best promote innovation in AI. Some contend that the EU's approach could strangle innovation, but others would say that certainty and bright lines provide a firm base for investment and innovation.

Overall, the EU approach is premised on the idea that when consumers trust AI systems and have confidence in their quality, they are more willing to use AI, both commercially and when engaging with public services.

So what can New Zealand learn from the EU's approach?

New Zealand has significant gaps in its regulatory regime that may hamper innovation and endanger public trust in AI.

There is a comparatively weak privacy and data protection regime, a lack of accessible avenues for individuals and communities to know when AI is used or raise complaints about the impact of AI and similar technologies on their rights and interests, a lack of robust enforcement or penalty mechanisms, and an outdated legislative regime for state surveillance.

Without a people-centered, trustworthy, and robust regulatory framework, uptake of and trust in AI are likely to suffer. Although Aotearoa New Zealand has a unique societal and cultural context that requires a tailored approach, the concepts and framework in the EU AI Act provide a firm foundation for people-centered regulation of AI.

More information: Nessa Lynch, Facial Recognition Technology in Policing and Security—Case Studies in Regulation, Laws (2024). DOI: 10.3390/laws13030035