This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, preprint, trusted source, proofread.

Smart diagnostics: Possible uses of generative AI to empower nuclear plant operators

Imagine being able not only to detect a fault in a complex system but also to receive a clear, understandable explanation of its cause, just like having a seasoned expert by your side. This is the promise of combining a large language model (LLM) such as GPT-4 with advanced diagnostic tools.

In a paper posted to the arXiv preprint server, engineers at the U.S. Department of Energy's (DOE) Argonne National Laboratory explore how this novel idea could improve operators' understanding of, and trust in, diagnostic information in complex systems such as nuclear power plants.

The goal is to help operators make better decisions when something goes wrong by explaining, in human-understandable terms, what is wrong, and why and how it went wrong.

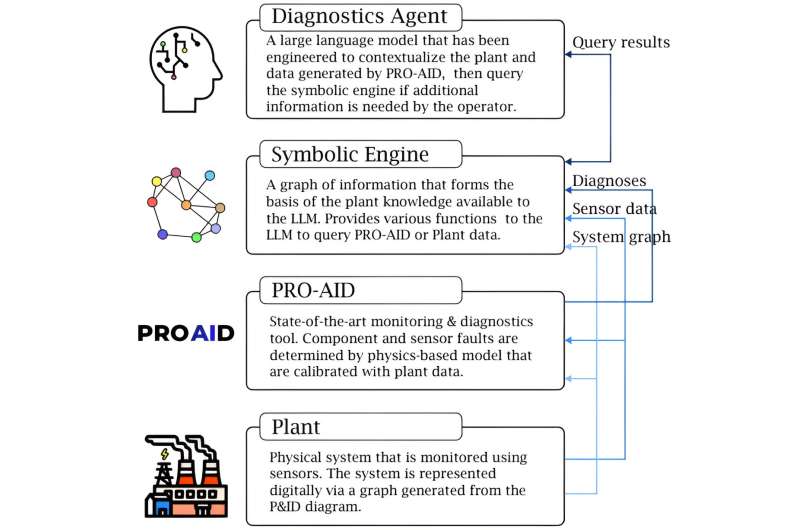

To achieve this, Argonne engineers combined three elements: an Argonne diagnostic tool called PRO-AID, a symbolic engine and an LLM. The diagnostic tool uses facility data and physics-based models to identify faults.

The symbolic engine acts as an intermediary between PRO-AID and the LLM. It creates a structured representation of the fault reasoning process and constrains the LLM's output space, which is intended to eliminate hallucinations. The LLM then explains these faults in a way that operators can understand.
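The article does not describe the symbolic engine's internals. As a rough illustration only, the sketch below assumes the engine turns a diagnosis into a closed list of factual statements that the LLM may rephrase but not extend. The classes `Evidence` and `Diagnosis`, the function `to_facts`, the sensor name `T_outlet` and the fault label are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Evidence:
    """Hypothetical evidence item: a sensor whose reading departs from expectation."""
    sensor: str
    measured: float
    expected: float
    unit: str


@dataclass
class Diagnosis:
    """Hypothetical structured result passed from the diagnostic tool to the symbolic layer."""
    fault: str
    probability: float
    evidence: list[Evidence] = field(default_factory=list)


def to_facts(diag: Diagnosis) -> list[str]:
    """Render the diagnosis as a closed list of statements.

    In this sketch the explanation layer may only rephrase these statements,
    which is one way to narrow the space of things an LLM can assert.
    """
    facts = [f"Most likely fault: {diag.fault} (probability {diag.probability:.2f})."]
    for ev in diag.evidence:
        deviation = ev.measured - ev.expected
        facts.append(
            f"{ev.sensor} reads {ev.measured:g} {ev.unit}, expected "
            f"{ev.expected:g} {ev.unit} (deviation {deviation:+g} {ev.unit})."
        )
    return facts


diag = Diagnosis("T_outlet_sensor_drift", 0.83, [Evidence("T_outlet", 401.5, 395.0, "K")])
for fact in to_facts(diag):
    print(fact)
```

Keeping the facts as plain strings makes the boundary explicit: whatever the LLM says should be traceable to one of these lines.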

"The system has the potential to enhance the training of our nuclear workforce and streamline operations and maintenance tasks," says Rick Vilim, manager of the Plant Analysis and Control and Sensors department at Argonne.

PRO-AID works by comparing real-time plant data with expected normal behavior. It uses models that simulate the plant's components and how they should behave under normal conditions; a mismatch between the measured data and the model predictions indicates a fault. Based on these mismatches, PRO-AID provides a probability distribution over possible faults.
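PRO-AID's actual models and inference method are not detailed here. The following is a minimal sketch of the general mismatch-based idea, assuming Gaussian sensor noise with known standard deviations, a fixed mismatch pattern ("signature") for each fault hypothesis and a uniform prior. All sensor names, values and fault labels are hypothetical.

```python
import math

# Hypothetical plant snapshot: measured values vs. model-predicted normal values.
measured = {"T_inlet": 350.2, "T_outlet": 401.5, "flow": 12.1}
expected = {"T_inlet": 350.0, "T_outlet": 395.0, "flow": 12.0}
sigma = {"T_inlet": 1.0, "T_outlet": 1.5, "flow": 0.2}  # assumed noise std devs

# Hypothetical fault hypotheses, each with the mismatch pattern it would produce.
fault_signatures = {
    "no_fault": {"T_inlet": 0.0, "T_outlet": 0.0, "flow": 0.0},
    "T_outlet_sensor_drift": {"T_inlet": 0.0, "T_outlet": 6.0, "flow": 0.0},
    "heater_overpower": {"T_inlet": 2.0, "T_outlet": 6.0, "flow": 0.0},
}


def residuals(measured: dict, expected: dict) -> dict:
    """Mismatch between measured data and expected normal behavior."""
    return {k: measured[k] - expected[k] for k in expected}


def fault_posterior(res: dict, signatures: dict, sigma: dict) -> dict:
    """Probability distribution over faults from Gaussian likelihoods and a uniform prior."""
    log_like = {
        fault: sum(-0.5 * ((res[k] - sig[k]) / sigma[k]) ** 2 for k in res)
        for fault, sig in signatures.items()
    }
    top = max(log_like.values())  # subtract the max for numerical stability
    weights = {f: math.exp(v - top) for f, v in log_like.items()}
    total = sum(weights.values())
    return {f: w / total for f, w in weights.items()}


posterior = fault_posterior(residuals(measured, expected), fault_signatures, sigma)
best = max(posterior, key=posterior.get)
print(best, round(posterior[best], 2))
```

Here the large outlet-temperature mismatch, with almost no inlet mismatch, favors a drifting outlet sensor over a heater fault; a real system would use physics-based models rather than hand-written signatures.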

A key challenge with LLMs is ensuring that they provide accurate information. The authors address this by designing a symbolic engine that manages the information the LLM uses, ensuring it provides only explanations grounded in the plant data and models.
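One simple way to check that an explanation stays within the supplied information, shown here as a stand-in rather than the authors' symbolic-engine method, is to scan the LLM's text for plant terms the diagnosis did not provide. The function `ungrounded_terms` and the identifiers in the vocabulary are hypothetical.

```python
import re


def ungrounded_terms(explanation: str, vocabulary: set[str], allowed: set[str]) -> set[str]:
    """Return plant terms that appear in the explanation but were not supplied.

    vocabulary: every sensor/fault identifier known for the plant (hypothetical).
    allowed: the identifiers present in the current diagnosis.
    Matching is exact on identifier-like tokens, so this is only a coarse check.
    """
    tokens = set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", explanation))
    return (tokens & vocabulary) - allowed


vocabulary = {"T_inlet", "T_outlet", "flow_meter", "pump_trip", "T_outlet_sensor_drift"}
allowed = {"T_outlet", "T_outlet_sensor_drift"}
text = "The T_outlet reading drifted upward; a pump_trip may also have occurred."
print(ungrounded_terms(text, vocabulary, allowed))
```

A post-hoc check like this only catches mentions of known identifiers; constraining the output space before generation, as the article describes, is a stronger guarantee.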

The LLM is used to explain the results from PRO-AID. It takes complex technical data and translates it into easy-to-understand language, helping operators understand the cause of the fault and the reasoning behind the diagnosis. Operators can also use the LLM to ask free-form, natural-language questions about the system and its sensor measurements.
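A hedged sketch of how structured diagnostic facts and a free-form operator question might be combined into a single grounded prompt. The function `build_prompt`, the instruction wording and the example facts are assumptions; the call to an actual LLM is omitted.

```python
def build_prompt(facts: list[str], question: str) -> str:
    """Combine diagnostic facts and a free-form operator question into one prompt.

    The wording is a hypothetical example; sending it to an LLM is left out.
    """
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        "You are assisting a plant operator. Answer using only the facts below; "
        "if they do not answer the question, say so.\n\n"
        f"Facts:\n{fact_lines}\n\n"
        f"Operator question: {question}\n"
    )


facts = [
    "Most likely fault: T_outlet_sensor_drift (probability 0.83).",
    "T_outlet reads 401.5 K, expected 395 K (deviation +6.5 K).",
]
print(build_prompt(facts, "Why do you think the outlet sensor is faulty?"))
```

Putting the facts and the question in one message lets the model answer arbitrary questions while still being told where its information must come from.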

The system was tested at Argonne's Mechanisms Engineering Test Loop Facility (METL), the nation's largest liquid metal test facility, where small- and medium-sized components are tested for use in advanced sodium-cooled nuclear reactors.

The system diagnosed a faulty sensor and explained the issue to the operators. This demonstrates that combining a diagnostic tool with an LLM can effectively provide understandable and trustworthy explanations for faults in complex systems.

More information: Akshay J. Dave et al., Integrating LLMs for Explainable Fault Diagnosis in Complex Systems, arXiv (2024). DOI: 10.48550/arxiv.2402.06695