Large sequence models for sequential decision-making

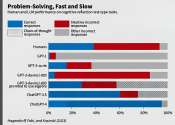

Transformer architectures have facilitated the development of large-scale, general-purpose sequence models for prediction tasks in natural language processing and computer vision, such as GPT-3 (language) and Swin Transformer (vision).

Dec 15, 2023
