July 17, 2015 report

Robots pass 'wise-men puzzle' to show a degree of self-awareness

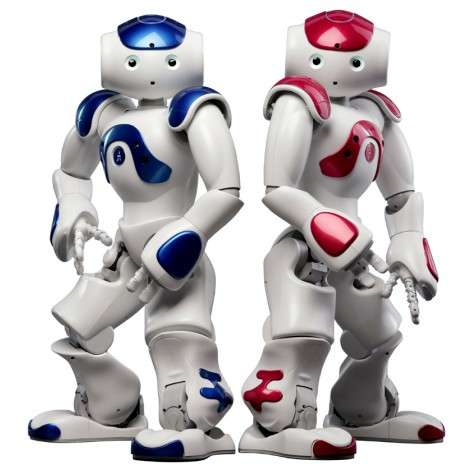

A trio of Nao robots has passed a modified version of the "wise-men puzzle" and, in so doing, has taken another step toward demonstrating self-awareness in robots. The researchers demonstrated the feat to the press at Rensselaer Polytechnic Institute in New York ahead of a presentation they will give at next month's RO-MAN conference in Kobe, Japan.

The wise-men puzzle is a classic test of self-awareness. It goes like this: a king looking for a new wise man to counsel him summons three of the wisest men he can find to his quarters. He places a hat on each man's head from behind, so that none of them can see his own hat. He then tells them that each hat is either blue or white, that the contest is being conducted fairly, and that the first man to deduce the color of the hat on his own head wins. The only way the contest can be fair is if all three men wear the same color hat. Thus the first man to note the color of the hats on the other two and declare his own to be the same color wins.

In the robot version, the hats were replaced by a "dumbing pill." The roboticists programmed the three humanoid robots to "believe" that two of them had been given a dumbing pill that rendered them mute, without "knowing" which two. In reality, two of the robots were muted by pressing a button on their heads. The three robots were then asked which of them had not received the pill. All three attempted to answer "I don't know," but only one was able to say it aloud, which meant it was the one that had not been muted. Upon hearing its own voice, that robot changed its answer and declared that it was the one that had not received the dumbing pill.
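The deduction the unmuted robot makes can be sketched in a few lines of Python. This is a toy model for illustration only, not the RPI team's software: the `Robot` class, its `muted` flag, and the `try_to_speak` and `run_test` helpers are hypothetical, and the robot's reasoning is reduced to a single conditional rather than a formal logic.

```python
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    # Hidden state set by the experimenters (the button press); the robot
    # cannot inspect this flag directly, it can only observe its effect.
    muted: bool

    def try_to_speak(self, utterance: str) -> bool:
        """Attempt to say `utterance` aloud; return True if the robot hears itself."""
        return not self.muted

    def answer(self) -> list[str]:
        """Return what this robot audibly says when asked who was not given the pill."""
        audible = []
        # Step 1: from its premises alone ("two of us were given the pill"),
        # the robot cannot tell which robot was spared, so it says "I don't know".
        if self.try_to_speak("I don't know"):
            audible.append("I don't know")
            # Step 2: premise "a robot given the pill cannot speak" plus the
            # observation "I just heard myself" implies "I was not given the pill".
            audible.append("I was not given the dumbing pill.")
        # A muted robot produces no audible answer at all.
        return audible


def run_test(muted_flags: dict[str, bool]) -> dict[str, list[str]]:
    """Ask every robot the question and collect what each one audibly says."""
    robots = [Robot(name, muted) for name, muted in muted_flags.items()]
    return {robot.name: robot.answer() for robot in robots}


# Hypothetical setup: robots A and C are muted, robot B is not.
transcript = run_test({"A": True, "B": False, "C": True})
for name, lines in transcript.items():
    print(name, lines)
```

Only robot B produces any speech, and it is the only one that revises "I don't know" into a claim about itself; the point of the sketch is that the new knowledge comes from the robot observing its own output, not from any information about the other two robots.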

This small exercise shows that robots can achieve some degree of self-awareness, and it represents a notable step toward more ambitious goals. Selmer Bringsjord, speaking for the research team, told those in attendance that incrementally adding abilities like the one demonstrated will, over time, lead to robots with more useful attributes. He notes that he and his team are not concerned with questions of consciousness; instead, they want to build robots capable of doing things that might be considered examples of conscious behavior.

More information: Moral Reasoning & Decision-Making: ONR: MURI/Moral Dilemmas: rair.cogsci.rpi.edu/projects/muri/

© 2015 Tech Xplore