World's first 1,000-processor chip

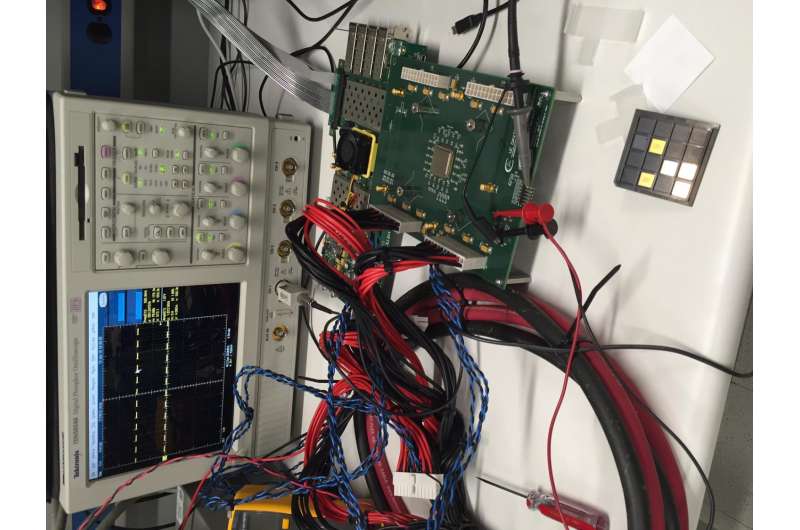

A microchip containing 1,000 independent programmable processors has been designed by a team at the University of California, Davis, Department of Electrical and Computer Engineering. The energy-efficient "KiloCore" chip has a maximum computation rate of 1.78 trillion instructions per second and contains 621 million transistors. The KiloCore was presented at the 2016 Symposium on VLSI Technology and Circuits in Honolulu on June 16.

"To the best of our knowledge, it is the world's first 1,000-processor chip and it is the highest clock-rate processor ever designed in a university," said Bevan Baas, professor of electrical and computer engineering, who led the team that designed the chip architecture. While other multiple-processor chips have been created, none exceeds about 300 processors, according to an analysis by Baas' team. Most were created for research purposes and few are sold commercially. The KiloCore chip was fabricated by IBM using its 32 nm CMOS technology.

Each processor core can run its own small program independently of the others, which is a fundamentally more flexible approach than the so-called Single-Instruction-Multiple-Data (SIMD) approaches used by processors such as GPUs. The idea is to break an application up into many small pieces, each of which can run in parallel on a different processor, enabling high throughput with lower energy use, Baas said.
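As a loose software analogy for splitting an application into small independent pieces, the sketch below runs each piece as its own worker and passes data from one worker to the next. It is not KiloCore code: Python threads and queues stand in for cores and their links, and the names `stage` and `run_pipeline` are hypothetical, chosen only for illustration.

```python
import queue
import threading

SENTINEL = object()  # unique marker for the end of the data stream


def stage(func, inbox, outbox):
    """One independent 'small program': read, transform, pass along."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # tell the next stage to stop as well
            return
        outbox.put(func(item))


def run_pipeline(funcs, data):
    """Run one worker per function, each linked to its neighbor by a queue."""
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    workers = [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for w in workers:
        w.start()

    # Feed the first stage, then signal the end of input.
    for item in data:
        queues[0].put(item)
    queues[0].put(SENTINEL)

    # Collect everything that comes out of the last stage.
    results = []
    while True:
        out = queues[-1].get()
        if out is SENTINEL:
            break
        results.append(out)

    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([lambda x: x + 1, lambda x: x * 2], [1, 2, 3]))
```

Each worker runs a different function on its own schedule, which is the contrast with SIMD: there, every lane would apply the same instruction to different data in lockstep.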

Because each processor is independently clocked, it can shut itself down to further save energy when not needed, said graduate student Brent Bohnenstiehl, who developed the principal architecture. Cores operate at an average maximum clock frequency of 1.78 GHz, and they transfer data directly to each other rather than using a pooled memory area that can become a bottleneck for data.
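The clock figure lines up with the peak computation rate of 1.78 trillion instructions per second quoted for the chip. A minimal check, assuming each core completes one instruction per clock cycle (an assumption made here for the arithmetic, not a figure stated in the article):

```python
CORES = 1_000
CLOCK_HZ = 1.78e9           # average maximum clock frequency from the article
INSTRUCTIONS_PER_CYCLE = 1  # assumption: one instruction per cycle per core


def peak_rate(cores, clock_hz, instructions_per_cycle):
    """Aggregate peak instruction rate across all cores, per second."""
    return cores * clock_hz * instructions_per_cycle


if __name__ == "__main__":
    peak = peak_rate(CORES, CLOCK_HZ, INSTRUCTIONS_PER_CYCLE)
    print(f"{peak / 1e12:.2f} trillion instructions per second")
```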

The chip is the most energy-efficient "many-core" processor ever reported, Baas said. For example, the 1,000 processors can execute 115 billion instructions per second while dissipating only 0.7 watts, low enough to be powered by a single AA battery. The KiloCore chip executes instructions more than 100 times more efficiently than a modern laptop processor.
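The two numbers in that example imply an energy cost per instruction, since power divided by instruction rate gives joules per instruction. The sketch below works this out from the article's figures only. The per-core average assumes the work is spread evenly over all 1,000 cores, which the article does not state.

```python
POWER_WATTS = 0.7               # dissipation quoted in the article
INSTRUCTIONS_PER_SECOND = 115e9  # rate quoted at that power level
CORES = 1_000


def energy_per_instruction_pj(power_watts, instructions_per_second):
    """Energy per instruction in picojoules (1 J = 1e12 pJ)."""
    return power_watts / instructions_per_second * 1e12


def average_rate_per_core(instructions_per_second, cores):
    """Average instructions per second per core, assuming an even spread."""
    return instructions_per_second / cores


if __name__ == "__main__":
    pj = energy_per_instruction_pj(POWER_WATTS, INSTRUCTIONS_PER_SECOND)
    per_core = average_rate_per_core(INSTRUCTIONS_PER_SECOND, CORES)
    print(f"about {pj:.2f} pJ per instruction")
    print(f"about {per_core / 1e6:.0f} million instructions per second per core")
```

This works out to roughly 6 picojoules per instruction. The per-core average of about 115 million instructions per second is well below the 1.78 GHz maximum clock, so the 0.7-watt figure describes a low-power operating point rather than peak throughput.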

Applications already developed for the chip include wireless coding/decoding, video processing, encryption, and others involving large amounts of parallel data such as scientific data applications and datacenter record processing.

The team has completed a compiler and automatic program mapping tools for use in programming the chip.