October 13, 2016 report

Google DeepMind project taking neural networks to a new level

(Tech Xplore) - A team of researchers at Google's DeepMind Technologies has been working on a way to increase the capabilities of computers by combining aspects of data processing and artificial intelligence, and has come up with what it calls a differentiable neural computer (DNC). In a paper published in the journal Nature, the researchers describe the work and where they believe it is headed. To make the work more accessible to the public, team members Alex Graves and Greg Wayne have posted an explanatory page on the DeepMind website.

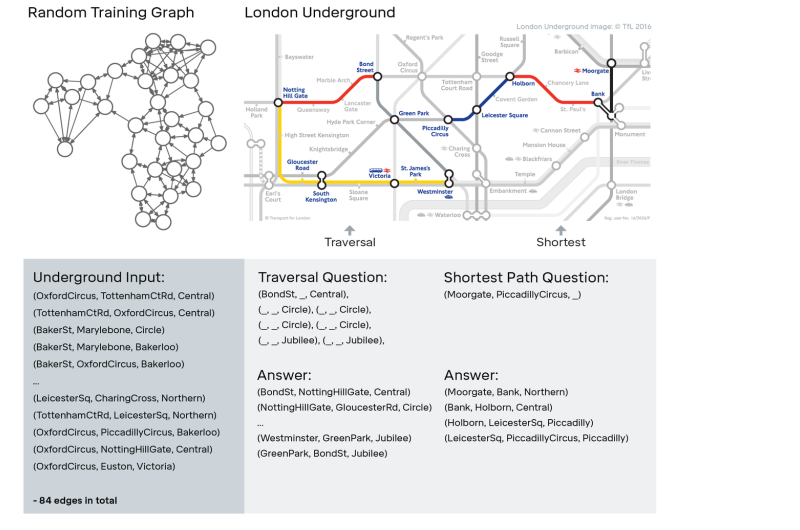

DeepMind is a Google-owned company that does research on artificial intelligence, including neural networks and, more recently, deep neural networks, which are computer systems that learn how to do things by seeing many examples. But, as Graves and Wayne note, such systems are typically limited in their ability to use and manipulate memory in useful ways, because they lack an external memory to read from and write to. The work on DNCs is meant to overcome that deficiency, allowing for the creation of computer systems that are able not only to learn, but also to remember what they have learned and then use that information for decision making when faced with a new task. The researchers highlight an example of how such a system might be of greater use to human operators: a DNC could be taught how to get from one point to another and then made to remember what it learned along the way. That would allow for the creation of a system that offers the best route to take on the subway, perhaps, or on a grander scale, advice on adding roads to a city.

By adding memory access to neural networks, the researchers are also looking to take advantage of another ability we humans take for granted: forming relationships between memories, particularly as they relate to time. One example would be a person walking by a candy store and the aroma immediately taking them back to their childhood, to Christmas, perhaps, and the emotions that surround the holiday season. A computer able to make the same sorts of connections could make similar leaps, jumping back to a sequence of connected learning events that could be useful in answering a question about a certain topic, such as what caused the Great Depression or how Google became so successful.

The research team has not yet revealed whether there are plans to put the systems it is developing to practical use. That seems likely, though, and any rollout might be gradual, showing up as better Google search results, for example.

More information: Alex Graves et al. Hybrid computing using a neural network with dynamic external memory, Nature (2016). DOI: 10.1038/nature20101

Abstract

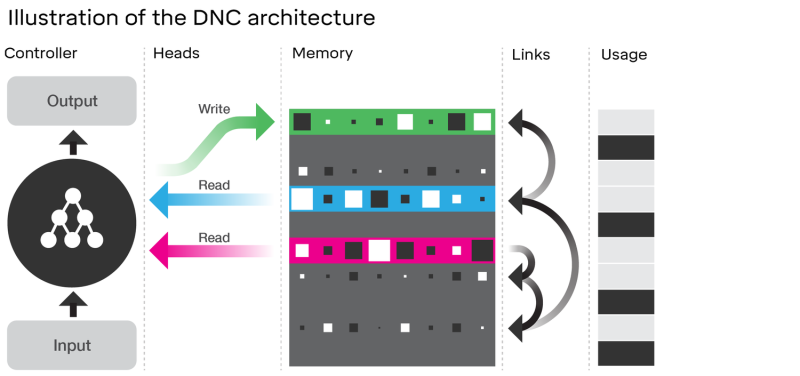

Artificial neural networks are remarkably adept at sensory processing, sequence learning and reinforcement learning, but are limited in their ability to represent variables and data structures and to store data over long timescales, owing to the lack of an external memory. Here we introduce a machine learning model called a differentiable neural computer (DNC), which consists of a neural network that can read from and write to an external memory matrix, analogous to the random-access memory in a conventional computer. Like a conventional computer, it can use its memory to represent and manipulate complex data structures, but, like a neural network, it can learn to do so from data. When trained with supervised learning, we demonstrate that a DNC can successfully answer synthetic questions designed to emulate reasoning and inference problems in natural language. We show that it can learn tasks such as finding the shortest path between specified points and inferring the missing links in randomly generated graphs, and then generalize these tasks to specific graphs such as transport networks and family trees. When trained with reinforcement learning, a DNC can complete a moving blocks puzzle in which changing goals are specified by sequences of symbols. Taken together, our results demonstrate that DNCs have the capacity to solve complex, structured tasks that are inaccessible to neural networks without external read–write memory.
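To make the abstract's "read from and write to an external memory matrix" more concrete, the following is a minimal sketch of one soft read and write step over a small memory, using only the Python standard library. It is an illustration under stated assumptions rather than DeepMind's implementation: the function names (`content_weights`, `write`, `read`) are hypothetical, the key, strength, erase and add values are fixed by hand where a DNC's controller network would emit them, and the paper's other addressing mechanisms are left out. Every memory row is touched in proportion to a weight, which is what keeps the operations differentiable and therefore learnable from data.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (small epsilon avoids division by zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)


def softmax(xs):
    """Numerically stable softmax: non-negative outputs that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def content_weights(memory, key, strength):
    """Soft attention over memory rows, favouring rows similar to the key.

    In a DNC the key and strength would come from the controller network;
    here they are plain arguments (an assumption made for illustration).
    """
    return softmax([strength * cosine(row, key) for row in memory])


def write(memory, weights, erase, add):
    """Erase-then-add update: each row changes in proportion to its weight."""
    return [
        [m * (1.0 - w * e) + w * a for m, e, a in zip(row, erase, add)]
        for row, w in zip(memory, weights)
    ]


def read(memory, weights):
    """Read vector: the weighted sum of all memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for row, w in zip(memory, weights)) for j in range(width)]


if __name__ == "__main__":
    memory = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    # Look up the row most similar to the key [0, 1] with a sharp focus.
    w = content_weights(memory, key=[0.0, 1.0], strength=20.0)
    # Overwrite that location (fully erase, then add a new vector).
    memory = write(memory, w, erase=[1.0, 1.0], add=[0.5, 0.5])
    print(read(memory, w))
```

A larger `strength` makes the lookup behave more like a conventional hard address, while a smaller one blends several rows together.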

© 2016 Tech Xplore