January 17, 2019 report

Using a machine learning technique to make a canine-like robot more agile and faster

A team of researchers with the Robotic Systems Lab in Switzerland and the Intelligent Systems Lab in Germany and the U.S. has found a way to apply machine learning to robotics that gives such machines greater abilities. In their paper, published in the journal Science Robotics, the group describes how they used this approach to make a canine-like robot more agile and faster.

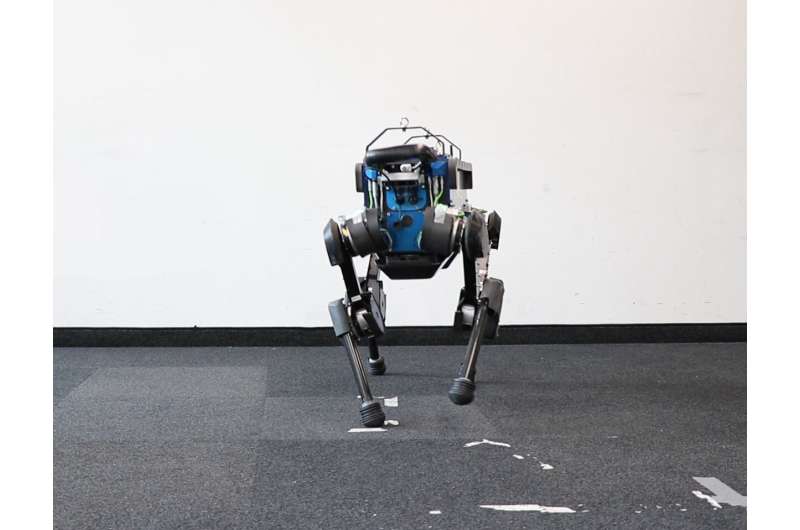

Machine learning has been in the news a lot lately, as such systems continue to approach human performance on a growing range of tasks. In this new effort, the researchers sought to bring some of those gains to a dog-like robot called ANYmal, an untethered machine about the size of a large dog that walks in ways closely resembling those of a real animal. ANYmal was originally developed by a team at the Robotic Systems Lab and has since been commercialized. Now the lab has partnered with the Intelligent Systems Lab to give the robot the ability to learn its skills through practice rather than through explicit programming.

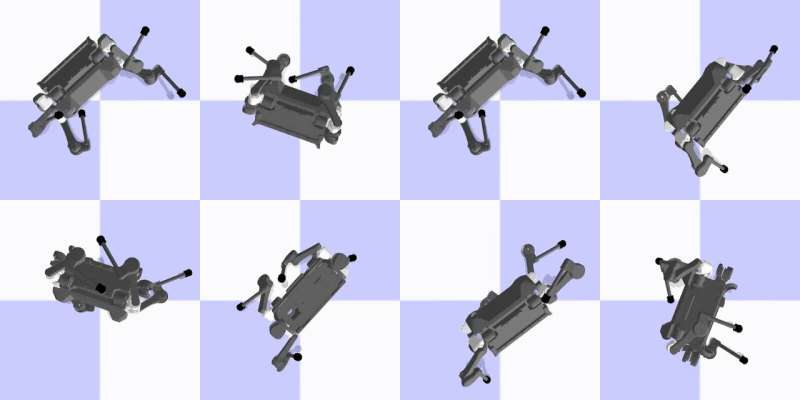

The kind of machine learning used here, known as reinforcement learning, works by giving a system a goal and a means of trying out different ways to reach it, with the system improving its behavior as it scores better against that goal. The trials are repeated over and over, sometimes thousands of times. Such practice is difficult with a physical robot, both because so many factors are involved (for example, everything that goes into keeping its balance) and because of the huge time investment. After working out a way to handle the first problem, the researchers found a way around the second: instead of having ANYmal struggle through its training regimen in the real world, they built a virtual version of the robot that could run on an ordinary desktop computer.
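To make the trial-and-error loop concrete, here is a minimal Python sketch under loudly stated assumptions. The "virtual robot" is a hypothetical one-dimensional point mass, not a model of ANYmal; the controller has just two gains; and learning is done by random-search hill climbing, a deliberately simple stand-in and not the researchers' method. The names `simulate` and `train` are illustrative only. Each iteration tries a slightly perturbed controller in simulation and keeps it only if it scores better against the goal.

```python
import random


def simulate(gains, steps=200, dt=0.02, target=1.0):
    """Hypothetical toy 'virtual robot': a unit point mass pushed by a controller.

    Returns a reward equal to the negative sum of squared distances to the
    target, so values closer to zero mean the controller did better.
    """
    kp, kd = gains
    x, v = 0.0, 0.0  # start at rest at the origin
    reward = 0.0
    for _ in range(steps):
        force = kp * (target - x) - kd * v  # simple feedback controller
        v += force * dt                     # semi-implicit Euler update
        x += v * dt
        err = x - target
        reward -= err * err                 # penalize distance from the goal
    return reward


def train(iterations=300, sigma=0.5, seed=0):
    """Random-search hill climbing (a stand-in, not the paper's algorithm):
    try a perturbed controller in simulation, keep it only if it scores better."""
    rng = random.Random(seed)
    best = [0.0, 0.0]  # untrained controller: applies no force at all
    best_reward = simulate(best)
    initial_reward = best_reward
    for _ in range(iterations):
        candidate = [g + rng.gauss(0.0, sigma) for g in best]
        reward = simulate(candidate)
        if reward > best_reward:  # keep improvements, discard everything else
            best, best_reward = candidate, reward
    return best, initial_reward, best_reward


if __name__ == "__main__":
    gains, before, after = train()
    print(f"reward before: {before:.1f}, after: {after:.1f}, gains: {gains}")
```

Because every trial runs in simulation, hundreds of them finish in well under a second; running the same number of trials on real hardware would take far longer, which is the trade-off the article describes.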

The researchers note that training the robot in its virtual form was roughly 1,000 times faster than training it in the real world would have been. They let the virtual dog train for up to 11 hours and then transferred what it had learned to the physical robot. Tests showed that the approach worked very well: the improved ANYmal was more agile, able to stay on its feet when a person tried to kick it over and to right itself when it did fall, and it ran about 25 percent faster.
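As a rough illustration, and assuming the approximately 1,000-fold speed-up held uniformly over the whole run, the longest 11-hour training session corresponds to well over a year of equivalent real-world practice:

$$
11\ \text{h} \times 1000 \approx 11{,}000\ \text{h} = \frac{11{,}000}{24}\ \text{days} \approx 458\ \text{days} \approx 1.25\ \text{years}
$$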

More information: Jemin Hwangbo et al. Learning agile and dynamic motor skills for legged robots, Science Robotics (2019). DOI: 10.1126/scirobotics.aau5872

© 2019 Science X Network