Inferring what we share by how we share

It's getting harder for people to tell real information from fake information online. But patterns in how information spreads over the internet (say, from user to user on a social media network) may indicate whether the information is authentic.

Those are the findings of a new study by researchers from Carnegie Mellon University's CyLab.

"The challenge with misinformation is that artificial intelligence has advanced to a level where Twitter bots and deepfakes are muddling humans' ability to decipher truth from fiction," says CyLab's Conrad Tucker, a professor of mechanical engineering and the principal investigator of the new study. "Rather than relying on humans to determine whether something is authentic or not, we wanted to see if the network on which information is spread could be used to determine its authenticity."

The study was published in last week's edition of Scientific Reports. Tucker's Ph.D. student, Sakthi Prakash, was the study's first author.

"This study has been a long time coming," says Tucker.

Real Twitter data may seem like the obvious choice for studying how real and fake information flows through a social network. But what the researchers needed was data capturing how people connected, shared and liked content over the entire span of a social media network's existence.

"If you look at Twitter right now, you'd be looking at an instant in time when people have already connected," says Tucker. "We wanted to look at the beginning—at the start of a network—at data that is difficult to attain if you're not the owner or creator of the platform."

Because of these constraints, the researchers built a Twitter-like social media network and asked study participants to use it for two days. The researchers populated the social network with 20 authentic and 20 fake videos collected from verified sources of each, but users were not told which videos were authentic. Then, over the course of 48 hours, 620 participants joined, began following each other, and shared and liked the videos on this simulated social media network.

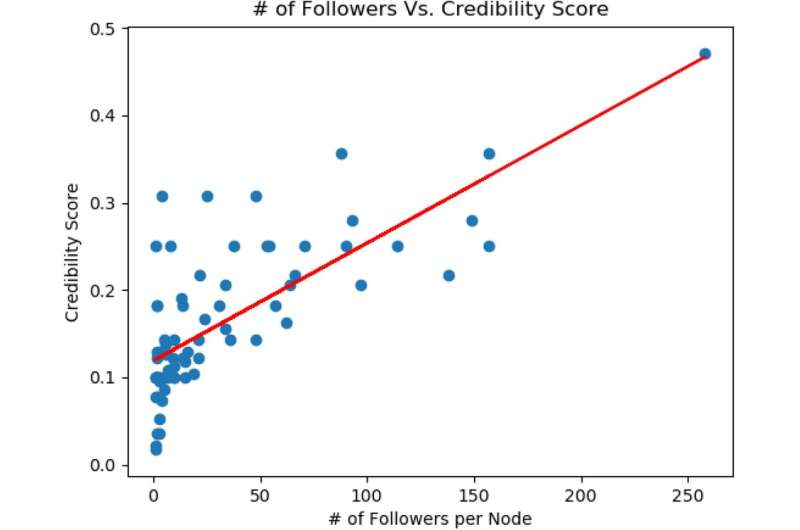

To encourage participation, study participants were incentivized: The user with the most followers at the end of the two-day study was awarded a $100 cash prize. However, all users were given a credibility score that everyone on the network could see. If users shared too much content that the researchers knew was fake, their credibility score would take a hit.

This simulated Twitter-like social media network is the first of its kind, Tucker says, and is open-source for other researchers to use for their own purposes.

"Our goal was to derive a relationship between user credibility, post likes and the probability of one user establishing a connection with another user," says Tucker.

It turns out that this relationship proves useful in inferring whether or not misinformation is being shared on a particular social media network, even if the content being shared is unknown. In other words, the patterns in which information is shared across a network (with whom it is shared, the number of likes it receives, and so on) can be used to infer the information's authenticity.
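To make the idea of such a relationship concrete, here is a toy sketch in Python. It is not the model from the study: the logistic form, the function name `connection_probability`, and every weight below are assumptions chosen purely for illustration. It only shows how a user's credibility score and post likes could, in principle, be mapped to a probability that another user connects with them.

```python
import math


def connection_probability(credibility, likes,
                           w_cred=2.0, w_likes=0.1, bias=-1.5):
    """Toy logistic model (hypothetical, not the study's method).

    credibility: score in [0, 1] (assumed scale)
    likes: number of likes on the user's posts
    w_cred, w_likes, bias: made-up illustrative weights
    """
    z = bias + w_cred * credibility + w_likes * likes
    return 1.0 / (1.0 + math.exp(-z))


# Two hypothetical users with the same number of likes
# but different credibility scores.
high_cred = connection_probability(credibility=0.9, likes=12)
low_cred = connection_probability(credibility=0.2, likes=12)

print(f"high-credibility user: {high_cred:.2f}")
print(f"low-credibility user:  {low_cred:.2f}")
```

Under these made-up weights, the user with the higher credibility score is more likely to gain a follower. In a real analysis, the form of the relationship and its parameters would have to be estimated from observed network data.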

"Now, rather than relying on humans themselves to identify misinformation, we may be able to rely on the network of humans, even if we don't know what they are sharing," says Tucker. "By looking at the way in which information is being shared, we can begin to infer what is being shared."

"As the world advances towards more cyber-physical systems, quantifying the veracity of information is going to be critical," says Tucker.

Given that current mechanical systems are becoming more connected, Tucker highlights the opportunity for mechanical engineering researchers to play a critical role in understanding how the systems that they create impact people, places, and policy.

"We had mechanical systems that evolved to electro-mechanical, then to systems that collect data," says Tucker. "Since people are part of this network, you have people who interact with these systems, and you have a social science component of that, and we need to understand these vulnerabilities as it relates to our mechanical and engineering systems."

More information: The study was funded by the Air Force Office of Scientific Research (AFOSR) (FA9550-18-1-0108). Sakthi Kumar Arul Prakash et al. Classification of unlabeled online media, Scientific Reports (2021). DOI: 10.1038/s41598-021-85608-5