AI-designed structured material creates super-resolution images using a low-resolution display

One of the promising technologies being developed for next-generation augmented/virtual reality (AR/VR) systems is holographic image displays that use coherent light illumination to emulate the 3D optical waves representing, for example, the objects within a scene. These holographic image displays can potentially simplify the optical setup of a wearable display, leading to compact and lightweight form factors.

An ideal AR/VR experience, however, requires relatively high-resolution images to be formed within a large field of view to match the resolution and the viewing angles of the human eye. The capabilities of holographic image projection systems are restricted mainly by the limited number of independently controllable pixels in existing image projectors and spatial light modulators.

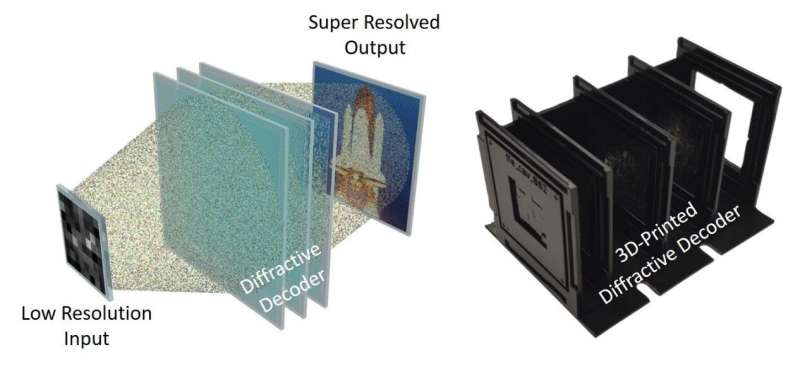

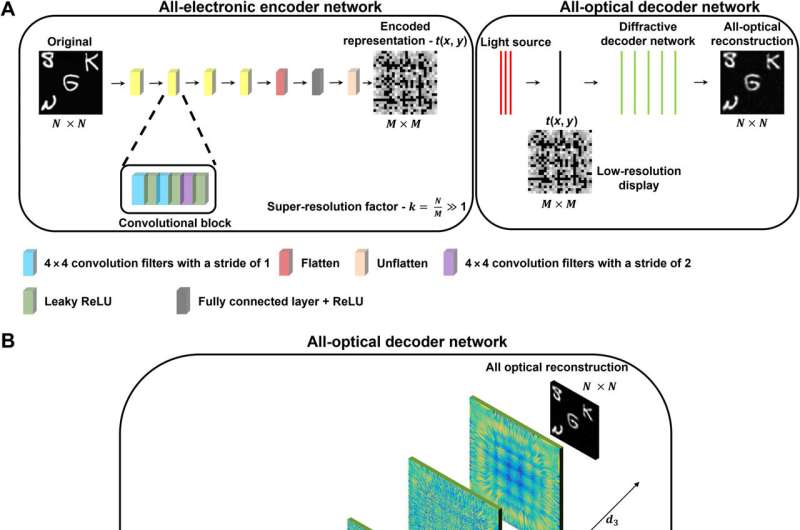

A recent study published in Science Advances reported a deep learning-designed transmissive material that can project super-resolved images using low-resolution image displays. In their paper titled "Super-resolution image display using diffractive decoders," UCLA researchers, led by Professor Aydogan Ozcan, used deep learning to spatially engineer transmissive diffractive layers at the wavelength scale, creating a material-based physical image decoder that achieves super-resolution image projection as light is transmitted through its layers.

Imagine a stream of high-resolution images waiting in the cloud or on your local PC to be sent to your head-mounted or wearable display. Instead of sending these high-resolution images to the wearable display directly, this new technology first runs them through a digital neural network (the encoder) that compresses them into lower-resolution images that look like barcodes and are not meaningful to the human eye.

However, this image compression is unlike other digital image compression methods because it is not decoded or decompressed in a computer. Instead, a transmissive material-based diffractive decoder decompresses these lower-resolution images all-optically and projects the desired high-resolution images as the light from the low-resolution display passes through the thin layers of the diffractive decoder. The image decompression from low to high resolution is therefore completed using only light diffraction through a passive, thin structured material, making the whole process extremely fast since the transparent diffractive decoder could be as thin as a stamp.
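To make the idea of decoding by diffraction more concrete, here is a minimal numerical sketch of light passing through a stack of thin structured layers. It is not the authors' model: it assumes phase-only layers, uses the standard angular spectrum method for free-space propagation, and fills the layers with random values instead of ones trained by deep learning. The function names, wavelength, pixel pitch, layer spacing and grid size are all hypothetical choices made for illustration.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a complex 2D field through free space over `distance`."""
    n_rows, n_cols = field.shape
    fx = np.fft.fftfreq(n_cols, d=pixel_pitch)  # spatial frequencies along x
    fy = np.fft.fftfreq(n_rows, d=pixel_pitch)  # spatial frequencies along y
    fxx, fyy = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent waves.
    kz_sq = (1.0 / wavelength) ** 2 - fxx ** 2 - fyy ** 2
    propagating = kz_sq > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, kz_sq, 0.0))
    # Free-space transfer function; evanescent components are discarded.
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def diffractive_decoder(field, phase_masks, wavelength, pixel_pitch, layer_gap):
    """Pass a field through passive phase-only layers; return output intensity."""
    for phase in phase_masks:
        field = angular_spectrum_propagate(field, wavelength, pixel_pitch, layer_gap)
        field = field * np.exp(1j * phase)  # a passive layer only delays the light
    field = angular_spectrum_propagate(field, wavelength, pixel_pitch, layer_gap)
    return np.abs(field) ** 2

# Hypothetical numbers, chosen only for illustration.
rng = np.random.default_rng(0)
wavelength = 0.75e-3   # metres (terahertz range)
pixel_pitch = 1.0e-3   # metres
layer_gap = 20e-3      # metres between successive planes

low_res = rng.random((16, 16))  # stand-in for an encoded, barcode-like pattern
# Random masks stand in for trained layers (an assumption of this sketch).
phase_masks = [rng.uniform(0, 2 * np.pi, (16, 16)) for _ in range(3)]

image = diffractive_decoder(low_res, phase_masks, wavelength, pixel_pitch, layer_gap)
```

Every step in this sketch is either free-space propagation or multiplication by a fixed phase pattern, which is why such a decoder needs no power beyond the illumination. The sketch keeps the input and output on the same sampling grid and uses untrained layers, so it illustrates the physics of a passive diffractive stack, not super-resolution itself.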

In addition to being ultra-fast, this diffractive image decoding scheme is also energy-efficient, since the image decompression relies on light diffraction through a passive material and consumes no power other than the illumination light.

The UCLA research team showed that these diffractive decoders designed by deep learning can achieve a super-resolution factor of ~4 in each lateral direction of an image, corresponding to a ~16-fold increase in the effective number of useful pixels in the projected images.
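The relationship between the lateral super-resolution factor and the pixel count is simple arithmetic: a factor of 4 along each of the two lateral directions gives 4 × 4 = 16 times as many effective pixels. The short sketch below works this through for a hypothetical 16 × 16 pixel display; the display size and function names are illustrative assumptions, not values from the study.

```python
def super_resolved_shape(display_shape, lateral_factor):
    """Effective (rows, cols) of the projected image for a given lateral factor."""
    rows, cols = display_shape
    return (round(rows * lateral_factor), round(cols * lateral_factor))

def pixel_gain(lateral_factor):
    """Increase in effective pixel count: the lateral factor applies to both axes."""
    return lateral_factor ** 2

display_shape = (16, 16)  # hypothetical low-resolution display
projected_shape = super_resolved_shape(display_shape, 4)
gain = pixel_gain(4)

print(projected_shape, gain)  # prints "(64, 64) 16"
```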

In addition to improving the resolution of the projected images, this diffractive image display also significantly reduces data transmission and storage requirements: the high-resolution images are encoded into compact optical representations with fewer pixels, so less information needs to be transmitted to a wearable display.

The research team experimentally demonstrated their diffractive super-resolution image display using 3D-printed diffractive decoders that operate in the terahertz part of the electromagnetic spectrum, which is frequently used in, for example, security image scanners at airports. The researchers also reported that the super-resolution capabilities of the presented diffractive decoders could be extended to project color images with red, green and blue wavelengths.

The principal investigator of the research, Professor Aydogan Ozcan, said, "This diffractive super-resolution image display design will inspire display solutions with enhanced resolution, potentially forming the building blocks of next-generation 3D display technology including, for example, head-mounted devices."

The other co-authors of this work include Professor Mona Jarrahi, Northrop Grumman Endowed Chair of Electrical and Computer Engineering at UCLA, and graduate students Cagatay Isil, Deniz Mengu, Yifan Zhao, Anika Tabassum, Jingxi Li and Yi Luo, all with UCLA.

More information: Çağatay Işıl et al, Super-resolution image display using diffractive decoders, Science Advances (2022). DOI: 10.1126/sciadv.add3433