January 18, 2023 feature


An imitation-relaxation reinforcement learning framework for four-legged robot locomotion

For legged robots to effectively explore their surroundings and complete missions, they need to be able to move both rapidly and reliably. In recent years, roboticists and computer scientists have created various models for the locomotion of legged robots, many of which are trained using reinforcement learning methods.

Effective locomotion for legged robots requires solving several distinct problems. The robots must maintain their balance, move as efficiently as possible, alternate their leg movements periodically to produce a particular gait, and follow commands.

While some approaches to legged robot locomotion have achieved promising results, many cannot consistently tackle all of these problems. Even when they do, they often struggle to reach high speeds, so the robots can only move slowly.

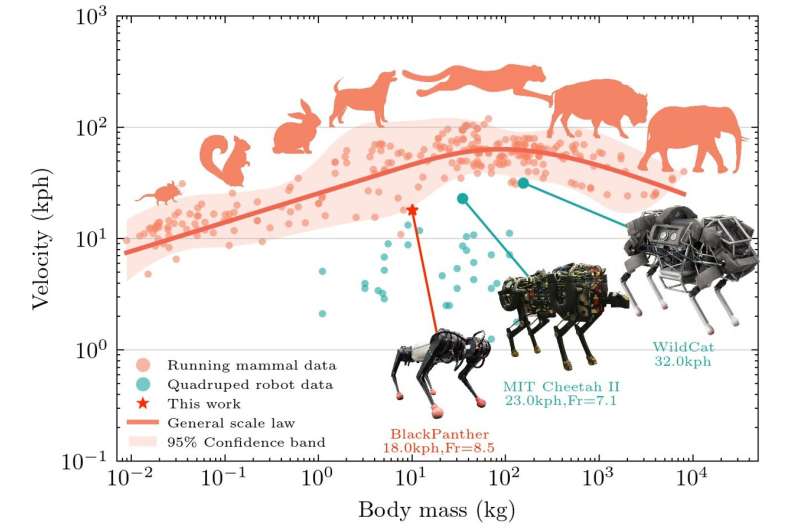

Researchers at Zhejiang University and the ZJU-Hangzhou Global Scientific and Technological Center have recently created a new framework that could allow four-legged robots to move efficiently and at high speeds. This framework, introduced in a paper published in Nature Machine Intelligence, is based on a training method known as imitation-relaxation reinforcement learning (IRRL).

"Allowing robots to catch up to bio-mobility is my dream research goal," Yongbin Jin, one of the researchers who carried out the study, told TechXplore. "In its implementation, our idea was inspired by the interdisciplinary communication between computer graphics, material science and mechanics. The characteristic hyperplane is inspired by the ternary phase diagram in materials science."

In contrast with conventional reinforcement learning methods, the approach proposed by Jin and his colleagues optimizes the different objectives of legged robot locomotion in stages. In addition, when assessing the robustness of their system, the researchers introduced the notion of "stochastic stability," a measure that they hoped would better reflect how a robot would perform in real-world environments, as opposed to in simulations.

"We try to understand the characteristics of different sub-reward functions, and then reshape the final reward function to avoid the influence of local extrema," Jin explained. "From another perspective, the effectiveness of this method lies in the easy-to-hard learning process. Motion imitation provides a good initial estimate for the optimal solution."
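To make the idea of an easy-to-hard, staged reward more concrete, the Python snippet below is a minimal sketch of one generic way to schedule it: the agent is first rewarded only for imitating a reference motion, and the weight on that imitation term is then gradually relaxed in favor of task sub-rewards such as velocity tracking and balance. This is not the reward formulation used by Jin and his colleagues, which is not described here; the function names, the linear schedule and the sub-reward names are all hypothetical.

```python
def imitation_weight(step: int, imitation_steps: int, relax_steps: int) -> float:
    """Hypothetical schedule: weight on the imitation reward.

    1.0 during the pure-imitation stage, then decays linearly to 0.0
    over `relax_steps` training steps (the "relaxation" stage).
    """
    if step < imitation_steps:
        return 1.0
    progress = (step - imitation_steps) / relax_steps
    return max(0.0, 1.0 - progress)


def staged_reward(r_imitation: float,
                  task_rewards: dict,
                  task_weights: dict,
                  step: int,
                  imitation_steps: int,
                  relax_steps: int) -> float:
    """Blend an imitation reward with a weighted sum of task sub-rewards.

    Early in training the imitation term dominates, giving the policy a
    good initial estimate; later the task terms take over.
    """
    w = imitation_weight(step, imitation_steps, relax_steps)
    r_task = sum(task_weights[name] * task_rewards[name] for name in task_weights)
    return w * r_imitation + (1.0 - w) * r_task


if __name__ == "__main__":
    # Hypothetical sub-rewards for a single time step.
    task_rewards = {"velocity": 2.0, "balance": 1.0}
    task_weights = {"velocity": 0.5, "balance": 0.5}
    for step in (0, 150, 300):
        print(step, staged_reward(1.0, task_rewards, task_weights, step, 100, 100))
```

A linear decay is only the simplest choice; any monotone schedule would express the same easy-to-hard progression.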

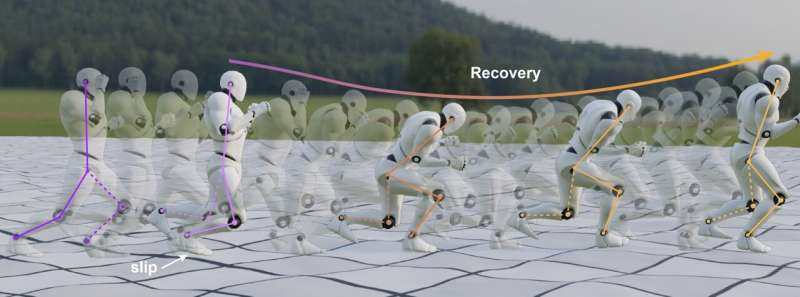

The researchers evaluated their approach in a series of tests, both in simulations of a four-legged robot and by running their stochastic stability analysis. They found that it allowed the four-legged robot, which resembles the renowned Mini Cheetah robot created by MIT, to run at a speed of 5.0 m/s without losing its balance.

"I think there are two main contributions of this work," Jin said. "The first is the proposed hyperplane method, which helps us to explore the nature of reward in the ultra-high-dimensional parameter space, thereby guiding the design of reward for RL-based controllers. The second is the quantitative stability evaluation method, which tries to bridge the sim-to-real gap."

The framework introduced by this team of researchers could soon be implemented and evaluated in different real-world settings, using various physical legged robots. Ultimately, it could help to improve the locomotion of both existing and newly created legged robots, allowing them to move faster, complete missions in less time, and reach target locations more efficiently.

"So far, the entropy-based stability metric is an a posteriori method," Jin added. "In the future, we will directly introduce stability indicators in the process of controller learning and strive to catch up with the agility of natural creatures."

More information: Yongbin Jin et al., High-speed quadrupedal locomotion by imitation-relaxation reinforcement learning, Nature Machine Intelligence (2022). DOI: 10.1038/s42256-022-00576-3.

© 2023 Science X Network