June 18, 2020 feature

Teaching humanoid robots different locomotion behaviors using human demonstrations

In recent years, many research teams worldwide have been developing and evaluating techniques to enable different locomotion styles in legged robots. One way of training robots to walk like humans or animals is by having them analyze and emulate real-world demonstrations. This approach is known as imitation learning.

Researchers at the University of Edinburgh in Scotland have recently devised a framework for training humanoid robots to walk like humans using human demonstrations. This new framework, presented in a paper pre-published on arXiv, combines imitation learning and deep reinforcement learning techniques with theories of robotic control, in order to achieve natural and dynamic locomotion in humanoid robots.

"The key question we set out to investigate was how to incorporate (1) useful human knowledge in robot locomotion and (2) human motion capture data for imitation into [the] deep reinforcement learning paradigm to advance the autonomous capabilities of legged robots more efficiently," Chuanyu Yang, one of the researchers who carried out the study, told TechXplore. "We proposed two methods of introducing human prior knowledge into a DRL framework."

The framework devised by Yang and his colleagues is based on a unique reward design that uses motion capture data of humans walking as training references. In addition, it utilizes two specialized hierarchical neural architectures, namely a phase-functioned neural network (PFNN) and a mode-adaptive neural network (MANN).
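For readers unfamiliar with the two architectures, the sketch below illustrates the core mechanism each is known for, as described by Holden et al. (PFNN) and Zhang et al. (MANN). It is not how Yang's team wired them into their controller. A PFNN computes its network weights as a cyclic Catmull-Rom spline over four control-point weight sets, indexed by the gait phase; a MANN instead blends several "expert" weight sets using softmax coefficients produced by a gating network. The function names, the use of plain Python lists, and passing the gating logits in directly (rather than computing them with a gating network) are simplifying assumptions for illustration.

```python
import math
from typing import List, Sequence

Vector = List[float]


def pfnn_weights(phase: float, control_points: Sequence[Vector]) -> Vector:
    """Phase function in the style of Holden et al.'s PFNN (illustrative sketch).

    Blends four cyclic control-point weight vectors with a cubic Catmull-Rom
    spline, so the resulting weights vary smoothly and periodically with phase.
    """
    if len(control_points) != 4:
        raise ValueError("PFNN uses exactly four control points")
    # Map phase in [0, 2*pi) onto the spline parameter t in [0, 4).
    t = 4.0 * (phase % (2.0 * math.pi)) / (2.0 * math.pi)
    base = math.floor(t)
    w = t - base  # fractional position between neighbouring control points
    # The four neighbouring control points, wrapping around cyclically.
    y0, y1, y2, y3 = (control_points[(base + n - 1) % 4] for n in range(4))
    return [
        a1
        + w * (0.5 * a2 - 0.5 * a0)
        + w ** 2 * (a0 - 2.5 * a1 + 2.0 * a2 - 0.5 * a3)
        + w ** 3 * (1.5 * a1 - 1.5 * a2 + 0.5 * a3 - 0.5 * a0)
        for a0, a1, a2, a3 in zip(y0, y1, y2, y3)
    ]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mann_weights(gating_logits: Sequence[float],
                 expert_weights: Sequence[Vector]) -> Vector:
    """Expert blending in the style of Zhang et al.'s MANN (illustrative sketch).

    A gating network (not modelled here; its logits are passed in) decides how
    much each expert's weights contribute to the motion network at this step.
    """
    if len(gating_logits) != len(expert_weights):
        raise ValueError("need one gating logit per expert")
    coeffs = softmax(gating_logits)
    return [
        sum(c * expert[j] for c, expert in zip(coeffs, expert_weights))
        for j in range(len(expert_weights[0]))
    ]


if __name__ == "__main__":
    cps = [[1.0], [2.0], [3.0], [4.0]]
    print(pfnn_weights(0.0, cps))                    # [1.0]
    print(mann_weights([0.0, 0.0], [[1.0], [3.0]]))  # [2.0]
```

The design difference is visible in the signatures: the PFNN's weights depend only on a single hand-defined phase variable, which suits strongly periodic motion such as walking, while the MANN lets a learned gate choose the blend, which suits motions without one obvious cycle.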

"The key to replicating human-like locomotion styles is to introduce human walking data as an expert demonstration for the learning agent to imitate," Yang explained. "Reward design is an important aspect of reinforcement learning, as it governs the behavior of the agent."

The reward design used by Yang and his colleagues consists of a task term and an imitation term. The former offers the guidance a humanoid robot needs to achieve high-level locomotion, while the latter encourages more human-like and natural walking patterns. This unique design is aligned with key theoretical concepts behind other conventional humanoid control approaches.
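The article does not spell out the exact reward terms, so the following is only a minimal sketch of the general idea, assuming a DeepMimic-style formulation in which each term maps a tracking error through an exponential kernel and the two terms are combined as a weighted sum. The quantities tracked (base velocity for the task term, joint angles for the imitation term), the kernel scales and the 0.5/0.5 weighting are all hypothetical choices, not values from Yang et al.

```python
import math
from typing import Sequence


def exp_kernel(squared_error: float, scale: float) -> float:
    """Map a squared tracking error to a reward in (0, 1]; 1 means a perfect match."""
    return math.exp(-scale * squared_error)


def squared_error(actual: Sequence[float], target: Sequence[float]) -> float:
    """Sum of squared element-wise differences between two equal-length vectors."""
    if len(actual) != len(target):
        raise ValueError("vectors must have the same length")
    return sum((a - t) ** 2 for a, t in zip(actual, target))


def task_reward(base_velocity: Sequence[float], target_velocity: Sequence[float],
                scale: float = 2.0) -> float:
    """Task term (hypothetical): reward tracking a commanded walking velocity."""
    return exp_kernel(squared_error(base_velocity, target_velocity), scale)


def imitation_reward(joint_angles: Sequence[float], reference_angles: Sequence[float],
                     scale: float = 5.0) -> float:
    """Imitation term (hypothetical): reward matching the motion capture pose for this frame."""
    return exp_kernel(squared_error(joint_angles, reference_angles), scale)


def total_reward(base_velocity: Sequence[float], target_velocity: Sequence[float],
                 joint_angles: Sequence[float], reference_angles: Sequence[float],
                 w_task: float = 0.5, w_imitation: float = 0.5) -> float:
    """Weighted sum of the task and imitation terms (weights are assumptions)."""
    return (w_task * task_reward(base_velocity, target_velocity)
            + w_imitation * imitation_reward(joint_angles, reference_angles))


if __name__ == "__main__":
    ref_pose = [0.1, -0.3, 0.5]  # made-up reference joint angles (radians)
    print(total_reward([1.0, 0.0], [1.0, 0.0], ref_pose, ref_pose))  # 1.0
    print(total_reward([1.0, 0.0], [1.0, 0.0], [0.0, 0.0, 0.0], ref_pose))
```

Because each kernel is bounded in (0, 1], neither term can dominate simply by growing large; the weights then set the trade-off between reaching the commanded motion and moving in a human-like way.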

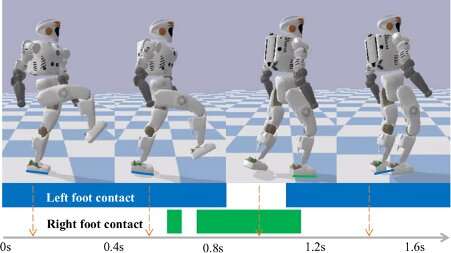

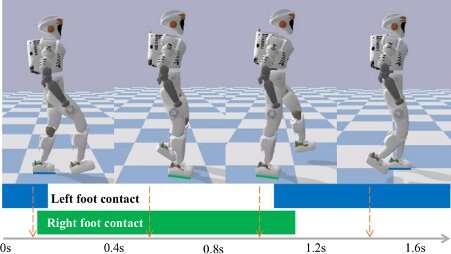

The researchers evaluated their imitation learning framework in a series of experiments conducted in simulated environments. They found that it was able to produce robust locomotion behaviors in a variety of scenarios, even in the presence of disturbances or undesirable factors, such as terrain irregularities or external pushes.

"By leveraging human walking motions as an expert demonstration for the artificial agent to imitate, we are able to speed up learning and improve overall task performance," Yang said. "Human demonstration knowledge allowed us to design our learning framework more meaningfully, which proves to be beneficial for motor skills and motor control in general."

The findings gathered by this team of researchers suggest that expert demonstrations, in this case motion capture recordings of humans walking, can significantly enhance deep reinforcement learning techniques for training robots on different locomotion styles. Ultimately, the new framework they proposed could be used to train humanoid robots to walk more like humans, faster and more efficiently, while also achieving more natural and human-like behaviors.

So far, Yang and his colleagues have only evaluated their framework in simulations, so they now plan to investigate ways of transferring it from simulated environments to real-world settings. They would eventually like to implement it on a real humanoid robot to further assess its effectiveness and usability.

"In our future work, we also plan to extend the learning framework to imitate a more diverse and complex set of human motions, such as general motor skills across locomotion, manipulation and grasping," Yang said. "We also plan to research efficient simulation-to-reality policy transfer to enable fast deployment of the learned policies that adapt to real robots."

More information: Learning natural locomotion behaviors for humanoid robots using human knowledge. arXiv:2005.10195 [cs.RO]. arxiv.org/abs/2005.10195

Chuanyu Yang et al. Learning Natural Locomotion Behaviors for Humanoid Robots Using Human Bias, IEEE Robotics and Automation Letters (2020). DOI: 10.1109/LRA.2020.2972879

Daniel Holden et al. Phase-functioned neural networks for character control, ACM Transactions on Graphics (2017). DOI: 10.1145/3072959.3073663

He Zhang et al. Mode-adaptive neural networks for quadruped motion control, ACM Transactions on Graphics (2018). DOI: 10.1145/3197517.3201366

© 2020 Science X Network