June 27, 2023 report

Rendering three-dimensional images from eye reflections with NeRF

Vision depends on light entering the eye through the transparent cornea, then passing through the pupil and the lens. When the light reaches the retina, photoreceptors produce signals that travel via the optic nerve to the brain, where an image is formed. Some of the light arriving at the eye, however, is reflected back into the world by the highly reflective thin film of fluid covering the cornea.

Researchers at the University of Maryland were able to capture this reflected light and extract a three-dimensional model of the surroundings. In a paper on the preprint server arXiv, titled "Seeing the World through Your Eyes," the team describes how they captured the eye reflections and turned them into coherent 3D renderings by training a neural rendering model known as a NeRF.

A neural radiance field (NeRF) is a neural network that learns a continuous representation of a complex 3D scene from multiple 2D images and can then render novel views of that scene. Given a few dozen still images taken from different angles, a NeRF can typically produce a 3D representation detailed enough that a rendered camera path moving around an object or through a space is almost indistinguishable from video.
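To make the rendering step more concrete, here is a minimal sketch of the standard volume-rendering rule that NeRF-style methods use to turn per-sample predictions along a camera ray into a single pixel color: each sample contributes its color weighted by its opacity, 1 − exp(−σδ), times the fraction of light that has not yet been absorbed in front of it. The function name `render_ray` and its inputs are illustrative only; in a real NeRF the densities and colors come from the trained network rather than from hand-written lists.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray into a single RGB color.

    sigmas: volume density at each sample (what a trained NeRF would predict)
    colors: (r, g, b) color emitted at each sample
    deltas: distance between consecutive samples along the ray
    """
    transmittance = 1.0  # fraction of light not yet absorbed before this sample
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha  # this sample's share of the final color
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # light left over for samples further away
    return tuple(rgb)


# A ray through empty space that then hits a dense red surface renders as red.
print(render_ray([0.0, 1000.0], [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)], [1.0, 1.0]))
```

Because every operation here is differentiable, a NeRF can compare rendered pixels with the captured images and adjust the network that predicts the densities and colors.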

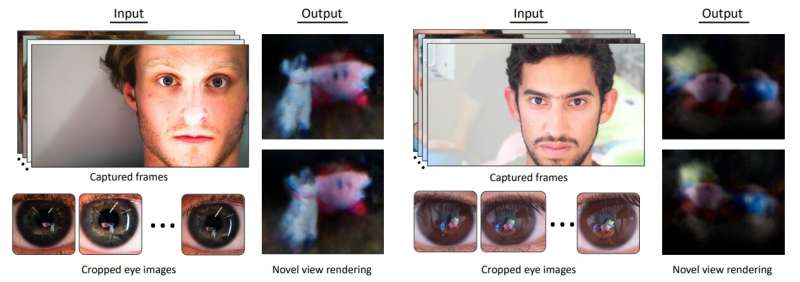

In the current effort, the Maryland team starts with multiple images from a high-resolution camera in a fixed position, focused on a person who moves around while looking toward the camera, framed much as a passport or driver's license photo might be. Zooming in on the person's eye reveals a mirror image of the scene in front of them, in which objects in the area are identifiable.

Each image mixes the reflection with features of the eye itself, most notably the complex texture of the iris, and the reflections, while recognizable, are low-resolution. To separate the iris from the reflection, the team performed texture decomposition, training a 2D texture map that learns the iris texture so that it can be removed.
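The team trained a 2D texture map to learn the iris texture; the sketch below swaps in a much simpler, non-learned stand-in to illustrate the underlying idea, and it is not the paper's method. It assumes the eye images are already aligned so that each pixel index always samples the same point on the iris, and that each observed pixel is the sum of a static iris value and a reflection that changes as the person moves. Under those assumptions, the per-pixel median across frames estimates the static iris layer, and subtracting it leaves the reflection. The function name `split_iris_and_reflection` is hypothetical.

```python
from statistics import median


def split_iris_and_reflection(frames):
    """Split aligned eye images into a static iris layer and per-frame reflections.

    frames: list of equally sized lists of pixel intensities, one list per image,
    assumed aligned so that index i always samples the same point on the iris.
    Assumes observed = iris + reflection, with only the reflection varying.
    """
    n_pixels = len(frames[0])
    # Static layer: per-pixel median over all frames (robust to a moving reflection).
    iris = [median(frame[i] for frame in frames) for i in range(n_pixels)]
    # Whatever remains in each frame is attributed to the reflection.
    reflections = [[frame[i] - iris[i] for i in range(n_pixels)] for frame in frames]
    return iris, reflections


iris, reflections = split_iris_and_reflection([[10, 20], [10, 25], [12, 20]])
print(iris)         # [10, 20]
print(reflections)  # [[0, 0], [0, 5], [2, 0]]
```

The median is used rather than the mean because a bright reflection passing over a pixel in only a few frames shifts the median far less than it shifts the mean.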

Because corneal geometry is approximately the same across adults, the team could estimate the position and orientation of each eye in every image, and therefore where it was looking. This also determines the camera's angle relative to the eye: each pixel of the reflection can be mapped onto the curved corneal surface and traced to a reflected viewing direction, which the NeRF later uses to reconstruct the 3D scene. Despite small inaccuracies in the estimated cornea location and geometry, the method still reconstructed the scene.
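The geometric step can be illustrated with a sketch that models the cornea as a sphere, traces a camera ray to its surface, and mirrors the ray about the surface normal using r = d − 2(d·n)n. The spherical model and the 7.8 mm radius (a commonly cited average corneal radius of curvature) are assumptions for illustration, not values taken from the paper, and the names `reflect_off_cornea` and `CORNEA_RADIUS_MM` are hypothetical. The reflected ray points out into the scene, which is what gives each eye image its own viewpoint.

```python
import math

CORNEA_RADIUS_MM = 7.8  # assumed average corneal radius of curvature (illustrative)


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect_off_cornea(origin, direction, center, radius=CORNEA_RADIUS_MM):
    """Trace a camera ray to a spherical cornea and mirror it about the normal.

    origin, direction: camera ray (direction must be a unit vector), in mm.
    center: estimated center of the corneal sphere, in mm.
    Returns (hit_point, reflected_direction), or None if the ray misses.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c  # discriminant of the ray-sphere quadratic
    if disc < 0:
        return None  # ray passes beside the cornea
    t = -b - math.sqrt(disc)  # nearest intersection along the ray
    if t < 0:
        return None  # intersection is behind the camera
    hit = add(origin, scale(direction, t))
    normal = scale(sub(hit, center), 1.0 / radius)  # outward unit normal
    # Mirror reflection: r = d - 2 (d . n) n
    reflected = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
    return hit, reflected


# A ray aimed straight at the apex of the cornea bounces straight back.
print(reflect_off_cornea((0.0, 0.0, 100.0), (0.0, 0.0, -1.0), (0.0, 0.0, 0.0)))
```

Repeating this for every pixel that falls on the cornea, across all frames, would yield the set of reflected rays a NeRF could be trained on; errors in the estimated sphere center shift every one of those rays, which is why the cornea pose estimates matter.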

Area lights placed at the person's sides (out of frame) illuminated the object of interest in front of them. The person was asked to move around within the camera's field of view while multiple images were captured, so that the reflections in their eyes showed the scene from different positions.

When the method was tested on a real human eye, the reconstruction had very modest resolution, but it did recover the scene as a 3D rendering with depth.

In a more idealized synthetic test, using a simulated eye in front of a digital scene, the reconstruction was clearer and the 3D mapping had higher resolution.

A third test applied the method to eye reflections captured from music videos by Miley Cyrus and Lady Gaga, in an attempt to reconstruct what the singers were looking at while filming.

The reconstruction from Miley Cyrus's eye appears to show an LED grid light. That would be fitting: she is shedding a tear in the video, and staring into a bright light may have helped achieve the desired effect. In Lady Gaga's eye, there is what could be interpreted as a camera on a tripod, but the image is unclear.

In both music videos tested, the star is likely the only well-lit thing in the studio, since the lights, camera and action are all focused on them. In more mundane situations, such as a Zoom call or a series of selfie posts, the lighting might be better suited to gathering information about the surroundings.

More information: Hadi Alzayer et al, Seeing the World through Your Eyes, arXiv (2023). DOI: 10.48550/arxiv.2306.09348

Project page: world-from-eyes.github.io/

© 2023 Science X Network