July 7, 2023 feature


A new neural machine code to program reservoir computers

Reservoir computing is a promising computational framework based on recurrent neural networks (RNNs). It maps input data onto a high-dimensional computational space using a network whose internal (recurrent) connections are kept fixed, while only the weights that read out the result are trained. This framework could help to improve the performance of machine learning algorithms, while also reducing the amount of data required to train them adequately.

RNNs use recurrent connections between their processing units to retain information about past inputs, which allows them to process sequential data and make accurate predictions. While RNNs have been found to perform well on numerous tasks, optimizing their performance by identifying the parameters most relevant to a given task can be challenging and time-consuming.
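
The conventional reservoir computing setup described above can be illustrated with a minimal echo state network in Python with NumPy. This is a generic textbook-style sketch, not Kim and Bassett's method. The recurrent weights `A` and input weights `B` are random and never updated, and only the linear readout `W_out` is fit, using ridge regression. The reservoir size, the spectral-radius scaling of 0.9, the ridge penalty and the sine-prediction task are all arbitrary assumptions chosen for illustration.

```python
import numpy as np

def run_reservoir(A, B, u):
    """Drive a fixed tanh reservoir with a scalar input sequence u; return states, shape (T, N)."""
    r = np.zeros(A.shape[0])
    states = np.empty((len(u), A.shape[0]))
    for k, u_k in enumerate(u):
        r = np.tanh(A @ r + B * u_k)   # recurrent update; A and B are never trained
        states[k] = r
    return states

rng = np.random.default_rng(0)
N = 200                                   # reservoir size (arbitrary assumption)
A = rng.normal(size=(N, N))
A *= 0.9 / np.max(np.abs(np.linalg.eigvals(A)))   # rescale to spectral radius 0.9 (common heuristic)
B = rng.uniform(-0.5, 0.5, size=N)

# Toy task (assumption): predict the next sample of a sine wave.
u = np.sin(0.1 * np.arange(2000))
X = run_reservoir(A, B, u[:-1])           # row k: state after seeing u[0..k]
y = u[1:]                                 # target for row k: u[k+1]

washout, split = 100, 1500                # discard the initial transient; train/test split
X_tr, y_tr = X[washout:split], y[washout:split]
ridge = 1e-6
# Only the readout is learned: W_out = (X^T X + ridge * I)^{-1} X^T y
W_out = np.linalg.solve(X_tr.T @ X_tr + ridge * np.eye(N), X_tr.T @ y_tr)

pred = X[split:] @ W_out
rmse = np.sqrt(np.mean((pred - y[split:]) ** 2))
print(f"test RMSE: {rmse:.2e}")
```

Because only `W_out` is learned, "training" reduces to a single linear solve. The recurrent weights stay exactly as initialized.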

Jason Kim and Dani S. Bassett, two researchers at the University of Pennsylvania, recently introduced an alternative approach to designing and programming RNN-based reservoir computers, inspired by how programming languages work on computer hardware. This approach, published in Nature Machine Intelligence, can identify the appropriate parameters for a given network, programming its computations to optimize its performance on target problems.

"Whether it's calculating a tip or simulating multiple moves in a game of chess, we have always been interested in how the brain represents and processes information," Kim told Tech Xplore. "We were inspired by the success of recurrent neural networks (RNNs) for both modeling brain dynamics and learning complex computations. Drawing on that inspiration, we asked a simple question: what if we could program RNNs the same way that we do computers? Prior work in control theory, dynamical systems, and physics told us that it was not an impossible dream."

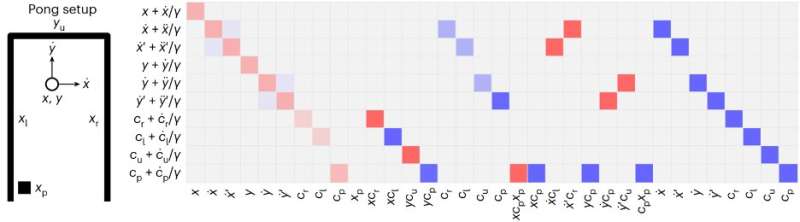

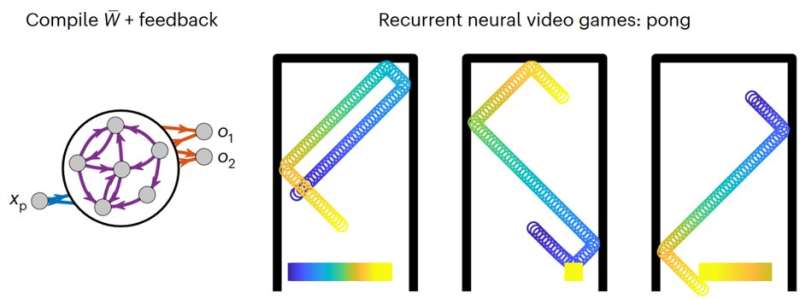

Kim and Bassett obtained their neural machine code by decompiling the internal representations and dynamics of RNNs, and they use it to guide how the networks process input data. Their approach resembles the process of compiling an algorithm on computer hardware, which entails specifying where and when individual transistors need to be switched on and off.

"In an RNN, these operations are specified simultaneously in the weights distributed across the network, and the neurons both run the operations in parallel and store the memory," Kim explained. "We use mathematics to define the set of operations (connection weights) that will run a desired algorithm (e.g., solving an equation, simulating a video game), and to extract the algorithm that is being run on an existing set of weights. The unique advantages of our approach are that it requires no data or sampling, and that it defines not just one connectivity, but a space of connectivity patterns that run the desired algorithm."
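
The article does not spell out the mathematics. The following is a heavily simplified sketch of the idea of "compiling" and "decompiling" weights without data. It is loosely in the spirit of the quote and is not the published algorithm. Its assumptions are a discrete-time tanh network driven by a constant scalar input `x` and a second-order Taylor expansion of the network's steady state around `x = 0`. All names (`steady_state`, `R`, `O`, `W`) are hypothetical. The expansion gives a matrix `R` such that the state is approximately `R @ [1, x, x^2]`. Choosing a readout `W` with `W R = O` "compiles" a target polynomial `O`. Computing `W R` for an existing readout "decompiles" what it computes. The published framework is more general; for example, Kim describes programs such as equation solving and simulating a video game, which this constant-input example does not attempt.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 50                                    # network size (arbitrary assumption)
A = rng.normal(size=(N, N))
A *= 0.5 / np.linalg.norm(A, 2)           # spectral norm 0.5, so the fixed-point map is a contraction
B = rng.normal(size=N)                    # input weights for a scalar input x
d = rng.normal(size=N)                    # bias

def steady_state(x, iters=200):
    """Fixed point of r = tanh(A r + B x + d) for a constant scalar input x."""
    r = np.zeros(N)
    for _ in range(iters):
        r = np.tanh(A @ r + B * x + d)
    return r

# Expand r(x) around x = 0 to second order using only the weights (no training data).
r0 = steady_state(0.0)
g1 = 1.0 - r0**2                          # tanh' at the operating point
g2 = -2.0 * r0 * g1                       # tanh'' at the operating point
M = np.linalg.inv(np.eye(N) - g1[:, None] * A)   # (I - G A)^{-1} with G = diag(g1)
r1 = M @ (g1 * B)                         # dr/dx
r2 = M @ (g2 * (A @ r1 + B) ** 2)         # d^2 r / dx^2
R = np.column_stack([r0, r1, r2 / 2])     # r(x) ~= R @ [1, x, x^2]

def basis(x):
    return np.array([1.0, x, x**2])

# "Compile": pick a readout W so that W @ r(x) ~= 0.5 + 3x (hypothetical target).
O = np.array([0.5, 3.0, 0.0])             # target coefficients on the basis [1, x, x^2]
R_pinv = np.linalg.pinv(R)
W = O @ R_pinv                            # minimum-norm solution of W R = O

# Any W + v (I - R R^+) also satisfies W R = O, giving a whole family of readouts.
v = rng.normal(size=N)
W_alt = W + v @ (np.eye(N) - R @ R_pinv)

# "Decompile": read off the polynomial an arbitrary readout computes near x = 0.
W_rand = rng.normal(size=N)
O_rand = W_rand @ R

xs = np.linspace(-0.01, 0.01, 5)          # the expansion is only valid for small inputs
err_compile = max(abs(W @ steady_state(x) - O @ basis(x)) for x in xs)
err_alt = max(abs(W_alt @ steady_state(x) - O @ basis(x)) for x in xs)
err_decompile = max(abs(W_rand @ steady_state(x) - O_rand @ basis(x)) for x in xs)
print(err_compile, err_alt, err_decompile)
```

Here `W` is only one member of a family of readouts that run the same computation. This is loosely analogous to Kim's remark that the approach defines a space of connectivity patterns rather than a single one. In this toy version, however, the family consists of readouts rather than recurrent connectivity.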

The researchers demonstrated the advantages of their framework by using it to develop RNNs for various applications, including virtual machines, logic gates and an AI-powered ping-pong video game. All of these programmed networks performed well without requiring trial-and-error adjustments to their parameters.

"One notable contribution of our work is a paradigm shift in how we understand and study RNNs from data processing tools to fully-fledged computers," Kim said. "This shift means that we can examine a trained RNN and know what problem it is solving, and we can design RNNs to perform tasks without training data or backpropagation. Practically, we can initialize our networks with a hypothesis-driven algorithm rather than random weights or a pre-trained RNN, and we can directly extract the learned model from the RNN."

Other teams could soon use the programming framework and neural machine code introduced by Kim and Bassett to design better-performing RNNs and adjust their parameters more easily. Kim and Bassett ultimately hope to use their framework to create fully fledged software that runs on neuromorphic hardware. In future studies, they also plan to devise an approach for extracting the algorithms learned by trained reservoir computers.

"While neural networks are exceptional at processing complex and high-dimensional data, these networks tend to cost a lot of energy to run, and understanding what they have learned is exceptionally challenging," Kim said. "Our work provides a steppingstone for directly decompiling and translating the trained weights into an explicit algorithm that can be run much more efficiently without a need for the RNN, and further scrutinized for scientific understanding and performance."

Bassett's research group at the University of Pennsylvania is also using machine learning approaches, particularly RNNs, to reproduce human mental processes and abilities. The neural machine code the team recently created could support these efforts.

"A second exciting research direction is to design RNNs to perform tasks characteristic of human cognitive function," added Dani S. Bassett, the professor who supervised the study. "Using theories, models, or data-derived definitions of cognitive processes, we envision designing RNNs to engage in attention, proprioception, and curiosity. In doing so, we are eager to understand the connectivity profiles that support such distinct cognitive processes."

More information: Jason Z. Kim et al, A neural machine code and programming framework for the reservoir computer, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00668-8

© 2023 Science X Network