AI models lack transparency: Research

Artificial intelligence models lack transparency, according to a study published Wednesday that aims to guide policymakers in regulating the rapidly growing technology.

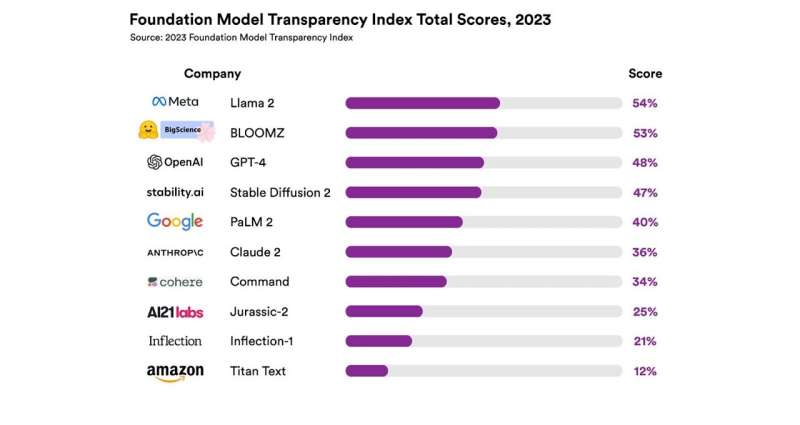

Stanford University researchers devised a "Foundation Model Transparency Index" that ranks 10 major AI firms.

The best score—54 percent—was given to Llama 2, the AI model launched by Facebook and Instagram owner Meta in July.

GPT-4, the flagship model of Microsoft-backed firm OpenAI, which created the renowned chatbot ChatGPT, was ranked third with a score of 48 percent.

Google's PaLM 2 ranked fifth at 40 percent, just above Claude 2 of Amazon-backed firm Anthropic at 36 percent.

Rishi Bommasani, a researcher at Stanford's Center for Research on Foundation Models, said companies should strive for a score of between 80 and 100 percent.

The researchers said "less transparency makes it harder" for "policymakers to design meaningful policies to rein in this powerful technology."

It also makes it harder for other businesses to know if they can rely on the technology for their own applications, for academics to do research and for consumers to understand the models' limitations, they said.

"If you don't have transparency, regulators can't even pose the right questions, let alone take action in these areas," Bommasani said.

The emergence of AI has generated both excitement about its technological promise and concerns about its potential impact on society.

The Stanford study said no company provides information about how many users depend on its model or about the geographic locations where the model is used.

Most AI companies do not disclose how much copyrighted material is used in their models, the researchers said.

"For many policymakers in the EU as well as in the US, the UK, China, Canada, the G7, and a wide range of other governments, transparency is a major policy priority," Bommasani said.

The EU is leading the charge on regulating AI, and aims to green light what would be the world's first law covering the technology by the end of the year.

The Group of Seven called for action on AI during a summit in Japan earlier this year, and Britain is hosting an international summit on the issue in November.

More information: Rishi Bommasani et al, The Foundation Model Transparency Index, arXiv (2023). DOI: 10.48550/arXiv.2310.12941

© 2023 AFP