How human faces can teach androids to smile

Robots able to display human emotion have long been a mainstay of science fiction stories. Now, Japanese researchers have been studying the mechanical details of real human facial expressions to bring those stories closer to reality.

In a study published in the Mechanical Engineering Journal, a multi-institutional research team led by Osaka University has begun mapping out the intricacies of human facial movements. The researchers used 125 tracking markers attached to a person's face to closely examine 44 different, singular facial actions, such as blinking or raising the corner of the mouth.

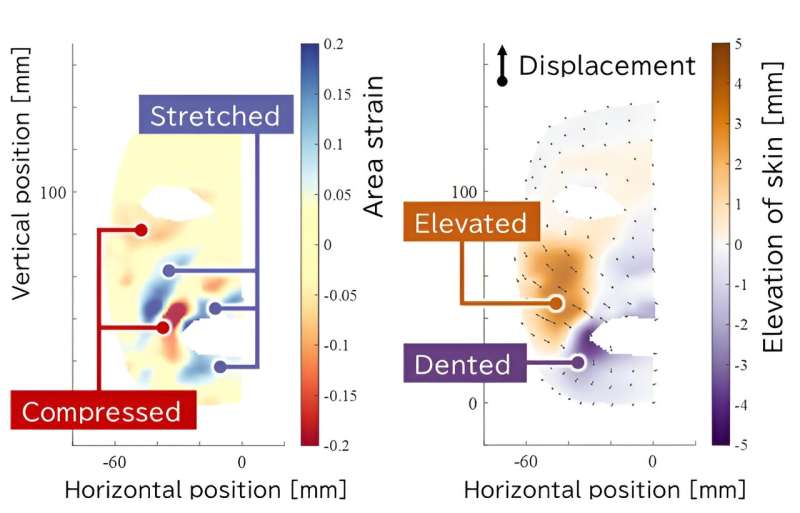

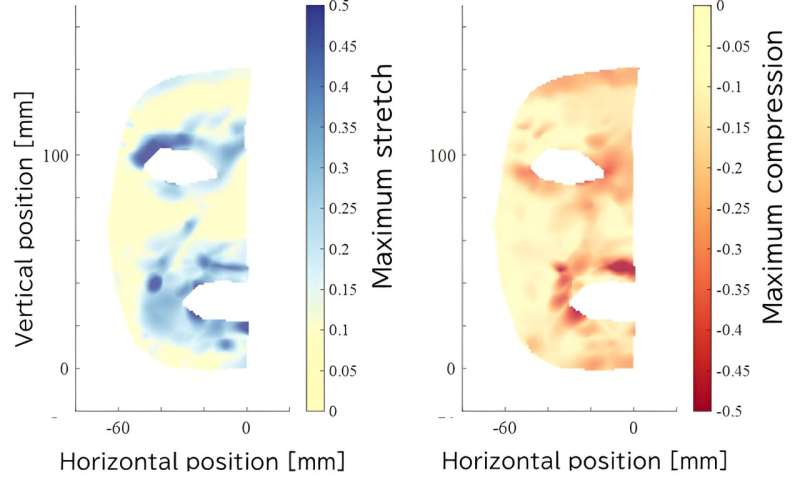

Every facial expression comes with a variety of local deformations as muscles stretch and compress the skin. Even the simplest motions can be surprisingly complex. Our faces contain a collection of different tissues below the skin, from muscle fibers to fatty adipose tissue, all working in concert to convey how we're feeling. This includes everything from a big smile to a slight raise of the corner of the mouth. This level of detail is what makes facial expressions so subtle and nuanced, in turn making them challenging to replicate artificially.

Until now, efforts to replicate facial expressions have relied on much simpler measurements of the overall face shape and of the motion of selected points on the skin before and after movements.

"Our faces are so familiar to us that we don't notice the fine details," explains Hisashi Ishihara, main author of the study. "But from an engineering perspective, they are amazing information display devices. By looking at people's facial expressions, we can tell when a smile is hiding sadness, or whether someone's feeling tired or nervous."

Information gathered by this study can help researchers working with artificial faces, both those created digitally on screens and, ultimately, the physical faces of android robots. Precise measurements of human faces, which capture the tensions and compressions in facial structure, will allow these artificial expressions to appear both more accurate and more natural.

"The facial structure beneath our skin is complex," says Akihiro Nakatani, senior author. "The deformation analysis in this study could explain how sophisticated expressions, which comprise both stretched and compressed skin, can result from deceivingly simple facial actions."

This work also has applications beyond robotics, such as improved facial recognition and medical diagnosis, the latter of which currently relies on doctors' intuition to notice abnormalities in facial movement.

So far, this study has examined the face of only one person, but the researchers hope to use their work as a jumping-off point to gain a fuller understanding of human facial motions. As well as helping robots to both recognize and convey emotion, this research could also help to improve facial movements in computer graphics, like those used in movies and video games, helping to avoid the dreaded 'uncanny valley' effect.

More information: Takeru MISU et al, Visualization and analysis of skin strain distribution in various human facial actions, Mechanical Engineering Journal (2023). DOI: 10.1299/mej.23-00189