This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility:

- fact-checked
- peer-reviewed publication
- trusted source
- proofread

Altering our language can help us deal with the intelligence of chatbots

New research suggests reframing how we talk about and interact with large language models may help us adapt to their intelligence.

Changing how we think and talk about large language models like ChatGPT can help us cope with the strange new sort of intelligence they have. That is the argument of a new paper, led by an Imperial College London researcher and published in Nature.

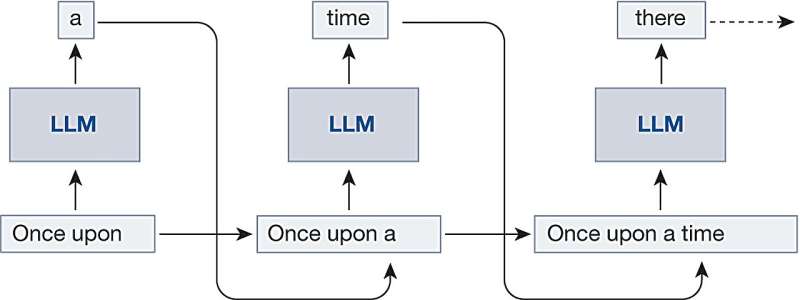

Such chatbots, which are underpinned by neural-network-based large language models (LLMs), can induce a compelling sense that we are speaking with fellow humans rather than with artificial intelligence.

Human brains are hardwired for sociability and built to connect and empathize with human-like entities. However, this can create problems for people who interact with chatbots and other AI-based entities. If bad-faith actors such as scammers or propagandists were to use these LLMs, people could be vulnerable to handing over their bank details in pursuit of connection, or to being swayed politically.

The paper sets out recommendations to prevent us from over-empathizing with AI chatbots to our detriment. Lead author Professor Murray Shanahan, from Imperial College London's Department of Computing, said, "The way we refer to LLMs anthropomorphizes them to the extent that we risk treating them like kin. By saying LLMs 'understand' us, or 'think' or 'feel' in certain ways, we give them human qualities. Our social brains are always looking for connection, so there is a vulnerability here that we should protect."

Better understanding what the researchers call "exotic mind-like artifacts" requires a shift in how we think about them, they argue. This shift can be achieved by using two basic metaphors. First, taking a simple and intuitive view, we can see AI chatbots as actors role-playing a single character. Second, taking a more nuanced and technical view, we can see them as maintaining a simulation of many possible roles, hedging their bets within a multiverse of possible characters.

Professor Shanahan said, "Both viewpoints have their advantages, which suggests the most effective strategy for thinking about such agents is not to cling to a single metaphor, but to shift freely between multiple metaphors."

More information: Murray Shanahan et al., Role play with large language models, Nature (2023). DOI: 10.1038/s41586-023-06647-8