December 10, 2023 feature

This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, peer-reviewed publication, trusted source, proofread.

Using hierarchical generative models to enhance the motor control of autonomous robots

To best move in their surrounding environment and tackle everyday tasks, robots should be able to perform complex motions, effectively coordinating the movement of individual limbs. Roboticists and computer scientists have thus been trying to develop computational techniques that can artificially replicate the process through which humans plan, execute, and coordinate the movements of different body parts.

A research group based at Intel Labs (Germany), University College London (UCL, UK), and VERSES Research Lab (US) recently set out to explore the motor control of autonomous robots using hierarchical generative models. These are computational techniques that organize the variables in data into different levels, or hierarchies, and use this structure to mimic specific processes.

Their paper, published in Nature Machine Intelligence, demonstrates the effectiveness of these models for enabling human-inspired motor control in autonomous robots.

"Our recent paper explores how we can draw inspiration from biological intelligence to formalize robot learning and control," Zhibin (Alex) Li, corresponding author of the paper, told Tech Xplore.

"This allows for natural motion planning and precise control of a robot's movements within a coherent framework. We believe that the evolution of motor intelligence is not a random combination of different abilities. The structure of our visual cortex, language cortex, motor cortex, and so on, has a deeper, structural reason why such a mechanism for connecting different neural pathways can work effectively and efficiently."

The recent study by Assoc Prof Zhibin (Alex) Li and distinguished neuroscientist Prof Karl Friston FMedSci FRSB FRS draws inspiration from neuroscience research, specifically what is currently known about biological intelligence and motor control in humans. Using the human brain as a reference, the team developed software, machine learning and control algorithms that could improve the ability of autonomous smart robots to reliably complete complex daily tasks.

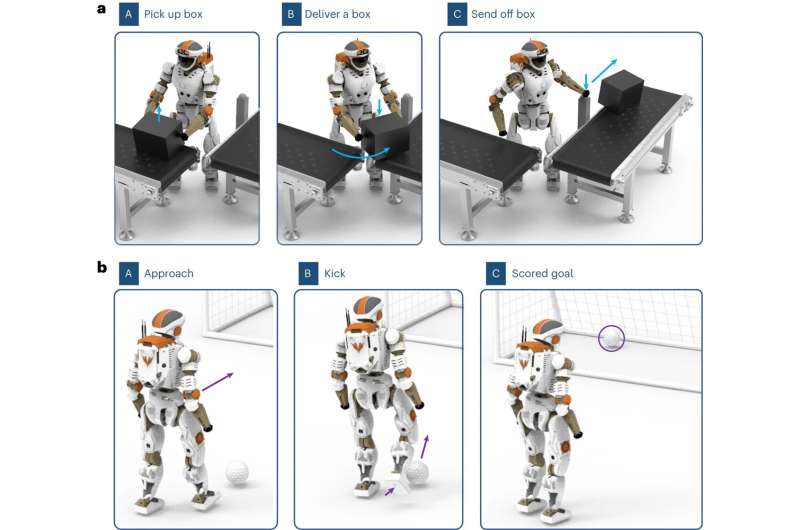

"In this paper, we have demonstrated this with our extensive simulation, where a full-body humanoid robot is able to transport boxes, open doors, operate facilities (e.g., conveyor belts) within a warehouse setting, play soccer, and even continue operating after physical damage to the robot body," Li said. "Our study demonstrates the power of nature: understanding how different cortices work together in our brain can inspire the design of smart robot brains."

Like other hierarchical generative models, the technique developed by Li and his colleagues works by organizing a task into different levels or hierarchies. Specifically, the team's model maps the overarching goal of a task onto the execution of individual limb motions at different time scales.
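To make the idea of "different time scales" concrete, here is a minimal sketch, assuming a toy one-dimensional robot: a slow, coarse level picks a waypoint toward the destination only every `plan_period` steps, while a fast, fine level tracks that waypoint at every step. All names (`ToyRobot`, `high_level_plan`, `low_level_control`, `run`) and all gains are hypothetical. This is a hand-coded two-level controller meant only to illustrate the layering, not the hierarchical generative model described in the paper.

```python
from dataclasses import dataclass


# Hypothetical toy state: a point mass moving along one axis.
@dataclass
class ToyRobot:
    position: float = 0.0
    velocity: float = 0.0


def high_level_plan(position: float, destination: float, max_stride: float) -> float:
    """Slow, coarse level: choose the next waypoint, at most max_stride away."""
    step = max(-max_stride, min(max_stride, destination - position))
    return position + step


def low_level_control(robot: ToyRobot, waypoint: float, kp: float, kd: float) -> float:
    """Fast, fine level: PD control that pulls the robot toward the waypoint."""
    return kp * (waypoint - robot.position) - kd * robot.velocity


def run(destination: float, steps: int = 400, plan_period: int = 20, dt: float = 0.05) -> ToyRobot:
    robot = ToyRobot()
    waypoint = robot.position
    for t in range(steps):
        # The coarse plan is refreshed only every plan_period steps (slow time scale).
        if t % plan_period == 0:
            waypoint = high_level_plan(robot.position, destination, max_stride=0.5)
        # The fine controller acts at every step (fast time scale).
        accel = low_level_control(robot, waypoint, kp=4.0, kd=3.0)
        robot.velocity += accel * dt
        robot.position += robot.velocity * dt
    return robot


if __name__ == "__main__":
    print(run(3.0))
```

The design choice being illustrated is only the separation of rates: the planner never issues motor commands directly, and the controller never reasons about the final destination.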

"The generative models predict the consequences of different actions, thereby helping to solve different types and levels of planning and to correctly map different robot actions, which is fairly hard and tedious to do," Li explained.

"For example, carrying a box from one place to another naturally maps to a global, coarse plan of walking towards the destination, together with closer monitoring and fine control of balance, as well as carrying and placing the boxes. All this complex coordination happens naturally at the same time using our software."
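The idea of a model that "predicts the consequences of different actions" can be illustrated with a minimal sketch, assuming a hand-written forward model of a one-dimensional point mass: each candidate action is rolled forward for a short horizon, and the action whose predicted outcome lands closest to the goal is selected. The functions `predict` and `choose_action`, the candidate set, and the horizon are hypothetical. This is a generic sampling-based, receding-horizon selection scheme swapped in for illustration, not the generative model or inference procedure used in the paper.

```python
from typing import Sequence


def predict(position: float, velocity: float, accel: float, horizon: int, dt: float) -> float:
    """Hypothetical forward model: apply a constant acceleration for `horizon`
    steps and return the predicted final position."""
    for _ in range(horizon):
        velocity += accel * dt
        position += velocity * dt
    return position


def choose_action(
    position: float,
    velocity: float,
    goal: float,
    candidates: Sequence[float],
    horizon: int = 10,
    dt: float = 0.05,
) -> float:
    """Return the candidate whose predicted outcome is closest to the goal."""
    return min(
        candidates,
        key=lambda a: abs(predict(position, velocity, a, horizon, dt) - goal),
    )


if __name__ == "__main__":
    actions = [-2.0, -1.0, 0.0, 1.0, 2.0]
    # At rest and far from the goal: the strongest push toward it is chosen.
    print(choose_action(0.0, 0.0, 1.0, actions))
    # Near the goal but moving fast: predicting the overshoot leads to braking.
    print(choose_action(0.9, 2.0, 1.0, actions))
```

In a closed loop, only the chosen action would be applied for one step before predicting again. The second call shows why prediction matters: a purely reactive rule that pushed toward the goal would not brake until after overshooting.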

The researchers evaluated their approach in a series of simulations and found that it allowed a humanoid robot to autonomously complete a complex task that entails a combination of actions, including walking, grasping objects, and manipulating them. Specifically, the robot could retrieve and transport a box while opening and walking through a door and kicking away a football.

"One of the most notable findings of our recent work is that taking inspiration from nature can be a very good starting point," Li said.

"We can draw inspiration from how our brain is organized and let it guide our design of the robot brain, rather than starting an engineering design from scratch. A fair amount of engineering work has been developed independently of bio-inspired approaches, and yet we still do not have intelligent robots that can do jobs as smartly as we do while using only a little energy, such as that from consuming bread and water. Instead, robots today use enormous power and computing to do simple things."

The initial findings gathered by Li and his colleagues are highly promising, highlighting the potential of hierarchical generative models for transferring human capabilities to robots. Future experiments on a wide range of physical robots could help to further validate these results.

"At this point in human history, we have collectively done a huge amount of work to separately replicate different kinds of human-level intelligence, each equivalent to a different part of the human brain," Li added. "Now, we can draw inspiration from the biological brain in terms of its structure and the organization of its functions, particularly how different cortices coordinate with each other. Then we can design an artificial brain based on how the human brain works at the functional level."

The recent work by this team of researchers contributes to ongoing efforts in embodied AI aimed at bringing the capabilities of robots closer to those of humans. Li and his colleagues plan to continue applying their proposed approach to the motor skills of real robots performing complex tasks and to maximize its societal potential.

"This study leads us to a viable path towards building AGI (artificial general intelligence) with embodied physical robots and abilities as a new form of productive force that can bring our civilization towards a brighter future, under good and positive governance from society and the scientific communities," Li added. "In our next studies, we will continue working towards fulfilling this ambition."

More information: Kai Yuan et al, Hierarchical generative modelling for autonomous robots, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00752-z

© 2023 Science X Network