January 26, 2024 report

Google announces the development of Lumiere, an AI-based next-generation text-to-video generator

A team of AI researchers at Google Research has developed a next-generation AI-based text-to-video generator called Lumiere. The group has published a paper describing their efforts on the arXiv preprint server.

Over the past few years, artificial intelligence applications have moved from the research lab to the user community at large. Chatbots built on large language models (LLMs), such as ChatGPT, have been integrated with web browsers, allowing users to generate text in unprecedented ways.

More recently, text-to-image generators have allowed users to create surreal imagery. And text-to-video generators have allowed users to generate short video clips using nothing but a few words. In this new effort, the team at Google has taken this last category to new heights with the announcement of a text-to-video generator called Lumiere.

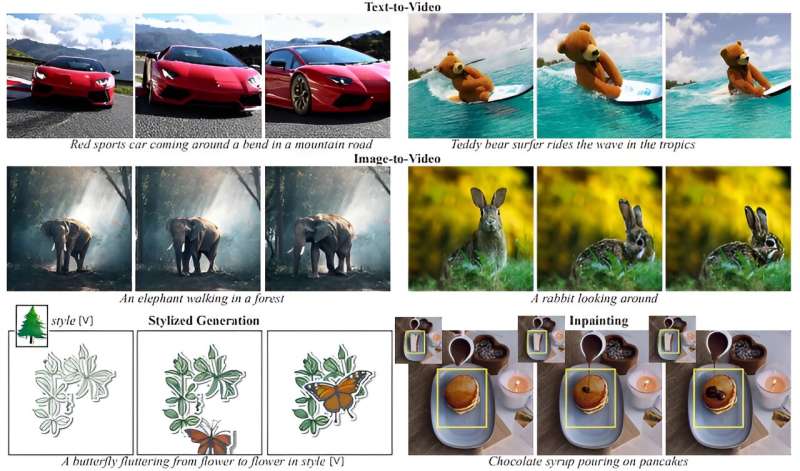

Lumiere, likely named after the Lumière brothers, who pioneered early motion-picture equipment, allows users to type in a simple sentence such as "two raccoons reading books together" and get back a finished, high-resolution video showing two raccoons doing just that. The researchers say the new generator advances text-to-video generation by producing more realistic and coherent motion than earlier systems.

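Google describes the technology behind the new generator as a "groundbreaking Space-Time U-Net architecture." According to the paper, this design generates the entire duration of a video at once, in a single pass through the model, by downsampling and upsampling the video in both space and time. Earlier video models typically generated a few distant keyframes first and then filled in the frames between them.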
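To make the idea of downsampling "in both space and time" concrete, here is a minimal pure-Python sketch, assuming a video stored as a nested list of grayscale frames. The function names `downsample_space_time` and `shape` and the use of plain averaging are illustrative assumptions, not Lumiere's code: the real network uses learned layers on far larger tensors. The sketch only shows how each level shrinks a clip along the time axis as well as its height and width, which is what allows the deepest part of such a network to work on a compact representation of the whole clip.

```python
from typing import List, Tuple

# A video as a nested list indexed [t][y][x] holding grayscale pixel values.
Video = List[List[List[float]]]


def downsample_space_time(video: Video, ft: int = 2, fs: int = 2) -> Video:
    """Average-pool a video by `ft` in time and by `fs` in height and width.

    Hypothetical toy stand-in for learned space-time downsampling layers;
    each output pixel is the mean of an ft x fs x fs block of input pixels.
    """
    t, h, w = len(video), len(video[0]), len(video[0][0])
    if t % ft or h % fs or w % fs:
        raise ValueError("dimensions must be divisible by the pooling factors")
    out: Video = []
    for t0 in range(0, t, ft):
        frame = []
        for y0 in range(0, h, fs):
            row = []
            for x0 in range(0, w, fs):
                block = [
                    video[t0 + dt][y0 + dy][x0 + dx]
                    for dt in range(ft)
                    for dy in range(fs)
                    for dx in range(fs)
                ]
                row.append(sum(block) / len(block))
            frame.append(row)
        out.append(frame)
    return out


def shape(video: Video) -> Tuple[int, int, int]:
    """Return (frames, height, width)."""
    return (len(video), len(video[0]), len(video[0][0]))


# A 16-frame, 8x8 clip where every pixel in frame t has value t.
clip = [[[float(t) for _ in range(8)] for _ in range(8)] for t in range(16)]
level1 = downsample_space_time(clip)
level2 = downsample_space_time(level1)
print(shape(clip), shape(level1), shape(level2))  # (16, 8, 8) (8, 4, 4) (4, 2, 2)
```

A spatial-only U-Net would keep all 16 frames at every level. Pooling along time as well means each level here holds an eighth as many values as the one above it.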

The demonstration video shows that Google added extra features. Users can edit an existing video by highlighting part of it and typing an instruction such as "change dress color to red," a technique known as video inpainting. The generator can also produce stylized videos, in which a subject is rendered in the style of a reference image rather than as a photorealistic scene, and different reference images yield different styles. It also creates cinemagraphs, in which a user highlights part or all of a still image and only that region is animated.
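Both inpainting and cinemagraphs depend on a user-drawn mask that separates the region to change from the region to keep. The minimal sketch below shows that constraint for a cinemagraph: pixels outside the mask stay fixed to the still image in every frame, while pixels inside it come from newly generated frames. It assumes tiny grayscale images stored as nested lists, and the function name `composite_cinemagraph` and all inputs are hypothetical. It is a simplified post-hoc compositing step, not Lumiere's method, which handles the mask inside the generative model rather than pasting pixels afterward.

```python
from typing import List

Frame = List[List[float]]  # [y][x] grayscale pixel values
Mask = List[List[int]]     # 1 = user-highlighted region that should move


def composite_cinemagraph(still: Frame, mask: Mask, generated: List[Frame]) -> List[Frame]:
    """Keep unmasked pixels fixed to `still`; take masked pixels from each generated frame."""
    height, width = len(still), len(still[0])
    out = []
    for frame in generated:
        out.append([
            [frame[y][x] if mask[y][x] else still[y][x] for x in range(width)]
            for y in range(height)
        ])
    return out


# Hypothetical inputs: a 2x3 still image, a mask over its right column,
# and three "generated" frames whose pixels all equal the frame index.
still = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
mask = [[0, 0, 1], [0, 0, 1]]
generated = [[[float(t)] * 3 for _ in range(2)] for t in range(3)]
video = composite_cinemagraph(still, mask, generated)
# video[2] == [[0.0, 0.0, 2.0], [0.0, 0.0, 2.0]]: only the masked column changes.
```

The same masking logic describes inpainting: for an edit such as "change dress color to red," the mask covers the dress, and only that region of each frame is regenerated.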

In its announcement, Google did not say whether it plans to release Lumiere to the public, likely because of the legal problems that could arise if the tool were used to create videos that violate copyright law.

More information: Omer Bar-Tal et al, Lumiere: A Space-Time Diffusion Model for Video Generation, arXiv (2024). DOI: 10.48550/arxiv.2401.12945

© 2024 Science X Network