This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, trusted source, proofread.

Science fiction meets reality as researchers develop techniques to overcome obstructed views

After a recent car crash, John Murray-Bruce wished he could have seen the other car coming. The crash reaffirmed the mission of Murray-Bruce, an assistant professor of computer science and engineering at USF: to create a technology that could do just that, seeing around obstacles and ultimately expanding one's line of sight.

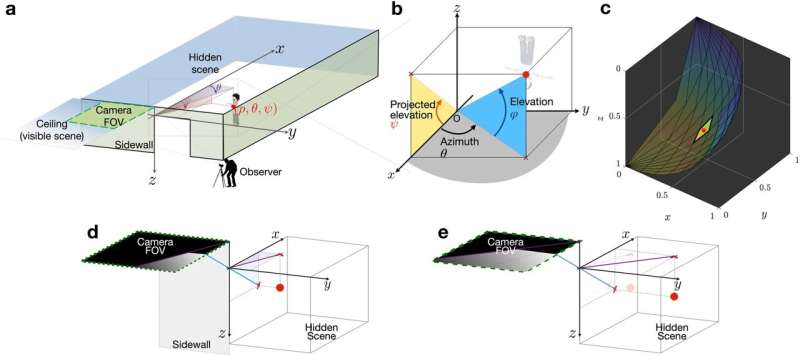

Using a single photograph, Murray-Bruce and his doctoral student, Robinson Czajkowski, created an algorithm that computes highly accurate, full-color three-dimensional reconstructions of areas hidden behind obstacles. The capability could not only help prevent car crashes but also aid law enforcement in hostage situations, search-and-rescue operations, and military strategy.

"We're turning ordinary surfaces into mirrors to reveal regions, objects, and rooms that are outside our line of vision," Murray-Bruce said. "We live in a 3D world, so obtaining a more complete 3D picture of a scenario can be critical in a number of situations and applications."

In their study, published in Nature Communications, Czajkowski and Murray-Bruce report the first successful 3D reconstruction of a hidden scene made with an ordinary digital camera. The algorithm analyzes faint shadows cast on nearby surfaces, captured in a single photo, to produce a high-quality reconstruction of the scene. Although the underlying method is highly technical, it could have broad applications.
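For readers curious about the principle of recovering a hidden scene from faint shadows, the snippet below is a deliberately simplified sketch. It is not the researchers' algorithm, which uses two edges and recovers full-color 3D scenes. It assumes a hypothetical 1D toy model: a single vertical edge, such as a door frame, means that a point on the floor at angle index k receives light only from hidden-scene angles 0 through k, so the faint penumbra is a cumulative sum of the hidden scene's brightness. The function names `forward_model` and `reconstruct`, the regularization weight, and the synthetic scene are all illustrative assumptions.

```python
import numpy as np

# Hypothetical toy model (an assumption, not the published method):
# a vertical edge lets floor point k see only hidden-scene angles 0..k,
# so the observed penumbra is a cumulative sum of hidden brightness.

def forward_model(n: int) -> np.ndarray:
    """Lower-triangular matrix of ones: row k sums hidden angles 0..k."""
    return np.tril(np.ones((n, n)))

def reconstruct(photo: np.ndarray, lam: float = 1e-3) -> np.ndarray:
    """Invert the toy model with Tikhonov-regularized least squares.

    Solves (A^T A + lam * I) x = A^T y. With a small lam this roughly
    undoes the cumulative sum, i.e. differences neighbouring pixels.
    """
    A = forward_model(photo.size)
    lhs = A.T @ A + lam * np.eye(photo.size)
    return np.linalg.solve(lhs, A.T @ photo)

# Synthetic hidden scene (hypothetical data for illustration only).
rng = np.random.default_rng(0)
hidden = np.zeros(64)
hidden[20:28] = 1.0   # a bright object behind the edge
hidden[40:44] = 0.5   # a dimmer object

# Simulated "photo" of the floor: faint shadow gradient plus camera noise.
photo = forward_model(64) @ hidden
photo += 0.01 * rng.standard_normal(64)

estimate = reconstruct(photo)
```

In this toy setting, `estimate` places its brightest values at the bright object's angles (indices 20 to 27). Differencing amplifies camera noise, which is one reason real systems need far more careful modeling and regularization than this sketch.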

"These shadows are all around us," Czajkowski said. "The fact we can't see them with our naked eye doesn't mean they're not there."

The idea of seeing around obstacles has been a staple of science-fiction movies and books for decades. Murray-Bruce says this research makes significant strides toward bringing that concept to life.

Before this work, researchers had used ordinary cameras only to create rough 2D reconstructions of small spaces. The most successful demonstrations of 3D imaging of hidden scenes all required specialized, expensive equipment.

"Our work achieves a similar result using far less," Czajkowski said. "You don't need to spend a million dollars on equipment for this anymore."

Czajkowski and Murray-Bruce expect it will be 10 to 20 years before the technology is robust enough to be adopted by law enforcement and car manufacturers. For now, they plan to keep improving the technology's speed and accuracy to broaden its future applications, including helping self-driving cars improve their safety and situational awareness.

"In just over a decade since the idea of seeing around corners emerged, there has been remarkable progress, and there is accelerating interest and research activity in the area," Murray-Bruce said. "This increased activity, along with access to better, more sensitive cameras and faster computing power, forms the basis for my optimism on how soon this technology will become practical for a wide range of scenarios."

Although the algorithm is still in development, it is available for other researchers to test and reproduce in their own settings.

More information: Robinson Czajkowski et al, Two-edge-resolved three-dimensional non-line-of-sight imaging with an ordinary camera, Nature Communications (2024). DOI: 10.1038/s41467-024-45397-7