March 8, 2024 feature

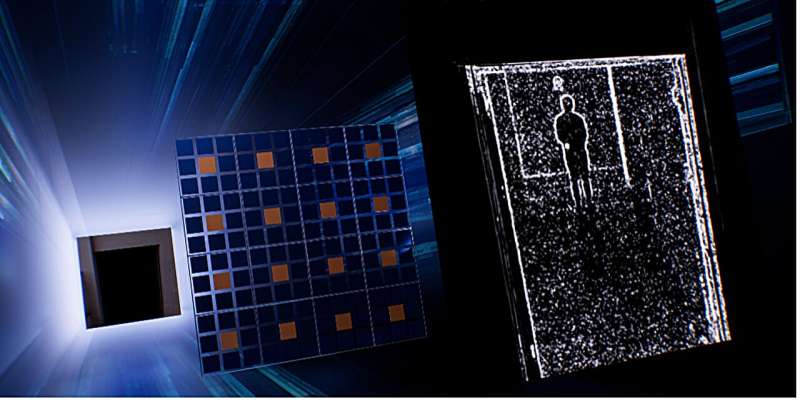

An approach to realize in-sensor dynamic computing and advance computer vision

The rapid advancement of machine learning techniques and sensing devices over the past decades has opened new possibilities for the detection and tracking of objects, animals, and people. The accurate and automated detection of visual targets, also known as intelligent machine vision, can have various applications, ranging from the enhancement of security and surveillance tools to environmental monitoring and the analysis of medical imaging data.

While machine vision tools have achieved highly promising results, their performance often declines in low lighting conditions or when there is limited visibility. To effectively detect and track dim targets, these tools should be able to reliably extract features such as edges and corners from images, which conventional sensors based on complementary metal-oxide-semiconductor (CMOS) technology are often unable to capture.

Researchers at Nanjing University and the Chinese Academy of Sciences recently introduced a new approach to develop sensors that could better detect dim targets in complex environments. Their approach, outlined in Nature Electronics, relies on the realization of in-sensor dynamic computing, thus merging sensing and processing capabilities into a single device.

"In low-contrast optical environments, intelligent perception of weak targets has always faced severe challenges in terms of low accuracy and poor robustness," Shi-Jun Liang, senior author of the paper, told Tech Xplore. "This is mainly due to the small intensity difference between the target and background light signals, with the target signal almost submerged in background noise."

Conventional techniques for the static pixel-independent photoelectric detection of targets in images rely on sensors based on CMOS technology. While some of these techniques have performed better than others, they often cannot accurately distinguish target signals from background signals.

In recent years, computer scientists have thus been trying to devise new principles for the development of hardware based on low-dimensional materials created using mature growth and processing techniques, which are also compatible with conventional silicon-based CMOS technology. The key goal of these research efforts is to achieve higher robustness and precision in low-contrast optical environments.

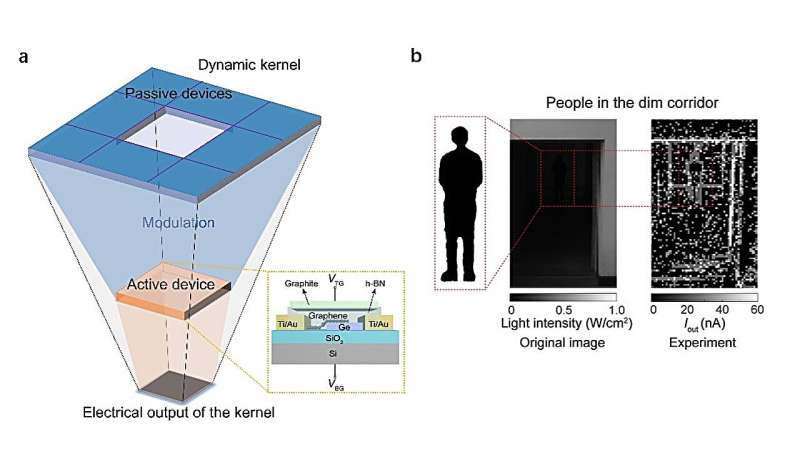

"We have been working on the technology of in-sensor computing and published a few interesting works about optoelectronic convolutional processing, which is essentially based on static processing," Liang explained. "We asked ourselves whether we could introduce the dynamic control into the in-sensor optoelectronic computing technology to enhance the computation capability of the sensor. Building on this idea, we proposed the concept of in-sensor dynamic computing by operating the neighboring pixels in a correlated manner and demonstrated its promising application in complex lighting environments."

In their recent paper, Feng Miao, Liang and their colleagues introduced a new in-sensor dynamic computing approach designed to detect and track dim targets under unfavorable lighting conditions. This approach relies on multi-terminal photoelectric devices based on graphene/germanium mixed-dimensional heterostructures, which are combined to create a single sensor.

"By dynamically controlling the correlation strength between adjacent devices in the optoelectronic sensor, we can realize dynamic modulation of convolution kernel weights based on local image intensity gradients and implement in-sensor dynamic computing units adaptable to image content," Miao said.

"Unlike the conventional sensor where the devices are independently operated, the devices in our in-sensor dynamic computing technology are correlated to detect and track dim targets, which enables ultra-accurate and robust recognition of contrast-varying targets in complex lighting environments."

Miao, Liang and their colleagues are the first to introduce an in-sensor computing paradigm that relies on dynamic feedback control between interconnected, neighboring optoelectronic devices based on multi-terminal mixed-dimensional heterostructures. Initial tests found that their proposed approach is highly promising, as it enabled the robust tracking of dim targets under unfavorable lighting conditions.

"Compared with conventional optoelectronic convolution whose kernel weights are constant regardless of optical image inputs, the weights of our 'dynamic kernel' are correlated to local image content, enabling the sensor to be more flexible, adaptable and intelligent," Miao said. "The dynamic control and correlated programming also allow convolutional neural network backpropagation approaches to be incorporated into the frontend sensor."
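The difference between a constant kernel and a content-dependent one can be illustrated with a small software analogy. The sketch below is not the researchers' device model or algorithm: the Laplacian-style kernel, the rule of rescaling weights by the local intensity range, the `floor` parameter and the helper names (`patch_at`, `apply_kernel`, `step_edge`) are all assumptions chosen only to show how weights that depend on local image content might respond to a dim edge versus a bright one.

```python
# Fixed 3x3 Laplacian-style edge kernel (weights sum to zero).
# The kernel is symmetric, so correlation and convolution coincide.
LAPLACIAN = [[-1.0, -1.0, -1.0],
             [-1.0,  8.0, -1.0],
             [-1.0, -1.0, -1.0]]


def patch_at(image, y, x):
    """Return the 3x3 neighborhood of (y, x), replicating border pixels."""
    h, w = len(image), len(image[0])
    return [[image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
             for dx in (-1, 0, 1)]
            for dy in (-1, 0, 1)]


def apply_kernel(image, kernel, dynamic=False, floor=1e-3):
    """Apply a 3x3 kernel at every pixel.

    dynamic=False: the same weights are used everywhere (static kernel).
    dynamic=True:  the weights are rescaled per pixel by the local
                   intensity range. This modulation rule is hypothetical
                   and only stands in for "weights tied to local content".
    """
    out = []
    for y in range(len(image)):
        row = []
        for x in range(len(image[0])):
            patch = patch_at(image, y, x)
            scale = 1.0
            if dynamic:
                values = [v for r in patch for v in r]
                # floor keeps flat (zero-range) regions from dividing by zero
                scale = 1.0 / max(max(values) - min(values), floor)
            row.append(scale * sum(kernel[i][j] * patch[i][j]
                                   for i in range(3) for j in range(3)))
        out.append(row)
    return out


def step_edge(low, high, height=5, width=6):
    """Synthetic image: left half at `low`, right half at `high`."""
    return [[low if x < width // 2 else high for x in range(width)]
            for _ in range(height)]


dim = step_edge(0.50, 0.52)      # low-contrast edge
bright = step_edge(0.10, 0.90)   # high-contrast edge

for name, img in [("dim", dim), ("bright", bright)]:
    s = apply_kernel(img, LAPLACIAN)[2][2]
    d = apply_kernel(img, LAPLACIAN, dynamic=True)[2][2]
    print(f"{name:6s} static={s:+.3f} dynamic={d:+.3f}")
```

In this toy setup, the static response at the edge shrinks in proportion to the contrast, so the dim edge yields a much weaker signal than the bright one, while the rescaled response is essentially the same for both. The actual sensor achieves its content dependence in hardware through correlated neighboring devices, which this sketch does not attempt to model.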

Notably, the devices that Miao, Liang and their colleagues used to implement their approach are based on graphene and germanium, two materials that are compatible with conventional CMOS technology and can easily be produced on a large scale. In the future, the researchers' approach could be evaluated in various real-world settings, to further validate its potential.

"The next step for this research will be to validate the scalability of in-sensor dynamic computing through large-scale on-chip integration, and many engineering and technical issues still need to be resolved," Liang added.

"Extending the detection wavelength to near-infrared or even mid-infrared bands is another future research direction, which will broaden the applicability to various low-contrast scenarios such as remote sensing, medical imaging, monitoring, security and early-warning in low visibility meteorological conditions."

More information: Yuekun Yang et al, In-sensor dynamic computing for intelligent machine vision, Nature Electronics (2024). DOI: 10.1038/s41928-024-01124-0

© 2024 Science X Network