How drivers and cars understand each other

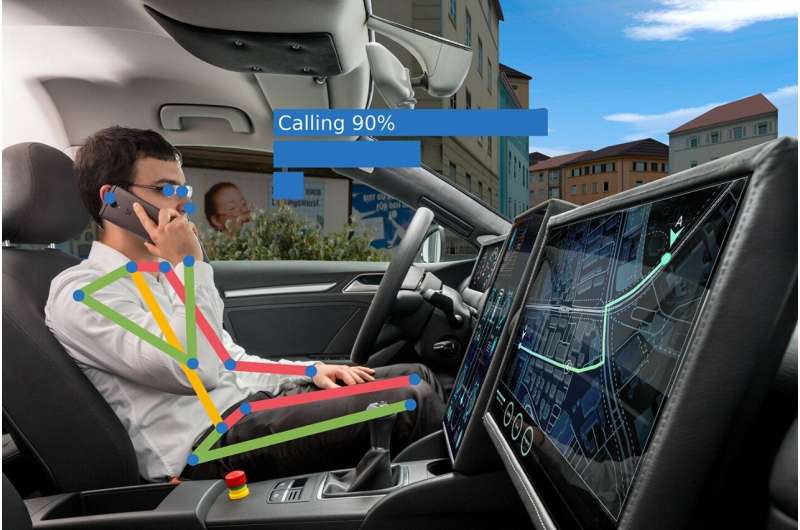

Optimizing communication between vehicle and driver as a function of the degree of automation is the objective of a research project conducted by Fraunhofer in collaboration with other companies. The researchers are combining sensors for monitoring the vehicle interior with language models to form what are known as vision language models. These models are designed to increase the convenience and safety of cars in the future.

"Warning, if you keep reading now, you may become nauseous on the winding stretch of road. In five minutes, we'll be on the highway, and it will be easier." Or: "It's about to rain and we need to switch off automated driving. Please get ready to drive on your own for a while. I'm sorry, but you'll need to stow your laptop in a safe place for now. Safety first." In a couple of years, cars could be communicating with drivers in a way very similar to this.

With the increasing automation of vehicles, the way they interact with humans needs to be rethought. In the KARLI project, a research team from the Fraunhofer Institutes for Optronics, System Technologies and Image Exploitation IOSB and for Industrial Engineering IAO has taken up this task together with ten partners, including Continental, Ford and Audi, as well as a series of medium-sized enterprises and universities. KARLI is a German acronym that stands for "Artificial Intelligence for Adaptive, Responsive and Level-compliant Interaction" in vehicles of the future.

Today, we distinguish between six different levels of automation: not automated (0), assisted (1), partially automated (2), highly automated (3), fully automated (4) and autonomous (5). "In the KARLI project, we are developing AI functions for automation levels two to four. To do this, we record what the driver is doing and design different human-machine interactions that are typical for each level," explains Frederik Diederichs, project coordinator at the Fraunhofer Institute for Optronics, System Technologies and Image Exploitation IOSB in Karlsruhe.
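The six levels and the project's scope can be written down as a small data structure. The following is only an illustrative sketch: the names `AutomationLevel` and `in_karli_scope` are hypothetical and do not come from the project, while the level names and the range of two to four follow the description above.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """The six automation levels as named in the article (hypothetical class name)."""
    NOT_AUTOMATED = 0
    ASSISTED = 1
    PARTIALLY_AUTOMATED = 2
    HIGHLY_AUTOMATED = 3
    FULLY_AUTOMATED = 4
    AUTONOMOUS = 5


def in_karli_scope(level):
    """Return True for levels two to four, the range the project says it targets."""
    return AutomationLevel.PARTIALLY_AUTOMATED <= level <= AutomationLevel.FULLY_AUTOMATED


# List the names of the levels that fall inside the project's scope.
levels_in_scope = [level.name for level in AutomationLevel if in_karli_scope(level)]
print(levels_in_scope)
```

Using an integer-backed enumeration keeps the levels ordered, so the scope check is a simple range comparison.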

Interaction at different levels

Depending on the level of automation, drivers either need to focus on the road or they can focus on other things. At some levels they have ten seconds to take the wheel again; at others they do not need to intervene again at all. These differing user requirements and the ability to change between the different levels depending on the road situation make it a complex task to define and design suitable interactions for each level. In addition, the interaction and design must ensure that drivers are always aware of the current level of automation so that they can perform their role correctly.

The applications developed in the KARLI project have three main focus points: First, warnings and information should encourage level-compliant behavior and, for example, prevent the driver from being distracted in a moment where they need to be paying attention to the road.

The communication to the user is therefore adapted to each level—it may be visual, acoustic, haptic or a combination of the three. The interaction is controlled by AI agents, whose performance and reliability are being evaluated by the partners.

Second, the risk of motion sickness, one of the biggest problems with passive driving, needs to be anticipated and minimized. Between 20% and 50% of people suffer from motion sickness.

"By matching the occupants' activities with predictable accelerations on winding stretches of road, AI can address the right passengers at the right time so they can prevent motion sickness, giving them tips tailored to their current activities. We do this by using what are known as generated user interfaces, 'GenUIn' for short, to tailor the interaction between humans and AI," explains Diederichs.

This AI interaction is the third application in the KARLI project. GenUIn generates individually targeted output, providing information on how to reduce motion sickness if it arises, for example. These tips may be related to the current activity, which is recorded by sensors, but they also take into account the options that are available in the current context.
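The idea of matching occupants' activities with predictable accelerations can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the project's method: the activity names, the acceleration threshold, the 60-second look-ahead and all identifiers (`Segment`, `Occupant`, `occupants_to_warn`) are hypothetical.

```python
from dataclasses import dataclass

# Assumed values for illustration only; not taken from the KARLI project.
RISKY_ACTIVITIES = {"reading", "using_laptop", "watching_video"}
LATERAL_ACCEL_THRESHOLD = 2.0  # m/s^2, assumed cut-off for a "winding" segment


@dataclass
class Segment:
    starts_in_s: float         # seconds until the vehicle reaches the segment
    peak_lateral_accel: float  # predicted peak lateral acceleration in m/s^2


@dataclass
class Occupant:
    seat: str
    activity: str  # current activity, e.g. as classified from interior sensors


def occupants_to_warn(segments, occupants, horizon_s=60.0):
    """Pair each occupant doing a risky activity with the next winding segment.

    Returns an empty list if no winding segment lies within the look-ahead horizon.
    """
    upcoming = [
        s for s in segments
        if 0 <= s.starts_in_s <= horizon_s
        and s.peak_lateral_accel >= LATERAL_ACCEL_THRESHOLD
    ]
    if not upcoming:
        return []
    nearest = min(upcoming, key=lambda s: s.starts_in_s)
    return [(o, nearest) for o in occupants if o.activity in RISKY_ACTIVITIES]


# Example: one passenger is reading, another is looking outside.
segments = [Segment(30.0, 3.1), Segment(300.0, 0.4)]
occupants = [Occupant("front_passenger", "reading"),
             Occupant("rear_left", "looking_outside")]
for occupant, segment in occupants_to_warn(segments, occupants):
    print(f"{occupant.seat}: winding road in {segment.starts_in_s:.0f} s, "
          f"consider pausing {occupant.activity}")
```

In this sketch only the reading passenger is addressed, which mirrors the goal of reaching "the right passengers at the right time". A real system would presumably replace the fixed threshold and activity set with learned models.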

Users can also personalize the entire interaction in the vehicle and adapt it to their needs progressively over time. The automation level is always taken into account in the interaction: For example, the information may be brief and purely verbal if the driver is focusing on the road, or it may be more detailed and presented through visual channels if the vehicle is currently doing the driving.
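The level-dependent choice between brief verbal output and more detailed visual output could look roughly like this. It is a minimal sketch: the `Message` class, the `present` function and the channel names `"speech"` and `"display"` are hypothetical, and the single boolean stands in for whatever the system derives from the current automation level.

```python
from dataclasses import dataclass


@dataclass
class Message:
    brief: str     # short version, suitable for speech output
    detailed: str  # longer version, suitable for a display


def present(message, driver_must_watch_road):
    """Choose output channels and wording for one message.

    Returns a (channels, text) tuple. Channel names are illustrative only.
    """
    if driver_must_watch_road:
        # The driver is focusing on the road: keep it short and purely verbal.
        return (["speech"], message.brief)
    # The vehicle is doing the driving: more detail, shown visually.
    return (["display"], message.detailed)


rain = Message(
    brief="Rain ahead, please take over.",
    detailed="It's about to rain and automated driving must be switched off. "
             "Please stow your laptop and get ready to drive.",
)
print(present(rain, driver_must_watch_road=True))
print(present(rain, driver_must_watch_road=False))
```

Keeping both wordings in one message object lets the same piece of information be rendered differently as the automation level changes during a trip.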

Various AI-supported sensors record the activities in the car; the key elements are the optical sensors in the interior cameras. Current legislation for autonomous driving is making these cameras mandatory in any case, in order to ensure that the driver is capable of driving.

The researchers then combine the visual data from the cameras with large language models to form what are known as vision language models (VLMs). These allow modern driver assistance systems in (partially) autonomous vehicles to record situations inside the vehicle semantically and to respond to those situations.

Diederichs compares the interaction in the vehicle of the future to a butler who stays in the background but understands the context and offers the best possible support to the vehicle's occupants.

Anonymization and data protection

"Crucial factors for the acceptance of these systems include trust in the service provider, data security and a direct benefit for drivers," says Frederik Diederichs. This means that the best possible anonymization and data security as well as transparent and explainable data collection are crucial.

"Not everything that is in a camera's field of view is evaluated. The information that a sensor is recording and what it's used for must be transparent. We are researching how to ensure this in our working group Xplainable AI at Fraunhofer IOSB."

In another project (Anymos), Fraunhofer researchers are working on anonymizing camera data, processing it in a way that minimizes data use, and protecting it effectively.

Data efficiency with Small2BigData

Another unique selling point of the research project is data efficiency. "Our Small2BigData approach only requires a small amount of high-quality AI training data, which is empirically collected and synthetically generated. It forms the basis for car manufacturers to know what data to collect later during serial operation so that the system can be used.

"This keeps the volume of data required to a manageable level and makes the results of the project scalable," explains Diederichs.

Just recently, Diederichs and his team put a mobile research laboratory based on a Mercedes EQS into operation in order to learn more about user needs in automated driving at level 3 on the road. Here, the findings from the KARLI project are being tested and evaluated in practice. This will enable the first features to be made available in mass-produced vehicles as soon as 2026.

"German manufacturers are in tough competition with their international counterparts when it comes to automated driving, and they will only succeed if they can competitively improve the user experience in the car and address and tailor it to user requirements with AI," the expert says. "The results of our project have an important part to play in this."