May 25, 2020 feature

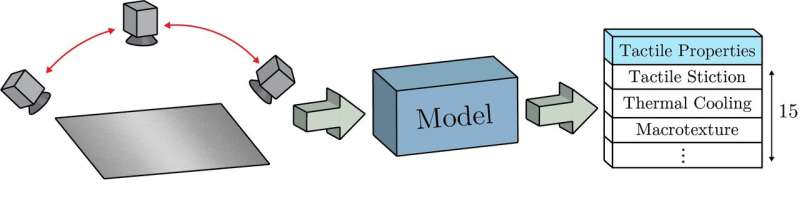

A model that estimates tactile properties of surfaces by analyzing images

The ability to estimate the physical properties of objects is of key importance for robots, as it allows them to interact more effectively with their surrounding environment. In recent years, many robotics researchers have worked on techniques that let robots estimate the tactile properties of objects or surfaces, which could ultimately give them abilities resembling the human sense of touch.

Building on previous research, Matthew Purri, a Ph.D. student specializing in Computer Vision and AI at Rutgers University, recently developed a convolutional neural network (CNN)-based model that can estimate tactile properties of surfaces by analyzing images of them. Purri's new paper, posted as a preprint on arXiv, was supervised by Kristin Dana, a professor of Electrical Engineering at Rutgers.

"My previous research dealt with fine-grain material segmentation from satellite images," Purri told TechXplore. "Satellite image sequences provide a wealth of material information about a scene in the form of varied viewing and illumination angles and multispectral information. We learned how valuable multi-view information is for identifying material from our previous work and believed that this information could act as a cue for the problem of physical surface property estimation."

Dana and other researchers at Rutgers had previously tried to develop a technique to estimate the coefficient of friction of surfaces from reflectance disk images, a specific type of image that shows how much radiant energy a surface or material reflects. In his paper, Purri set out to extend this method so that it could estimate more physical properties from RGB images.

"The objective of this new project was to estimate numerous physical properties of a surface, such as friction and compliance, from visual information alone," Purri explained. "We worked together with SynTouch, a company that created a tactile sensor dubbed Toccare, which measures a variety of tactile physical properties of a surface. In our arXiv paper, we explore the possibility of estimating these properties from a single image and from multiple images."

Purri and Dana also explored whether the angles from which the input images were taken affect how well their neural network can estimate a surface's physical properties. Rather than selecting viewing angles manually, however, the researchers devised a model that automatically learns optimal combinations of viewing angles along with the neural network's parameters.
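The article does not say how the angle combinations are learned. One common way to make such a choice trainable is to give every viewing angle a learnable score, turn the scores into weights with a softmax, and fuse the per-angle image features as a weighted average; after training, the highest-scoring angles form the selected combination. The sketch below shows only that forward computation, in plain Python. The softmax weighting, the function names and the toy features are all assumptions for illustration, not the authors' method.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_views(view_features: Sequence[Sequence[float]],
               angle_logits: Sequence[float]) -> List[float]:
    """Softmax-weighted average of per-angle feature vectors (hypothetical scheme).

    view_features[i] is a hypothetical CNN feature vector for the image taken
    at viewing angle i; angle_logits[i] is a learnable score for that angle,
    which would be trained alongside the network weights.
    """
    if len(view_features) != len(angle_logits):
        raise ValueError("need exactly one logit per viewing angle")
    weights = softmax(angle_logits)
    fused = [0.0] * len(view_features[0])
    for w, feat in zip(weights, view_features):
        for d, value in enumerate(feat):
            fused[d] += w * value
    return fused


def top_k_angles(angle_logits: Sequence[float], k: int) -> List[int]:
    # After training, keep the k highest-scoring angles as the chosen combination.
    ranked = sorted(range(len(angle_logits)), key=lambda i: angle_logits[i], reverse=True)
    return sorted(ranked[:k])


# Usage: three viewing angles with two-dimensional features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
logits = [2.0, -1.0, 0.5]
print(fuse_views(features, logits))
print(top_k_angles(logits, 2))  # [0, 2]
```

Because the softmax is differentiable, gradients from the property-estimation loss can flow back into the angle scores, which is what lets the selection be learned jointly with the network rather than hand-picked.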

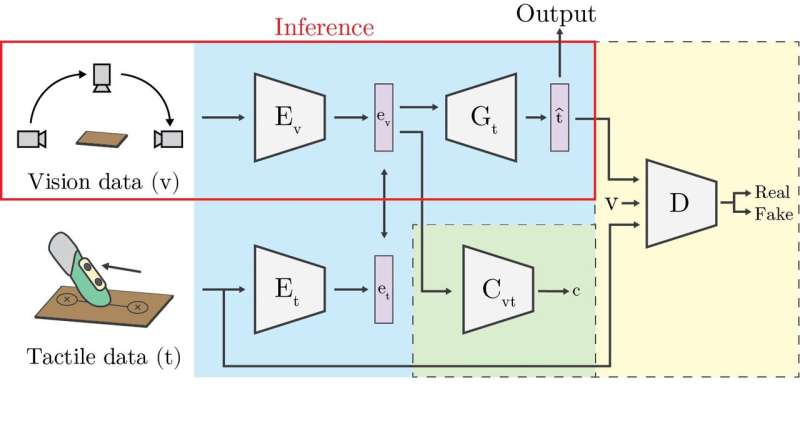

"One goal of our model is to learn a function that separately projects images of a surface (visual information) and tactile physical property information into a shared subspace, where pairs of visuo-tactile information are close and dissimilar visuo-tactile pairs are far apart," Purri said. "To achieve this goal, the model is penalized if separately projected visuo-tactile pairs are far apart in the subspace."
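The shared-subspace idea Purri describes is often implemented with a margin-based contrastive loss. In the sketch below, matched image/tactile embeddings are penalized by their squared distance, while mismatched ones are penalized only when they come closer than a margin. The linear projections, the margin value and the exact loss form are assumptions chosen for illustration; the paper's own loss may differ.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def project(x: Vector, weights: Sequence[Vector]) -> List[float]:
    # Hypothetical linear projection into the shared subspace: one output per weight row.
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in weights]


def distance(a: Vector, b: Vector) -> float:
    # Euclidean distance between two embeddings.
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def contrastive_loss(visual: Sequence[Vector], tactile: Sequence[Vector],
                     margin: float = 1.0) -> float:
    """visual[i] and tactile[i] embed the same surface (a matched pair)."""
    n = len(visual)
    loss = 0.0
    for i in range(n):
        for j in range(n):
            d = distance(visual[i], tactile[j])
            if i == j:
                # Matched pair: penalize any distance between the two projections.
                loss += d ** 2
            else:
                # Mismatched pair: penalize only if closer than the margin.
                loss += max(0.0, margin - d) ** 2
    return loss / (n * n)


# Usage: separate (hypothetical) projections for images and tactile readings.
W_v = [[1.0, 0.0], [0.0, 1.0]]
W_t = [[0.5, 0.0], [0.0, 0.5]]
vis = [project(x, W_v) for x in [[0.0, 0.0], [2.0, 0.0]]]
tac = [project(y, W_t) for y in [[0.0, 0.0], [4.0, 0.0]]]
print(contrastive_loss(vis, tac))  # 0.0
```

Here the two projections map each surface's image and tactile reading to the same point, and the two surfaces sit farther apart than the margin, so the loss is zero. Swapping the tactile rows would make the loss positive.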

The technique devised by Purri and Dana also follows an auxiliary classification objective. Through a process known as visuo-tactile feature clustering, it groups visuo-tactile pairs whose visual and tactile properties resemble those of other pairs, and uses these groups as new classification labels.
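As an illustration of how clustering can turn unlabeled visuo-tactile pairs into classification labels, the sketch below concatenates each surface's visual and tactile feature vectors and runs plain k-means, using the cluster index as a pseudo-label. The article does not name the clustering algorithm; k-means, its deterministic initialization and the toy feature values are assumptions.

```python
from typing import List, Sequence


def kmeans_labels(points: Sequence[Sequence[float]], k: int, iters: int = 20) -> List[int]:
    """Assign each joint visuo-tactile feature vector a cluster id (pseudo-label).

    Deterministic initialization: the first k points serve as starting centroids.
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        for i, p in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum((pi - ci) ** 2 for pi, ci in zip(p, centroids[c])),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Usage: hypothetical visual embeddings and tactile readings for four surfaces.
visual = [[0.1, 0.2], [0.0, 0.1], [5.0, 5.1], [5.2, 4.9]]
tactile = [[0.3], [0.2], [0.9], [1.0]]
joint = [v + t for v, t in zip(visual, tactile)]  # concatenate per surface
print(kmeans_labels(joint, k=2))
```

The resulting labels can then serve as targets for an auxiliary classifier, encouraging the network to keep surfaces that look and feel alike close together.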

"Another objective of our model is to estimate physical properties from visual information," Purri said. "A jointly learned function receives projected visual information and estimates one or several physical properties. We increased the estimation performance by including an adversarial objective to this section of the model. The physical property estimate combined with the input visual information essentially tricks a discriminator function into thinking it was an authentic physical property value."
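A minimal sketch of how a regression loss can be combined with an adversarial term, in the spirit of the quote above: a discriminator (not implemented here) scores an (image, property) pair as real or estimated, and the estimator is rewarded when its estimate is scored as real. The binary cross-entropy form, the `adv_weight` value and all function names are assumptions for illustration; the sketch takes the discriminator's output probabilities as inputs rather than defining a network.

```python
import math
from typing import Sequence


def bce(prob: float, target: float, eps: float = 1e-7) -> float:
    # Binary cross-entropy for a single probability, clipped to avoid log(0).
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """d_real = D(image, measured property); d_fake = D(image, estimated property).

    The hypothetical discriminator D should output 1 for real pairs, 0 for estimates.
    """
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def estimator_loss(estimates: Sequence[float], targets: Sequence[float],
                   d_fake: float, adv_weight: float = 0.1) -> float:
    # Regression term: mean squared error against the tactile-sensor measurements.
    mse = sum((e - t) ** 2 for e, t in zip(estimates, targets)) / len(targets)
    # Adversarial term: lower when the discriminator mistakes the estimate for real.
    return mse + adv_weight * bce(d_fake, 1.0)


# Usage: the same estimate (0.42 vs. a measured 0.40) scores better when it
# fools the discriminator than when the discriminator catches it.
fooled = estimator_loss([0.42], [0.40], d_fake=0.9)
caught = estimator_loss([0.42], [0.40], d_fake=0.1)
print(fooled < caught)  # True
```

In training, the two losses would be minimized in alternation: the discriminator learns to tell measured values from estimates, and the estimator learns to produce values that are both accurate and hard to distinguish from real measurements.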

Purri and Dana evaluated their CNN-based model in a series of experiments and found that it performed remarkably well, estimating many of the surfaces' physical properties from images alone.

The new model devised by the researchers could have a number of interesting applications. First, it could help robotic systems better understand the key characteristics of the objects and surfaces around them, allowing the robots to interact with those objects more efficiently and to navigate new environments with greater ease.

In addition, the researchers introduced a method to automatically calculate optimal combinations of viewing angles for training models to estimate physical properties of objects. In the future, the optimal combinations they identified could inform the design of sensors tailored to specific tasks, such as quality control in factories.
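To make "optimal combination of viewing angles" concrete, the sketch below uses the simplest possible baseline: try every subset of k angles and keep the one with the lowest validation error. This brute-force search is a swapped-in technique, not the learned selection the researchers describe, and the `validation_error` callback and the toy error function are hypothetical stand-ins for training and evaluating a real model.

```python
from itertools import combinations
from typing import Callable, Optional, Sequence, Tuple

Angles = Tuple[float, ...]


def best_angle_subset(angles: Sequence[float], k: int,
                      validation_error: Callable[[Angles], float]) -> Tuple[Optional[Angles], float]:
    """Try every k-angle combination and return the one with the lowest error.

    validation_error is a hypothetical callback: train or evaluate a model using
    only images from the given angles and return its error.
    """
    best: Optional[Angles] = None
    best_err = float("inf")
    for subset in combinations(angles, k):
        err = validation_error(subset)
        if err < best_err:
            best, best_err = subset, err
    return best, best_err


def toy_error(subset: Angles) -> float:
    # Hypothetical stand-in: pretend a wider angular spread lowers the error.
    return 1.0 / (max(subset) - min(subset) + 1.0)


best, err = best_angle_subset([0, 15, 30, 45, 60], k=2, validation_error=toy_error)
print(best)  # (0, 60)
```

The number of subsets grows combinatorially with the number of candidate angles, and each evaluation could mean training a model, which is one motivation for learning the selection inside the network instead.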

"In the first phase of our research we have shown how well physical properties can be learned from visual information," Purri added. "Our next objective will be to use the insight we gained to improve model performance on tasks that involve precise object manipulation."

More information: Teaching cameras to feel: Estimating tactile physical properties of surfaces from images. arXiv:2004.14487 [cs.CV]. arxiv.org/abs/2004.14487

© 2020 Science X Network