February 3, 2022 report

DeepMind's AI programming tool AlphaCode tests in top 54% of human coders

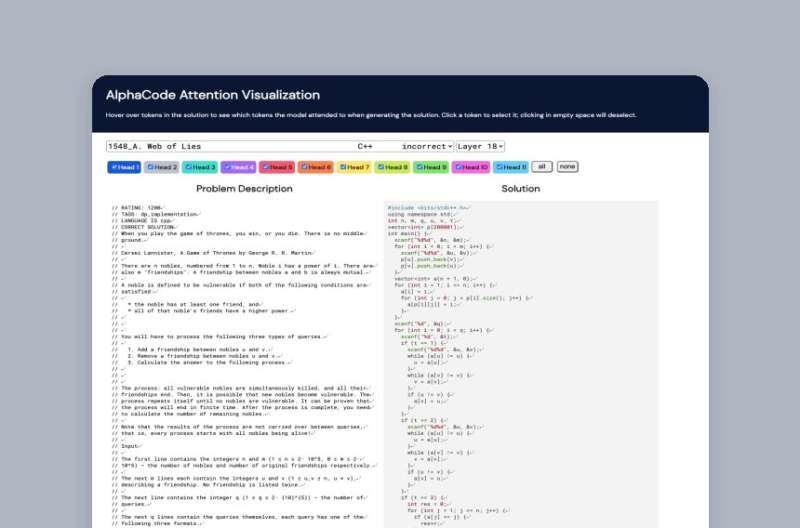

The team at DeepMind has pitted its AI programming tool AlphaCode against human competitors and found that it ranked in the top 54 percent of human coders. In their preprint article, the group at DeepMind suggests that its programming application has opened the door to future tools that could make programming easier and more accessible. The team has also posted a page on its blog site outlining the progress being made with AlphaCode.

Research teams have been working steadily over the past several years to apply artificial intelligence to computer programming. The goal is to create AI systems that are capable of writing code for computer applications that are more sophisticated than those currently created by human coders. Barring that, many have noted that if computers were writing code, computer programming would become a much less costly endeavor. Thus far, however, most such efforts have met with limited success because the systems lack the intelligence needed to carry out the most difficult part of programming: deciding on the approach.

When a programmer is asked to write a program that will perform a certain function, that programmer has to first figure out how such a problem might be solved. As an example, if the task is to solve any maze of a certain size, the programmer can take a brute-force approach or apply techniques such as recursion. The programmer makes a choice based on both real-world knowledge and lessons learned through experience. AI programs typically have little of either, and they also lack the sort of intelligence that humans possess. But it appears researchers are getting closer. DeepMind's AlphaCode is an AI system that is able to create code within the confines of a programming competition—a setting where simple problems are outlined and code is written within a few hours.
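To make the maze example above concrete, here is a minimal sketch of the recursive option, written as a depth-first search over a small grid. It is purely illustrative: it is not taken from the DeepMind preprint and is not code produced by AlphaCode. The function name `solve_maze`, the grid encoding (`#` for walls, any other character for open cells), and the sample maze are assumptions made for this example.

```python
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]  # (row, column)


def solve_maze(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return one path from start to goal as a list of cells, or None if none exists."""
    visited: Set[Cell] = set()

    def visit(cell: Cell) -> Optional[List[Cell]]:
        row, col = cell
        # Reject cells outside the grid, walls, and cells already explored.
        if not (0 <= row < len(grid) and 0 <= col < len(grid[row])):
            return None
        if grid[row][col] == "#" or cell in visited:
            return None
        visited.add(cell)
        if cell == goal:
            return [cell]
        # Recurse into the four neighbours: right, down, left, up.
        for d_row, d_col in ((0, 1), (1, 0), (0, -1), (-1, 0)):
            rest = visit((row + d_row, col + d_col))
            if rest is not None:
                return [cell] + rest
        return None  # Dead end: the caller tries its next neighbour.

    return visit(start)


# Hypothetical 3x3 maze: S = start, G = goal, # = wall, . = open cell.
MAZE = [
    "S.#",
    ".##",
    "..G",
]

path = solve_maze(MAZE, (0, 0), (2, 2))
print(path)  # [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
```

The sketch finds a path, not necessarily the shortest one. A programmer who needed the shortest route would likely choose breadth-first search instead, which is the kind of judgment about approach that the article describes.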

The team at DeepMind tested their new tool against humans competing on Codeforces, a site that hosts programming challenges. Those who compete are rated on both their approach and their skills. AlphaCode took on 10 challenges with no assistance from human handlers. It had to read the outline that described what was to be done, develop an approach, and then write the code. After judging, AlphaCode was ranked in the top 54.3 percent of programmers who had taken the same challenges. DeepMind notes that this ranking puts the system in the top 28 percent of programmers who have competed in any event on the site over the prior six months.

More information: DeepMind: deepmind.com/blog/article/Comp … mming-with-AlphaCode

Preprint: storage.googleapis.com/deepmin … n_with_alphacode.pdf

© 2022 Science X Network