January 18, 2023 report

This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility:

fact-checked

preprint

trusted source

proofread

Using a deep neural network to improve virtual images of people created using WiFi signals

A trio of researchers at Carnegie Mellon University has taken the use of WiFi signals to identify people in a building to a new level, through the use of a deep neural network. Jiaqi Geng, Dong Huang and Fernando De la Torre suggest, in a paper they have posted to the arXiv preprint server, that their approach allows for creating images on par with RGB cameras.

Back in 2013, a team of engineers at MIT found that WiFi signals could be used to detect the presence of a person in a building. They noted that by mapping the signals over time, they could see where the signals were being blocked by a person's body. By continuing the process over the next few years, they found that they were able to create stick figures that showed where a person was in a given building at any given time.

The process is now known as DensePose. In this new effort, the research trio have taken this approach to a new level by introducing a neural network that helps fill in the bodies of the stick figures, providing much more lifelike images—and it can do it on the fly, allowing for real-time motion tracking of multiple people in a given area.

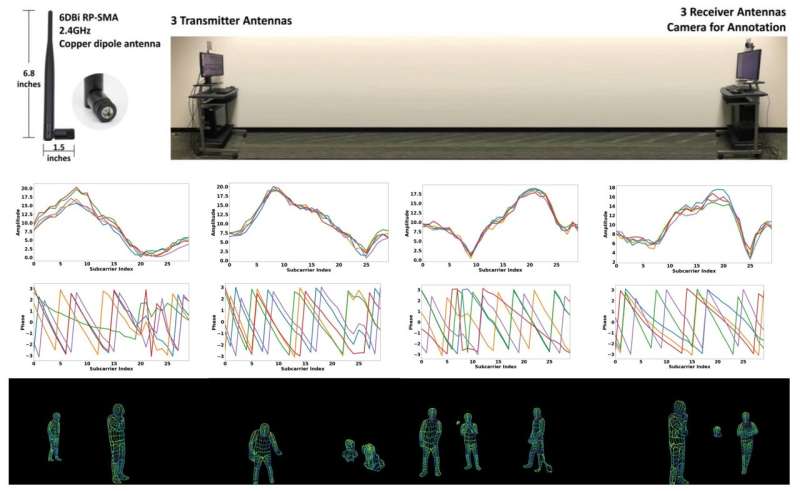

The work by the team involved placing three WiFi transmitters along with three aligned receivers at a scene—indoors in a room, or outside at a chosen site—along with a computer for processing and display. They note that the WiFi equipment used in their experiments cost just US $30, far less than LiDAR or radar systems.

When running, the WiFi signals are picked up by the receivers which send them to a GPU inside of a computer for processing. The processing involves using a neural network to map the amplitude and phase of the signals to coordinates onto a virtually created human body—a process known as dense human pose correspondence.

During the process, the virtual human body is broken down into 24 components where two-dimensional texture coordinates are mapped onto them based on WiFi signals. The body parts are then put back together where they resemble a realistic human form—all in real time. The result is a virtual animation shown on the computer display that mimics the locations and actions of people in the original scene.

More information: Jiaqi Geng et al, DensePose From WiFi, arXiv (2023). DOI: 10.48550/arxiv.2301.00250

© 2023 Science X Network