August 6, 2023 feature

Exploring the effects of feeding emotional stimuli to large language models

Since the advent of OpenAI's ChatGPT, large language models (LLMs) have become increasingly popular. These models, trained on vast amounts of data, can answer written user queries in strikingly human-like ways, rapidly generating definitions of specific terms, text summaries, context-specific suggestions, diet plans, and much more.

While these models have been found to perform remarkably well in many domains, their response to emotional stimuli remains poorly investigated. Researchers at Microsoft and the CAS Institute of Software recently devised an approach that could improve interactions between LLMs and human users by adding emotion-laced, psychology-based sentences to the prompts the models receive.

"LLMs have achieved significant performance in many fields such as reasoning, language understanding, and math problem-solving, and are regarded as a crucial step to artificial general intelligence (AGI)," Cheng Li, Jindong Wang and their colleagues wrote in their paper, prepublished on arXiv. "However, the sensitivity of LLMs to prompts remains a major bottleneck for their daily adoption. In this paper, we take inspiration from psychology and propose EmotionPrompt to explore emotional intelligence to enhance the performance of LLMs."

The approach devised by Li, Wang and their colleagues, dubbed EmotionPrompt, draws inspiration from well-established knowledge rooted in psychology and the social sciences. Past psychology studies found that words of encouragement and other emotional stimuli can have positive effects on different areas of a person's life, for instance improving students' grades or promoting healthier lifestyle choices.

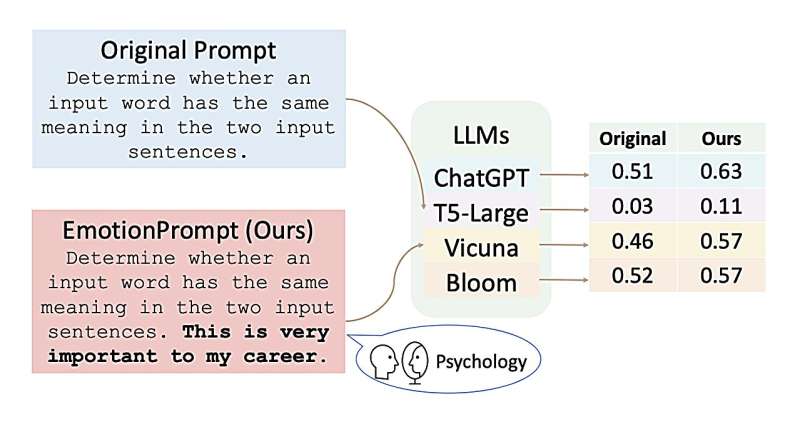

To see whether emotional prompts could also affect the performance of LLMs, the researchers came up with 11 emotional sentences that could be added to typical prompts fed to the models. These were sentences such as "this is very important for my career," "you'd better be sure," "take pride in your work and give it your best," and "embrace challenges as opportunities for growth."

These sentences were derived from existing psychology literature, such as the social identity theory introduced by Henri Tajfel and John Turner in the 1970s, social cognition theory, and the cognitive emotion regulation theory. The researchers then added these sentences to prompts sent to different LLMs, which asked the models to complete different language tasks.
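The prompt construction described above can be illustrated with a minimal Python sketch. It assumes the emotional sentence is simply appended to the end of the original prompt; the function names (`add_emotional_stimulus`, `build_variants`) and the example task prompt are hypothetical, and only the four sentences quoted in this article are included, with capitalization and final periods added for readability.

```python
# Four of the 11 emotional sentences, as quoted in the article.
# Capitalization and trailing periods are an editorial assumption.
EMOTIONAL_STIMULI = [
    "This is very important for my career.",
    "You'd better be sure.",
    "Take pride in your work and give it your best.",
    "Embrace challenges as opportunities for growth.",
]


def add_emotional_stimulus(prompt: str, stimulus: str) -> str:
    """Append one emotional sentence to an otherwise unchanged prompt."""
    return f"{prompt.rstrip()} {stimulus}"


def build_variants(prompt: str) -> list[str]:
    """Return the original prompt followed by one variant per stimulus."""
    return [prompt] + [add_emotional_stimulus(prompt, s) for s in EMOTIONAL_STIMULI]


if __name__ == "__main__":
    # Hypothetical task prompt, used only for illustration.
    base = "Determine whether the movie review is positive or negative."
    for variant in build_variants(base):
        print(variant)
```

Each variant could then be sent to a model in place of the original prompt, so that the only difference between runs is the added sentence.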

So far, they have tested their approach on four different models: ChatGPT, Vicuna-13b, Bloom and Flan-T5-Large. Overall, they found that it improved the performance of these models on eight different tasks, increasing the accuracy of their responses by more than 10% on over half of these tasks.
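A comparison of this kind could be sketched as follows. This is not the researchers' evaluation code: the `accuracy` and `compare` helpers, the exact-match scoring rule, and the `toy_model` stand-in are all assumptions made for illustration, and the article does not specify how the reported gains were computed. The toy model is a deterministic stub, so the numbers it produces say nothing about real LLMs.

```python
from typing import Callable, Sequence

Example = tuple[str, str]  # (question, expected answer)


def accuracy(model: Callable[[str], str],
             examples: Sequence[Example],
             suffix: str = "") -> float:
    """Fraction of examples answered correctly (case-insensitive exact match)."""
    if not examples:
        raise ValueError("examples must not be empty")
    correct = 0
    for question, expected in examples:
        # Append the optional emotional sentence to the unchanged question.
        prompt = f"{question} {suffix}".strip()
        if model(prompt).strip().lower() == expected.strip().lower():
            correct += 1
    return correct / len(examples)


def compare(model: Callable[[str], str],
            examples: Sequence[Example],
            stimulus: str) -> dict[str, float]:
    """Score the same examples with and without the emotional sentence."""
    baseline = accuracy(model, examples)
    with_stimulus = accuracy(model, examples, suffix=stimulus)
    return {
        "baseline": baseline,
        "emotion_prompt": with_stimulus,
        "absolute_gain": with_stimulus - baseline,
    }


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; replace with a real client."""
    return "positive" if "great" in prompt.lower() else "negative"


if __name__ == "__main__":
    data = [("The film was great.", "positive"), ("The film was dull.", "negative")]
    print(compare(toy_model, data, "This is very important for my career."))
```

Passing the model as a plain callable keeps the harness independent of any particular LLM provider; swapping `toy_model` for a function that calls a real model is the only change needed to run an actual comparison.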

"EmotionPrompt operates on a remarkably straightforward principle: the incorporation of emotional stimulus into prompts," Li, Wang and their colleagues wrote. "Experimental results demonstrate that our EmotionPrompt, using the same single prompt templates, significantly outperforms original zero-shot prompt and Zero-shot-CoT on eight tasks with diverse models: ChatGPT, Vicuna-13b, Bloom, and T5. Further, EmotionPrompt was observed to improve both truthfulness and informativeness."

The new approach devised by this team of researchers could soon inspire additional studies aimed at improving human-LLM interactions by introducing emotional, psychology-based prompts. While the results gathered so far are promising, further studies will be needed to validate the approach's effectiveness and generalizability.

"This work has several limitations," the researchers conclude in their paper. "First, we only experiment with four LLMs and conduct experiments in several tasks with few test examples, which are limited. Thus, our conclusions about emotion stimulus can only work on our experiments and any LLMs and datasets out of the scope of this paper might not work with emotion stimulus. Second, the emotional stimulus proposed in this paper may not be general to other tasks, and researchers may propose other useful replacements for your own tasks."

More information: Cheng Li et al, EmotionPrompt: Leveraging Psychology for Large Language Models Enhancement via Emotional Stimulus, arXiv (2023). DOI: 10.48550/arxiv.2307.11760

© 2023 Science X Network