September 21, 2023 report

With encouragement, large language models devise more efficient prompts

One of the principal drivers of efficient large language model (LLM) tasks is the prompt.

To be most effective, a prompt must be clear and well-tailored to the task.

Researchers devote significant resources to ensure that prompts are optimized to yield the best outcome. Improper use of keywords, awkward phrasing, vague instructions or lack of appropriate context can degrade the quality of results.

Computer programmers are always looking for better ways to formulate prompts. Researchers at Google DeepMind recently considered a novel approach: What if large language models helped construct prompts?

They came up with a process called OPRO, Optimization by PROmpting.

In a paper published Sept. 7 on the preprint server arXiv, DeepMind researcher Chengrun Yang explained that OPRO is "a simple and effective approach" for assigning optimization tasks to LLMs in natural language.

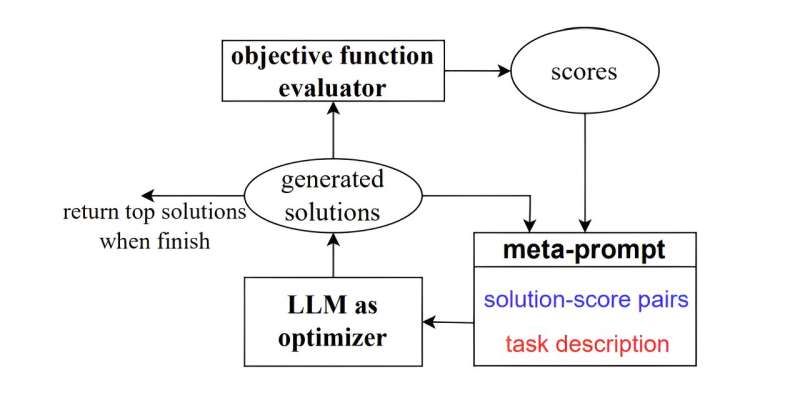

"In each optimization step," Yang said, "the LLM generates new solutions from the prompt that contains previously generated solutions with their values, then the new solutions are evaluated and added to the prompt for the next optimization step."

Such iterative solutions are commonly used in optimization tasks, but the formulation has generally been devised by humans relying heavily on mathematical models.

OPRO capitalizes on LLMs' novel ability to understand natural language instructions.

OPRO builds a prompt that clearly defines the challenge and includes examples of similar problems along with instructions for an iterative approach to a solution. As the LLM proposes solutions at each step of the optimization process, the prompt is updated to incorporate those solutions and their scores. The process is repeated until the LLM stops finding better solutions or a step limit is reached.
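The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the DeepMind implementation: `build_meta_prompt`, `stub_proposer` and `optimize` are hypothetical names, and `stub_proposer` is a random stand-in for the LLM call so that the example runs without any model or API.

```python
import random


def build_meta_prompt(task_description, scored_solutions, top_k=5):
    """Assemble a text prompt from the task and the best-scoring solutions so far."""
    best = sorted(scored_solutions, key=lambda pair: pair[1])[-top_k:]
    lines = [task_description, "Previous solutions and their scores:"]
    lines += [f"solution={sol!r} score={score:.3f}" for sol, score in best]
    lines.append("Propose a new solution with a higher score.")
    return "\n".join(lines)


def stub_proposer(meta_prompt, scored_solutions, rng):
    """Hypothetical stand-in for the LLM: ignores the prompt text and
    randomly perturbs the best solution found so far."""
    best_solution, _ = max(scored_solutions, key=lambda pair: pair[1])
    return best_solution + rng.uniform(-1.0, 1.0)


def optimize(task_description, score_fn, initial_solution, steps=50, seed=0):
    """Propose, evaluate, and feed results back into the prompt, step by step."""
    rng = random.Random(seed)
    scored = [(initial_solution, score_fn(initial_solution))]
    for _ in range(steps):
        meta_prompt = build_meta_prompt(task_description, scored)
        candidate = stub_proposer(meta_prompt, scored, rng)
        # Each new solution is evaluated and added to the history,
        # so it appears in the prompt for the next step.
        scored.append((candidate, score_fn(candidate)))
    return max(scored, key=lambda pair: pair[1])


# Toy task (an assumption for illustration): maximize -(x - 3)^2.
best_x, best_score = optimize(
    "Find x that maximizes -(x - 3)^2.", lambda x: -(x - 3) ** 2, 0.0
)
```

In a real setup the stub would be replaced by a call to a language model that reads the meta-prompt and returns a new candidate as text.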

"Optimization with LLMs enables quick adaptation to different tasks by changing the problem description in the prompt, and the optimization process can be customized by adding instructions to specify the desired properties of the solutions," said Yang.

The researchers tested their approach on two popular types of challenges: linear regression and the traveling salesman problem. The results were promising, and with one added touch the researchers found significant improvement.

Linear regression is a statistical model that describes a linear relationship between one or more input variables and a numeric outcome. It can be used in financial forecasting, for example, by predicting stock prices from quantified signals such as the sentiment of news reports from Wall Street, or it can help recommend Netflix movies by predicting the rating a user would give a title.
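In its simplest one-variable form, linear regression fits a line y = w·x + b to a set of points by minimizing the sum of squared errors. The sketch below shows that objective and the standard closed-form least-squares fit; the function names and the sample points are assumptions chosen for illustration, not data from the paper. An optimizer such as OPRO would be asked to propose (w, b) pairs that make `squared_error` small.

```python
def squared_error(w, b, points):
    """Sum of squared differences between observed y and the line w*x + b."""
    return sum((y - (w * x + b)) ** 2 for x, y in points)


def fit_line(points):
    """Closed-form ordinary least squares for a single input variable."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    w = sxy / sxx
    b = mean_y - w * mean_x
    return w, b


# Illustrative points lying exactly on y = 2x + 1.
points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = fit_line(points)
```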

The traveling salesman problem is a classic optimization problem: given a list of cities and the distances between them, find the shortest route that visits each city exactly once and returns to the starting point.
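For a handful of cities the problem can be solved exactly by checking every possible ordering, which makes the objective concrete. The sketch below is a brute-force illustration only, with hypothetical function names and made-up coordinates; it is not the method used in the paper and becomes impractical beyond roughly ten cities because the number of orderings grows factorially.

```python
import itertools
import math


def tour_length(order, cities):
    """Total length of a closed tour that visits cities in the given order."""
    return sum(
        math.dist(cities[order[i]], cities[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def shortest_tour(cities):
    """Try every ordering that starts at city 0 and keep the shortest."""
    rest = range(1, len(cities))
    candidates = ((0,) + perm for perm in itertools.permutations(rest))
    best = min(candidates, key=lambda order: tour_length(order, cities))
    return best, tour_length(best, cities)


# Illustrative input: the four corners of a unit square.
cities = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
order, length = shortest_tour(cities)
```

For these four points the shortest closed tour follows the perimeter of the square.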

OPRO performed admirably. It achieved results "on par with some hand-crafted heuristic algorithms," Yang said.

"But with an extra boost, optimized prompts outperform[ed] human-designed prompts … by a significant margin, sometimes over 50%," Yang said.

What was the extra boost?

Encouragement.

The DeepMind team discovered that when phrases expressing encouragement were attached to prompts, better results were achieved.

Such phrases included, "Take a deep breath and work on this problem step-by-step," "Let's work this out in a step-by-step way to be sure we have the right answer," and "Let's calculate our way to the solution."

The researchers did not elaborate on why such supportive expressions yielded better results, though one plausible explanation is that LLMs were trained on data in which these expressions often accompany careful, step-by-step reasoning.

"Optimization is ubiquitous," Yang said. "While derivative-based algorithms have been powerful tools for various problems, the absence of gradient imposes challenges on many real-world applications… With the advancement of prompting techniques, LLMs have achieved impressive performance on a variety of domains."

More information: Chengrun Yang et al, Large Language Models as Optimizers, arXiv (2023). DOI: 10.48550/arxiv.2309.03409

© 2023 Science X Network