AI-powered 'sonar' on smartglasses tracks gaze, facial expressions

Cornell University researchers have developed two technologies that track a person's gaze and facial expressions through sonar-like sensing. Both are small enough to fit on commercial smartglasses or on virtual reality or augmented reality headsets, yet they consume significantly less power than similar tools that use cameras.

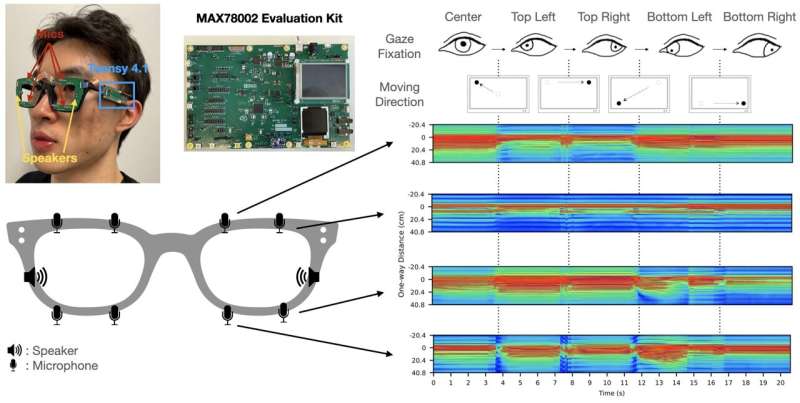

Both use speakers and microphones mounted on an eyeglass frame to bounce inaudible soundwaves off the face and pick up reflected signals caused by face and eye movements. One device, GazeTrak, is the first eye-tracking system that relies on acoustic signals. The second, EyeEcho, is the first eyeglass-based system to continuously and accurately detect facial expressions and recreate them through an avatar in real-time.

The devices can last for several hours on a smartglasses battery and more than a day on a VR headset.

"It's small, it's cheap and super low-powered, so you can wear it on smartglasses every day—it won't kill your battery," said Cheng Zhang, assistant professor of information science. Zhang directs the Smart Computer Interfaces for Future Interactions (SciFi) Lab that created the new devices.

"In a VR environment, you want to recreate detailed facial expressions and gaze movements so that you can have better interactions with other users," said Ke Li, a doctoral student who led the GazeTrak and EyeEcho development.

For GazeTrak, researchers positioned one speaker and four microphones around the inside of each eye frame of a pair of glasses to bounce and pick up soundwaves from the eyeball and the area around the eyes. The resulting sound signals are fed into a customized deep-learning pipeline that uses artificial intelligence to infer the direction of the person's gaze continuously.
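The article does not describe the signal processing behind this step. As a rough illustration of sonar-like sensing in general, and not necessarily the researchers' method, the sketch below emits a near-ultrasonic chirp, simulates one delayed reflection, and recovers the delay by cross-correlating the microphone signal with the transmitted chirp. The sample rate, the frequency band, the delay, and all function names are assumptions chosen for the example.

```python
import numpy as np

def make_chirp(f0, f1, n_samples, sample_rate):
    """Linear frequency sweep from f0 to f1 Hz, n_samples long."""
    t = np.arange(n_samples) / sample_rate
    duration = n_samples / sample_rate
    sweep_rate = (f1 - f0) / duration
    return np.sin(2 * np.pi * (f0 * t + 0.5 * sweep_rate * t**2))

def simulate_echo(chirp, delay_samples, total_len, attenuation=0.5):
    """Hypothetical microphone signal: one attenuated, delayed copy of the chirp."""
    received = np.zeros(total_len)
    received[delay_samples:delay_samples + len(chirp)] += attenuation * chirp
    return received

def echo_profile(received, chirp):
    """Cross-correlate the mic signal with the chirp; a peak marks an echo delay."""
    return np.abs(np.correlate(received, chirp, mode="valid"))

# Assumed values for illustration only (not taken from the article).
sample_rate = 50_000                                   # Hz
chirp = make_chirp(18_000, 21_000, 600, sample_rate)   # near-inaudible band
received = simulate_echo(chirp, delay_samples=40, total_len=1000)

profile = echo_profile(received, chirp)
estimated_delay = int(np.argmax(profile))              # delay in samples
print(estimated_delay, estimated_delay / sample_rate)  # samples, seconds
```

In a setup like the one described, changes in such reflection patterns over time, rather than a single delay, would be the kind of input a learned model could map to gaze direction.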

For EyeEcho, one speaker and one microphone are located next to the glasses' hinges, pointing down to catch skin movement as facial expressions change. The reflected signals are also interpreted using AI.

With this technology, users can have hands-free video calls through an avatar, even in a noisy café or on the street. While some smartglasses have the ability to recognize faces or distinguish between a few specific expressions, currently, none track expressions continuously like EyeEcho.

These two advances have applications beyond enhancing a person's VR experience. GazeTrak could be used with screen readers to read out portions of text for people with low vision as they peruse a website.

GazeTrak and EyeEcho could also potentially help diagnose or monitor neurodegenerative diseases such as Alzheimer's and Parkinson's. Patients with these conditions often have abnormal eye movements and less expressive faces, and this type of technology could track the progression of the disease from the comfort of a patient's home.

Li will present GazeTrak at the Annual International Conference on Mobile Computing and Networking in the fall and EyeEcho at the Association for Computing Machinery CHI Conference on Human Factors in Computing Systems in May.

The findings are published on the arXiv preprint server.

More information: Ke Li et al, GazeTrak: Exploring Acoustic-based Eye Tracking on a Glass Frame, arXiv (2024). DOI: 10.48550/arxiv.2402.14634