May 20, 2020 feature

A deep-learning-enhanced e-skin that can decode complex human motions

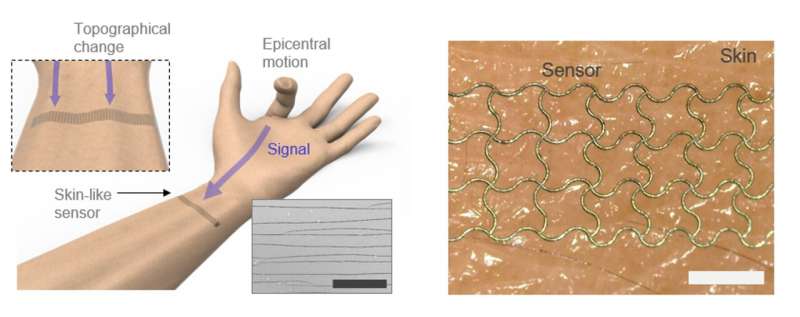

Researchers at Seoul National University and Korea Advanced Institute of Science and Technology (KAIST) have recently developed a sensor that can act as an electronic skin and integrated it with a deep neural network. This deep learning-enhanced e-skin system, presented in a paper published in Nature Communications, can capture human dynamic motions, such as rapid finger movements, from a distance.

The new system stems from an interdisciplinary collaboration involving experts in mechanical engineering and computer science. The two researchers who led the study are Seung Hwan Ko, a professor of mechanical engineering at Seoul National University, and Sungho Jo, a professor at the KAIST School of Computing.

For several years, Prof. Ko had been trying to develop highly sensitive strain sensors by generating cracks in metal nanoparticle films using laser technology. The resulting sensor arrays were then applied to a virtual reality (VR) glove designed to detect the movements of people's fingers.

"My lab typically used at least five to 10 strain sensors to predict the accurate hand motion (at least one to two sensors for each finger), because the required number of strain sensors increases as the complexity of the target system increases," Prof. Ko said. "A few years ago, I started asking myself the following question: Can we accurately predict hand motion with only one single strain sensor instead of using many sensors? Initially, this appeared to be a dumb question, because it was almost impossible to tell what finger the signal from a strain sensor came from."

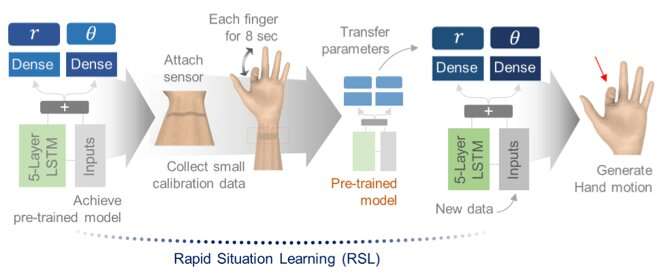

While Prof. Ko was trying to develop a single strain sensor capable of accurately predicting people's hand motions, Prof. Jo was investigating strategies to integrate machine learning techniques with state-of-the-art sensors. Prof. Jo believed that sequential sensor patterns generated by people's finger motion could be analyzed using machine learning, even if these signals were detected by a single sensor.

"We realized that if we were able to utilize these patterns using machine learning, we could clearly decouple multiple different behaviors observed by a single sensor," Prof. Jo said. "After close collaboration, we were able to develop a single deep-learned sensor that can predict complex hand motions."

When mounted on a user's wrist, the sensor developed by Prof. Ko, Prof. Jo and their colleagues can detect electrical signals produced by the user's hand movements, while also identifying which finger these signals are coming from. In contrast with more conventional e-skin systems, which require at least one sensor for each finger to accurately predict a person's hand motions, the new deep learning-powered sensor also works well when used in isolation.

"Conventional e-skins need at least five to 10 strain sensors to accurately predict hand motions, with the required number of strain sensors increasing as the complexity of a target system increases," Prof. Ko told TechXplore. "The deep learned electronic skin sensor we developed, on the other hand, can achieve this job with only a single sensor."

Rather than simply fitting the signals detected by the sensor using more conventional approaches, the researchers used a deep learning model to analyze signal patterns over time and ultimately uncover the finger motions underlying the collected data. Essentially, Prof. Ko, Prof. Jo and their colleagues proved that when combined with deep learning techniques, a single sensor could achieve results comparable to those of several sensors.
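To give a rough sense of what analyzing signal patterns over time can involve, the sketch below cuts one sensor's stream of readings into overlapping windows, the kind of fixed-length sequences a time-series model is typically trained on. This is only an illustration under stated assumptions: the article does not describe the team's actual preprocessing or network, and the function name, window width, step size and sample readings are all hypothetical.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], width: int, step: int) -> List[List[float]]:
    """Split a single-channel signal into overlapping fixed-length windows.

    Hypothetical helper for illustration; not taken from the paper.
    """
    if width <= 0 or step <= 0:
        raise ValueError("width and step must be positive")
    # Each window starts `step` samples after the previous one;
    # trailing samples that cannot fill a whole window are dropped.
    return [
        list(signal[start:start + width])
        for start in range(0, len(signal) - width + 1, step)
    ]


# Made-up readings from one strain sensor (arbitrary units).
readings = [0.0, 0.1, 0.4, 0.9, 0.5, 0.2, 0.1, 0.0]
windows = sliding_windows(readings, width=4, step=2)
```

Each window keeps the order of the samples, so a model that reads it sees how the signal rises and falls rather than a single isolated value. That temporal shape is what could, in principle, let one sensor's output be attributed to different fingers.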

"Our results imply that we can achieve complex detection with a lower number of sensors," Prof. Jo said. "This will dramatically simplify the systems needing sensors for complex detection. We also anticipate that the new approach will facilitate the indirect remote measurement of human motions, which is applicable to wearable VR/AR systems."

In initial evaluations, the e-skin system achieved highly promising results, successfully detecting and decoding complex finger motions in real time while operating consistently well regardless of its position on a user's wrist. In the future, the sensor could have a number of interesting applications, both in the development of robots and in wearable devices such as fitness trackers. Interestingly, when placed on a user's pelvis, the same system can also decode gait motions (i.e., walking styles), so it could be used to create small and efficient motion tracking devices.

"In this research, we used the machine learned sensor to decode hand motions," Prof. Ko said. "In the near future, however, we plan to build on this research to achieve more complex body motion prediction, such as that of legs, arms and perhaps even the entire body."

More information: Kyun Kyu Kim et al. A deep-learned skin sensor decoding the epicentral human motions, Nature Communications (2020). DOI: 10.1038/s41467-020-16040-y

© 2020 Science X Network