Robot uses tactile sign language to help deaf-blind people communicate independently

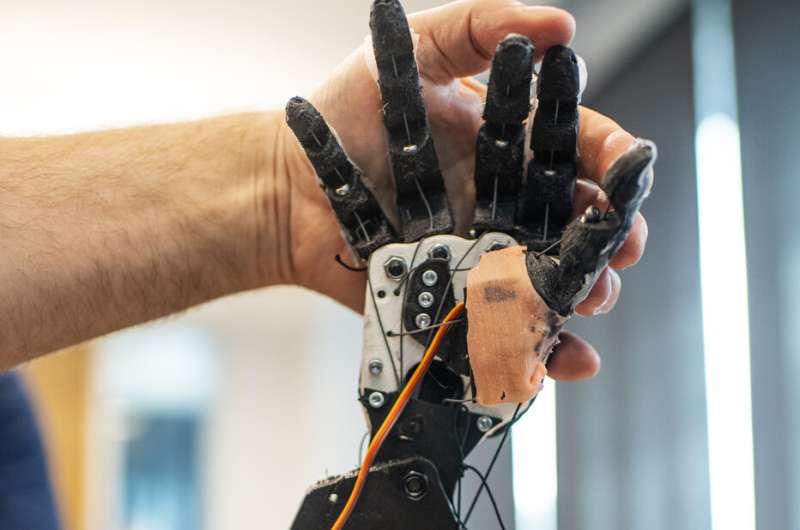

Jaimi Lard gets into position. She cups her left hand over the device, spreading her fingers across the top of it, and raises her right hand. When Lard is ready, Samantha Johnson presses a few keys on a laptop wired to the robot and then, with a mechanical buzzing sound filling the air, the device begins to move.

When the whirring stops, three of the fingers of the robotic hand are pointing directly upward, while the tips of the thumb and pointer finger are touching, forming a circle. Lard uses her left hand to feel what position the robotic hand is in, then moves her right hand to make the same sign.

"F, perfect!" Johnson exclaims.

The robotic arm, built by Johnson, a bioengineering graduate student at Northeastern, is designed to produce tactile sign language in order to enable more independence for people who, like Lard, are both deaf and blind. Lard is one of the members of the deaf-blind community who are helping Johnson test the robot and giving her feedback on how it could be improved.

"I'm very excited for this new opportunity to help improve this robot," Lard says, through an interpreter.

Johnson came up with the idea for a tactile sign language robot, which she has named TATUM (Tactile ASL Translational User Mechanism), as an undergraduate student at Northeastern. She took a course in sign language as a sophomore, and through that class she interacted with people from the Deaf-Blind Contact Center in Allston, Massachusetts, to work on her skills.

People who are deaf can communicate with their hearing friends and family through visually signed language, but for people who are both deaf and blind, language must be something they can touch. That means people who are both deaf and blind often need an interpreter present in person for interactions with others who do not know American Sign Language, so that they can feel the shapes the interpreter's hands are making.

"When I was watching the interpreter sign, I asked, 'How do you communicate without the interpreter?'" Johnson recalls. The answer was simply, "We don't."

The goal of developing a tactile sign language robot, Johnson says, is to create something that lets a person who relies on American Sign Language as their primary language communicate independently, without relying on another person to interpret. She sees the robot as potentially helpful at home, at a doctor's office, or in other settings where someone might want to communicate privately or where an interpreter might not be readily available.

Johnson is still in the early stages of developing the robot, which she is working on as her thesis project with Chiara Bellini, assistant professor of bioengineering at Northeastern, as her advisor. Right now, Johnson is focusing on the letters of the American Manual Alphabet and training the robot to finger-spell some basic words.

The ultimate goal is for the robot to be fluent in American Sign Language, so that the device can connect to text-based communication systems such as email, text messages, social media, or books. The idea is that the robot would be able to sign those messages or texts to the user. Johnson would also like to make the robot customizable because, as in any other language, some signs, words, or phrases are unique to particular regions, and some signs mean different things depending on the cultural context.