January 18, 2022 feature

Iconary: A Pictionary-like game to improve the communication skills of AI agents

While artificial intelligence (AI) agents have become increasingly skilled at communicating with humans, they still struggle with several aspects of language, including complex semantics. The term semantics refers to the area of linguistics that relates to the meaning associated with specific words or logical connections between different concepts.

A few years ago, researchers at the Allen Institute for AI (AI2) developed a game called Iconary, which is designed to improve the ability of AI systems to communicate and make connections between different objects. In a recent paper pre-published on arXiv and presented at last year's EMNLP conference, the researchers introduced a more advanced version of the game and trained machine learning algorithms to play it with each other or with humans.

"Our paper is based on a project at AI2 aimed at training models to play Iconary, a Pictionary-based game we created, where a player has to guess what another player is drawing," Christopher Clark, one of the researchers who carried out the study, told TechXplore. "The project started a couple years ago, but the paper was only recently published and presented at a conference, outlining a more challenging version of the game while using modern machine learning methods."

The overall aim of the recent work by Clark and his colleagues was to create a game that could be used as a testbed for AI agents, much as researchers used the games of Go and chess in the past. Instead of building a game in which players compete against each other, however, the researchers wanted to improve the ability of artificial agents to cooperate with humans and understand visual communication (i.e., images and drawings).

Iconary resembles the well-known game Pictionary, in which one player tries to convey a specific object or idea through drawings while the other players try to guess what it is. The game created by Clark and his colleagues works very similarly: one player, dubbed the 'guesser', needs to guess what another player, the 'drawer', is drawing.

"Initially, the drawer sees a short phrase (like 'holding a textbook') and then has to draw that phrase by selecting icons from a list of icons and then arranging them on a canvas," Clark said. "When they are done, the guesser is shown the drawing and tries to guess the starting phrase."

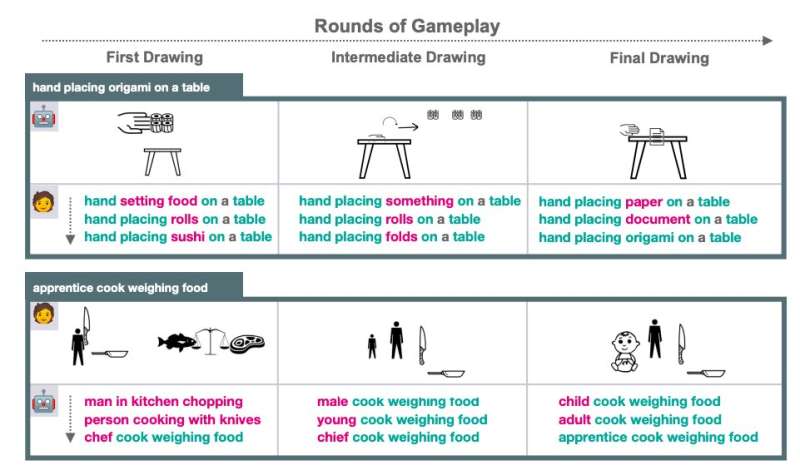

Just as in Pictionary, if the guesser figures out what the drawer was trying to convey, the team wins. If a guess is wrong, the guesser can either keep guessing or give up. If the guesser gives up, the drawer can revise the drawing, taking the previous guesses into account, and the cycle repeats. If the guesser has not identified the phrase after 4 minutes, the team loses the round.
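The round structure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' implementation: the function names (`play_round`, `toy_draw`, `toy_guess`), the simulated clock (`seconds_per_action`), and the fixed number of guesses before giving up (`guesses_per_drawing`) are all assumptions introduced for the example. Only the 4-minute limit and the draw, guess, redraw cycle come from the article.

```python
from dataclasses import dataclass, field
from typing import Callable, List

TIME_LIMIT_SECONDS = 4 * 60  # the article states a 4-minute limit per round


@dataclass
class RoundResult:
    won: bool
    drawings: List[List[str]] = field(default_factory=list)
    guesses: List[str] = field(default_factory=list)


def play_round(
    phrase: str,
    draw: Callable[[str, List[str]], List[str]],
    guess: Callable[[List[str], List[str]], str],
    seconds_per_action: int = 30,   # assumption: simulated cost of each action
    guesses_per_drawing: int = 3,   # assumption: guesser gives up after 3 misses
) -> RoundResult:
    """Simulate one cooperative round: draw, guess, redraw, until time runs out."""
    elapsed = 0
    result = RoundResult(won=False)
    while elapsed < TIME_LIMIT_SECONDS:
        # The drawer (re)draws, taking all earlier wrong guesses into account.
        drawing = draw(phrase, result.guesses)
        result.drawings.append(drawing)
        elapsed += seconds_per_action
        for _ in range(guesses_per_drawing):
            if elapsed >= TIME_LIMIT_SECONDS:
                break
            attempt = guess(drawing, result.guesses)
            result.guesses.append(attempt)
            elapsed += seconds_per_action
            if attempt == phrase:
                result.won = True
                return result
        # Falling out of the inner loop models the guesser giving up.
    return result


def toy_draw(phrase: str, previous_guesses: List[str]) -> List[str]:
    """Hypothetical drawer: adds a 'school bus' icon after seeing wrong guesses."""
    icons = ["hand", "book"]
    if previous_guesses:
        icons.append("school bus")
    return icons


def toy_guess(drawing: List[str], previous_guesses: List[str]) -> str:
    """Hypothetical guesser: reads book + school bus as 'textbook'."""
    return "holding a textbook" if "school bus" in drawing else "holding a book"


outcome = play_round("holding a textbook", toy_draw, toy_guess)
```

In this toy run the guesser misses on the first drawing, gives up, and succeeds once the drawer adds the extra icon, which mirrors the revise-and-retry cycle of the game.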

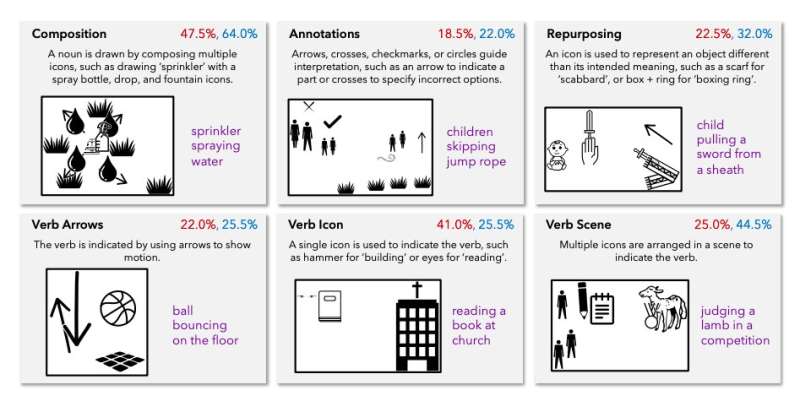

"What makes Iconary hard is that many nouns and verbs will not have icons that directly represent them, so drawers have to come up with ways to indirectly indicate them to the guesser," Clark explained. "For example, there is no 'textbook' icon, so drawers need to indicate that word indirectly by, for example, combining a book and a school bus icon. This makes understanding Iconary drawings very different from understanding photographic images."

Instead of literally depicting a scene, drawings produced while playing Iconary hold implicit meanings and are not as easy for AIs to interpret. They are based on semantic strategies such as visual metaphors (e.g., a book and a school bus to convey 'textbook'), annotations (e.g., an arrow and a door to convey 'door handle'), referencing canonical examples (e.g., showing a lit and an unlit lightbulb to convey 'turn off'), and other indirect communication strategies.

While visual communication and complex semantic processing are innate skills for humans, AI agents typically struggle with them. Iconary is a valuable platform to test whether AIs could be taught these skills over time.

"Iconary also has some interesting game-playing elements, since good players should adjust their approaches to guessing/drawing based on how the other player is behaving, for example by adjusting drawings to head off misconceptions that a guesser has or looking at what key changes the drawer is making to figure out what to focus on," Clark said.

As part of their recent study, the researchers trained AI algorithms on over 55,000 Iconary rounds played between human players. When the researchers then tested the algorithms' performance, the results were promising. Nonetheless, human players often outperformed the AIs, particularly in their ability to convey objects or ideas through drawings.

"A particular challenge for our setup is that we tested the model on phrases that contained words that the model did not see in the human/human games we use as training data, which means models could not play the game just by re-using/recognizing drawing strategies observed during training," Clark said. "Overall, we were able to show we can train AIs that were reasonably good at understanding the human-authored drawings, although not quite as good as skilled humans."
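One simple way to build an evaluation set of this kind is to pick a set of held-out words and route every phrase containing any of them to the test set. The sketch below illustrates that idea only; it is not the procedure used in the paper, and the function name `split_by_held_out_words` and the example phrases are hypothetical.

```python
from typing import Iterable, List, Tuple


def split_by_held_out_words(
    phrases: Iterable[str], held_out_words: Iterable[str]
) -> Tuple[List[str], List[str]]:
    """Return (train, test); test holds every phrase with a held-out word."""
    held_out = {word.lower() for word in held_out_words}
    train: List[str] = []
    test: List[str] = []
    for phrase in phrases:
        words = set(phrase.lower().split())
        # Any overlap with the held-out vocabulary sends the phrase to test,
        # so no held-out word can ever appear in the training phrases.
        if words & held_out:
            test.append(phrase)
        else:
            train.append(phrase)
    return train, test


# Hypothetical example phrases, loosely based on those quoted in the article.
example_phrases = [
    "holding a textbook",
    "holding a book",
    "turn off the light",
    "open the door",
]
train_phrases, test_phrases = split_by_held_out_words(
    example_phrases, held_out_words=["textbook", "door"]
)
```

With a split like this, a model cannot succeed on a test phrase merely by recalling how humans drew the same word during training.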

In the future, Iconary could prove to be a useful testbed for AI algorithms, allowing researchers to evaluate their ability to semantically connect text and drawings. So far, Clark and his colleagues have found that AI agents are significantly better at guessing implicitly communicated concepts than at conveying them through drawings.

"Building drawer AIs was harder, but we did see some cases where our model was able to construct effective drawings that were novel, meaning they were unlike ones observed in the training data," Clark said. "This shows the AIs could apply world-knowledge to the drawing task and understand drawing at a deeper level than just memorizing drawing strategies humans used in the training data. I found the drawer results more interesting because I think drawing requires more creativity and is a more novel task than guessing."

Today, humans rely on visual communication in many different contexts, for instance when interpreting street signs, furniture assembly instructions, or emojis. Iconary could thus also be a valuable tool for creating AI systems that are better at understanding these everyday visual forms of communication.

"I am not currently working on follow-up work, but I think training models to be better drawers is a very interesting challenge," Clark said. "Our AI drawers are still significantly worse than human drawers."

More information: Iconary: a Pictionary-based game for testing multimodal communication with drawings and text. arXiv:2112.00800 [cs.CL]. arxiv.org/abs/2112.00800

© 2022 Science X Network